Only released in EOL distros:

Package Summary

Performs object segmentation and simple object recognition for constrained scenes.

- Author: Marius Muja and Matei Ciocarlie

- License: BSD

- Repository: wg-ros-pkg

- Source: svn https://code.ros.org/svn/wg-ros-pkg/stacks/tabletop_object_perception/tags/tabletop_object_perception-0.4.3

Package Summary

Performs object segmentation and simple object recognition for constrained scenes.

- Author: Marius Muja and Matei Ciocarlie

- License: BSD

- Source: svn https://code.ros.org/svn/wg-ros-pkg/stacks/pr2_object_manipulation/branches/0.5-branch

pr2_object_manipulation: active_realtime_segmentation | fast_plane_detection | manipulation_worlds | object_recognition_gui | object_segmentation_gui | pick_and_place_demo_app | pr2_create_object_model | pr2_grasp_adjust | pr2_gripper_grasp_controller | pr2_gripper_grasp_planner_cluster | pr2_gripper_reactive_approach | pr2_gripper_sensor_action | pr2_gripper_sensor_controller | pr2_gripper_sensor_msgs | pr2_handy_tools | pr2_interactive_gripper_pose_action | pr2_interactive_manipulation | pr2_interactive_object_detection | pr2_manipulation_controllers | pr2_marker_control | pr2_navigation_controllers | pr2_object_manipulation_launch | pr2_object_manipulation_msgs | pr2_pick_and_place_demos | pr2_pick_and_place_tutorial | pr2_tabletop_manipulation_launch | pr2_wrappers | rgbd_assembler | robot_self_filter_color | segmented_clutter_grasp_planner | tabletop_collision_map_processing | tabletop_object_detector | tf_throttle

Package Summary

Performs object segmentation and simple object recognition for constrained scenes.

- Author: Marius Muja and Matei Ciocarlie

- License: BSD

- Source: svn https://code.ros.org/svn/wg-ros-pkg/stacks/pr2_object_manipulation/branches/0.6-branch

Package Summary

Performs object segmentation and simple object recognition for constrained scenes.

- Author: Marius Muja and Matei Ciocarlie

- License: BSD

- Source: git https://github.com/ros-interactive-manipulation/pr2_object_manipulation.git (branch: groovy-devel)

Contents

Object Perception

There are two main components of object perception:

- object segmentation: which part of the sensed data corresponds to the object we want to pick up?

- object recognition: which object is it?

Our manipulation pipeline always requires segmentation. If recognition is performed and is successful, this further informs the grasp point selection mechanism. If not, we can still grasp the unknown object based only on perceived data.

Note that it is possible to avoid segmentation as well and select grasp points without knowing object boundaries. The current pipeline does not have this functionality, but it might gain it in the future.

To perform these tasks we rely the following assumptions:

- the objects are resting on a table, which is the dominant plane in the scene

- the minimum distance between two objects exceeds a given threshold (3cm in our demo)

- in addition, object recognition can only handle 2 degrees of freedom: translation along the X and Y axes (with the Z axis assumed to be pointing "up" relative to the table). Therefore, in order to recognize an object:

- it must be rotationally symmetric

- it must have a known orientation, such as a glass or a bowl sitting "upright" on the table.

The sensor data that we use consists of a point cloud from the narrow stereo or Kinect cameras. We perform the following steps:

- segmentation

- the table is detected by finding the dominant plane in the point cloud using RANSAC;

points above the table are considered to belong to graspable objects. We use a clustering algorithm to identify individual objects. We refer to the points corresponding to an individual object as clusters.

- recognition

- for each cluster, a simple iterative fitting technique (a distant cousin of ICP) is used to see how well it corresponds to each mesh in our database of models. If a good fit is found, the database id of the model is returned along with the cluster.

- note that our current fitting method operates in 2D, since based on our assumptions (object resting upright on the known surface of the table) the other 4 dimensions are fixed

The output from this components consists of the location of the table, the identified point clusters, and the corresponding database object id and fitting pose for those clusters found to be similar to database objects.

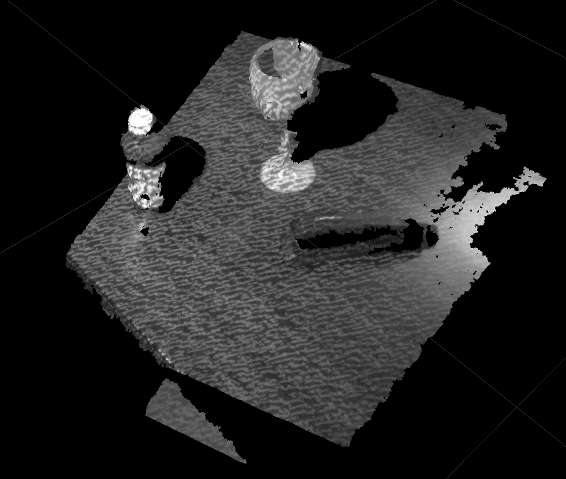

Narrow Stereo image of a table and three objects

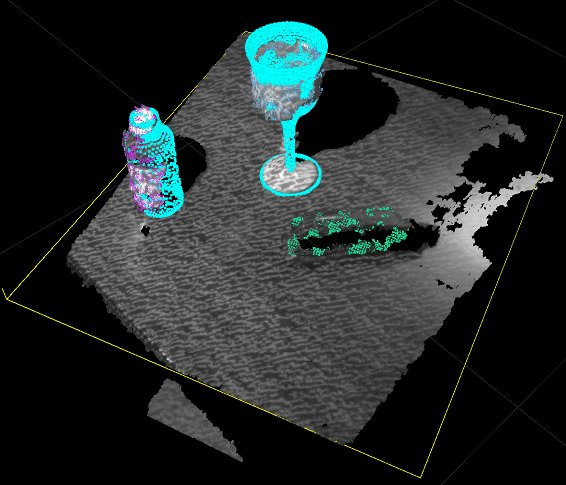

Detection result: table plane has been detected (note the yellow contour). Objects have been segmented (note the different color point clouds superimposed on them). The bottle and the glass have also been recognized (as shown by the cyan mesh further superimposed on them)

Nodes

tabletop_segmentation

Segmentation is performed by the tabletop_segmentation node. It implements the TabletopSegmentation service.Subscribed Topics

cloud_in (sensor_msgs/PointCloud2)- The pointcloud input that is processed to find the table and tabletop objects.

- Markers for visualization of objects segmented in rviz.

Published Topics

markers_out (visualization_msgs/Marker)- Markers for visualization of objects segmented in rviz.

Services

segmentation_srv (tabletop_object_detector/TabletopSegmentation)- The service to call to get the segmented objects.

Parameters

quality_threshold (float, default: 0.005)- a unit-less threshold that is compared to the results of the model recognition to decide if a model recognition is good enough to be used. Lower numbers indicate a better fit. Reduce this if you want the recognition to be more strict.

- the min distance between objects, provided as input to the segmentation algorithm. Objects closer to each other than this threshold will be merged into the same cluster.

- if non-empty, any received point clouds are first transformed in this frame, before any processing. Useful for operating in a frame where intuitive filters can be applied (e.g. tables can usually be found between z=0.5m and z=1.5m if operating in a frame with the origin on the floor and z pointing up, such as the base_link frame of the PR2)

Filtering Parameters

All of these parameters are applied to decide what part of the point cloud the detector should focus on. They must make sense in the processing_frame. If processing_frame is unspecified, all processing takes place in the native frame of the incoming cloud, which on the PR2 robot is the camera frame. Therefore, all these parameters have default values which makes sense in the camera frame.

z_filter_min (float, default: 0.4)

- Incoming point cloud first filtered and only points with z coordinate greater than this value are kept.

- Incoming point cloud first filtered and only points with z coordinate less than this value are kept.

- Incoming point cloud first filtered and only points with x coordinate greater than this value are kept.

- Incoming point cloud first filtered and only points with z coordinate less than this value are kept.

- Incoming point cloud first filtered and only points with x coordinate greater than this value are kept.

- Incoming point cloud first filtered and only points with z coordinate less than this value are kept.

- after the table is detected, only points with height above the table by at least this value are kept

- after the table is detected, only points with height above the table by at most this value are kept

- Whether the natural "up" direction (relative to gravity) is closer to positive or negative

tabletop_object_recognition

Recognition is performed by the tabletop_object_recognition node. It implements the TabletopObjectRecognition service. Note that this service directly takes as input the result of the segmentation service above. Note that object recognition needs a connection to the database of known models to perform recognition. This database itself is documented in household_objects_database. The object recognition service will look for and use the services provided by the household_object_database_node. The names of these services are provided as parameters.Published Topics

markers_out (visualization_msgs/Marker)- Markers for visualization of objects recognized in rviz.

Services

object_recognition_srv (tabletop_object_detector/TabletopObjectRecognition)- Runs object recognition.

- Clears the exclusions list.

- Adds the given model to the exclusion list.

- Negates exclusions

Parameters

fit_merge_threshold (float, default: 0.05)- Service name for get_model_list from the object database node.

- Service name for get_model_mesh from the object database node.}

tabletop_complete

Most often, segmentation and recognition are used together. To simplify this process, the tabletop_complete node will pipe them together, passing the results of segmentation directly to recognition so you don't have to worry about that. It implements the TabletopDetection service.Services

(tabletop_object_detector/TabletopDetection)

Running the Manipulation Pipeline

To launch the manipulation pipeline, complete with the sensor processing provided here, and execute pickup and place tasks using the PR2 robot, tutorials and launch files are provided in pr2_tabletop_manipulation_apps.