Lixin Yang · Licheng Zhong · Pengxiang Zhu · Xinyu Zhan · Junxiao Kong · Jian Xu · Cewu Lu

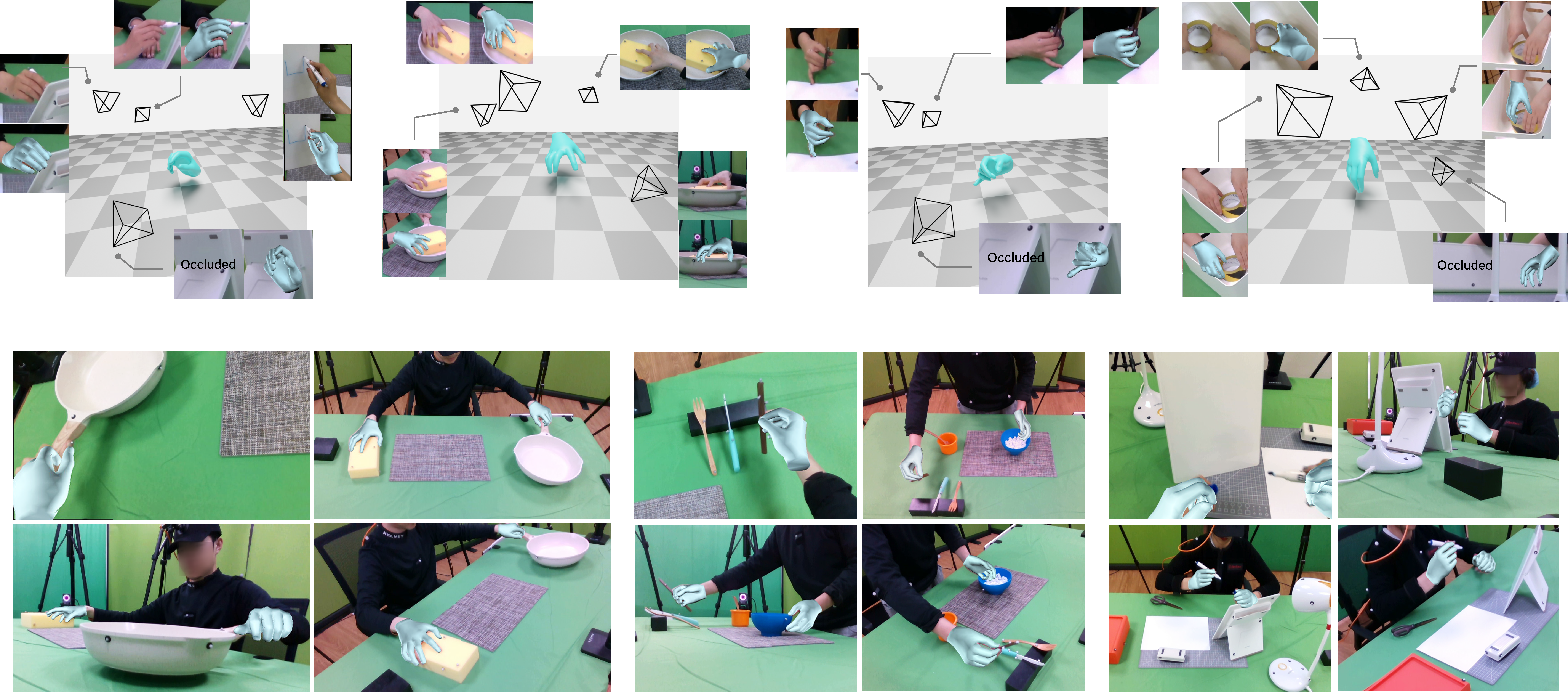

POEM is a generalizable multi-view hand mesh reconstruction (HMR) model designed for practical use in real-world hand motion capture scenarios. It embeds static basis points within the multi-view stereo space to serve as a medium for fusing features across different views. To infer an accurate 3D hand mesh from multi-view images, POEM introduces a point-embedded transformer decoder. Trained on a combination of five large-scale multi-view datasets with sufficient data augmentation, POEM demonstrates superior generalization ability in real-world applications.

- See `docs/installation.md` to set up the environment and install all the required packages.

- See `docs/datasets.md` to download all the datasets and additional assets required.

We provide four models with different configurations for training and evaluation. We have evaluated the models on multiple datasets.

- Set `${MODEL}` to one of `[small, medium, medium_MANO, large]`.
- Set `${DATASET}` to one of `[HO3D, DexYCB, Arctic, Interhand, Oakink, Freihand]`.

Download the pretrained checkpoints from 🔗 ckpt_release and move the contents to `./checkpoints`.
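Before running evaluation, it can help to confirm that every released checkpoint ended up where the evaluation command expects it, i.e. `./checkpoints/${MODEL}.pth.tar`. The sketch below is a hypothetical helper, not part of the POEM repo; it assumes only that naming pattern and the four model names listed above.

```shell
# Hypothetical helper (not part of the POEM repo): report which released
# checkpoints are present, assuming the naming pattern ${MODEL}.pth.tar.
check_ckpts() {
    ckpt_dir="${1:-./checkpoints}"   # defaults to ./checkpoints
    missing=0
    for model in small medium medium_MANO large; do
        if [ -f "${ckpt_dir}/${model}.pth.tar" ]; then
            echo "found:   ${ckpt_dir}/${model}.pth.tar"
        else
            echo "missing: ${ckpt_dir}/${model}.pth.tar"
            missing=$((missing + 1))
        fi
    done
    # Exit status is non-zero if any checkpoint is missing
    [ "$missing" -eq 0 ]
}
```

Run it as `check_ckpts ./checkpoints`; the non-zero exit status on a missing file makes it easy to guard a script with `check_ckpts || exit 1`.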

The scripts take the following command-line arguments:

- `-g, --gpu_id`: visible GPUs for training, e.g. `-g 0,1,2,3`. Evaluation only supports a single GPU.
- `-w, --workers`: `num_workers` for data loading, e.g. `-w 4`.
- `-p, --dist_master_port`: port for distributed training, e.g. `-p 60011`. Set a different `-p` for each training process.
- `-b, --batch_size`: batch size, e.g. `-b 32`. The default is specified in the config file and is overridden if `-b` is provided.
- `--cfg`: config file for the experiment, e.g. `--cfg config/release/train_${MODEL}.yaml`.
- `--exp_id`: name of the experiment, e.g. `--exp_id ${EXP_ID}`. When `--exp_id` is provided, the code requires that the git repo contains no uncommitted changes. If it is omitted, the name defaults to `default` for training and `eval_{cfg}` for evaluation. All results are saved in `exp/${EXP_ID}_{timestamp}`.
- `--reload`: path to the checkpoint (`.pth.tar`) to be loaded.
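To illustrate how these flags combine, the hypothetical helper below only prints (does not run) a training command for a given model, GPU list, master port, and experiment name. The helper, its argument order, and the example values are assumptions for illustration; the flags themselves are the ones listed above.

```shell
# Hypothetical dry-run helper (not part of the POEM repo): print a training
# command built from the documented flags.
# Arguments: MODEL GPU_IDS PORT EXP_ID [BATCH_SIZE]
train_cmd() {
    model="$1"; gpus="$2"; port="$3"; exp_id="$4"; batch="$5"
    cmd="python scripts/train_ddp_wds.py --cfg config/release/train_${model}.yaml"
    # -w 4 follows the example value used in this README
    cmd="$cmd -g ${gpus} -w 4 -p ${port} --exp_id ${exp_id}"
    # -b is optional; when given it overrides the batch size in the config
    if [ -n "$batch" ]; then
        cmd="$cmd -b ${batch}"
    fi
    printf '%s\n' "$cmd"
}

# Two runs launched side by side on disjoint GPUs use different -p ports
train_cmd small 0,1 60011 small_run
train_cmd large 2,3 60012 large_run 16
```

Keeping the helper as a dry run makes it easy to inspect the command before launching; pipe its output into `sh` to execute it. Remember that `--exp_id` requires a clean git working tree.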

Set `${PATH_TO_CKPT}` to `./checkpoints/${MODEL}.pth.tar`, then run the following command. Note that the command-line arguments essentially modify the config file in place to suit different settings. `view_min` and `view_max` specify the range of views fed into the model. Use the `--draw` option to render the results; note that it is incompatible with computing the AUC metric.

```shell
$ python scripts/eval_single.py --cfg config/release/eval_single.yaml \
    -g ${gpu_id} \
    --reload ${PATH_TO_CKPT} \
    --dataset ${DATASET} \
    --view_min ${MIN_VIEW} \
    --view_max ${MAX_VIEW} \
    --model ${MODEL}
```

The evaluation results will be saved at `exp/${EXP_ID}_{timestamp}/evaluations`.
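To evaluate one checkpoint on every supported dataset, the commands can be generated in a loop. The sketch below is hypothetical: `eval_sweep` is not part of the repo, it prints one evaluation command per dataset rather than running it, and the model name, GPU id, and view range in the usage line are placeholder values, not recommended settings.

```shell
# Hypothetical dry-run sweep (not part of the POEM repo): print one
# eval_single.py command per dataset for a single checkpoint.
# Arguments: MODEL GPU_ID MIN_VIEW MAX_VIEW
eval_sweep() {
    model="$1"; gpu_id="$2"; min_view="$3"; max_view="$4"
    for dataset in HO3D DexYCB Arctic Interhand Oakink Freihand; do
        cmd="python scripts/eval_single.py --cfg config/release/eval_single.yaml"
        cmd="$cmd -g ${gpu_id} --reload ./checkpoints/${model}.pth.tar"
        cmd="$cmd --dataset ${dataset} --view_min ${min_view} --view_max ${max_view}"
        printf '%s\n' "$cmd --model ${model}"
    done
}

# Placeholder values: medium model, GPU 0, 1 to 8 views
eval_sweep medium 0 1 8
```

Because evaluation supports only a single GPU, piping the output into `sh` (e.g. `eval_sweep medium 0 1 8 | sh`) runs the six evaluations one after another.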

We train on a mixture of multiple datasets packed with webdataset. Execute the following command to train a specific model on the provided datasets.

```shell
$ python scripts/train_ddp_wds.py --cfg config/release/train_${MODEL}.yaml -g 0,1,2,3 -w 4
```

Training progress can be monitored with TensorBoard:

```shell
$ cd exp/${EXP_ID}_{timestamp}/runs/
$ tensorboard --logdir .
```

All checkpoints saved during training are stored in `exp/${EXP_ID}_{timestamp}/checkpoints/`, where `checkpoints/checkpoint` records the most recent checkpoint.
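Since every run gets its own `exp/${EXP_ID}_{timestamp}` directory, a small helper can locate the latest one instead of typing the timestamp by hand. This is a hypothetical sketch, not part of the repo; because the exact timestamp format is not documented here, it picks the most recently modified directory (`ls -t`) rather than parsing the name.

```shell
# Hypothetical helper (not part of the POEM repo): print the most recently
# modified run directory matching ${EXP_ROOT}/${EXP_ID}_*, without a trailing
# slash. Uses directory mtime instead of parsing the timestamp.
# Arguments: EXP_ROOT EXP_ID
latest_run() {
    dir=$(ls -td "$1/$2"_*/ 2>/dev/null | head -n 1)
    # Fail (non-zero status, no output) when no run directory matches
    [ -n "$dir" ] && printf '%s\n' "${dir%/}"
}
```

For example, `tensorboard --logdir "$(latest_run exp my_exp)/runs"` (with `my_exp` as a placeholder ID). Note that the glob also matches IDs that merely start with the given one (e.g. `my_exp_v2`), so pick experiment names that are not prefixes of each other.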

This code and model are available for non-commercial scientific research purposes as defined in the LICENSE file. By downloading and using the code and model, you agree to the terms in the LICENSE.

```bibtex
@misc{yang2024multiviewhandreconstructionpointembedded,
  title={Multi-view Hand Reconstruction with a Point-Embedded Transformer},
  author={Lixin Yang and Licheng Zhong and Pengxiang Zhu and Xinyu Zhan and Junxiao Kong and Jian Xu and Cewu Lu},
  year={2024},
  eprint={2408.10581},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2408.10581},
}
```

For more questions, please contact Lixin Yang: [email protected]