
Failed fuse leaky relu with convolution on RTX 3090 #138


Hi, DirectML fuses operators opportunistically - that is, when it is both possible to fuse and there is a performance benefit to doing so. Unfortunately in this case it appears it wasn't possible to fuse the LEAKY_RELU with the metacommand (as the level of metacommand support can vary by hardware and driver version). You might be able to achieve the fusion by using the DISABLE_METACOMMANDS flag, but that's likely to result in worse performance. Let us know if you have an end-to-end scenario that's impacted by this - if there's data that shows a substantial performance difference, this is something we can raise with hardware vendors as a potential optimization in future.
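To make "fusion" concrete, here is a minimal CPU sketch, assuming a hypothetical 1-D convolution (cross-correlation, as CNN frameworks usually implement it) with one filter and a bias. The names `convThenActivation` and `fusedConvActivation` are illustrative only, not DirectML APIs. The unfused path writes the convolution result to an intermediate buffer and applies leaky ReLU in a second pass; the fused path applies the activation to each accumulator before storing it. Both produce identical values, so fusion is purely an execution optimization. The sketch says nothing about when DirectML or a given metacommand is able to perform it.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Leaky ReLU: passes non-negative values through, scales negatives by alpha.
float leakyRelu(float x, float alpha) {
    return x >= 0.0f ? x : alpha * x;
}

// Unfused (hypothetical helper): a convolution pass fills an intermediate
// buffer, then a second pass applies the activation.
// Assumes a non-empty filter no longer than the input.
std::vector<float> convThenActivation(const std::vector<float>& input,
                                      const std::vector<float>& filter,
                                      float bias, float alpha) {
    assert(!filter.empty() && input.size() >= filter.size());
    const std::size_t outSize = input.size() - filter.size() + 1;
    std::vector<float> intermediate(outSize);
    for (std::size_t i = 0; i < outSize; ++i) {
        float acc = bias;
        for (std::size_t k = 0; k < filter.size(); ++k) {
            acc += input[i + k] * filter[k];
        }
        intermediate[i] = acc;  // stored, then re-read by the second pass
    }
    std::vector<float> output(outSize);
    for (std::size_t i = 0; i < outSize; ++i) {
        output[i] = leakyRelu(intermediate[i], alpha);
    }
    return output;
}

// Fused (hypothetical helper): the activation is applied to each accumulator
// before it is stored, so no intermediate buffer or second pass is needed.
std::vector<float> fusedConvActivation(const std::vector<float>& input,
                                       const std::vector<float>& filter,
                                       float bias, float alpha) {
    assert(!filter.empty() && input.size() >= filter.size());
    const std::size_t outSize = input.size() - filter.size() + 1;
    std::vector<float> output(outSize);
    for (std::size_t i = 0; i < outSize; ++i) {
        float acc = bias;
        for (std::size_t k = 0; k < filter.size(); ++k) {
            acc += input[i + k] * filter[k];
        }
        output[i] = leakyRelu(acc, alpha);
    }
    return output;
}
```

On a GPU the same idea saves a separate dispatch and a round trip of the intermediate tensor through memory, which is where any performance benefit of fusion comes from.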


Hi @adtsai , DISABLE_METACOMMANDS will result into bad performance. I replaced the model in the DirectMLSuperResolution sample with a seven-layer CNN and tested it.The results are as follows. Environment

Test Result

Summary

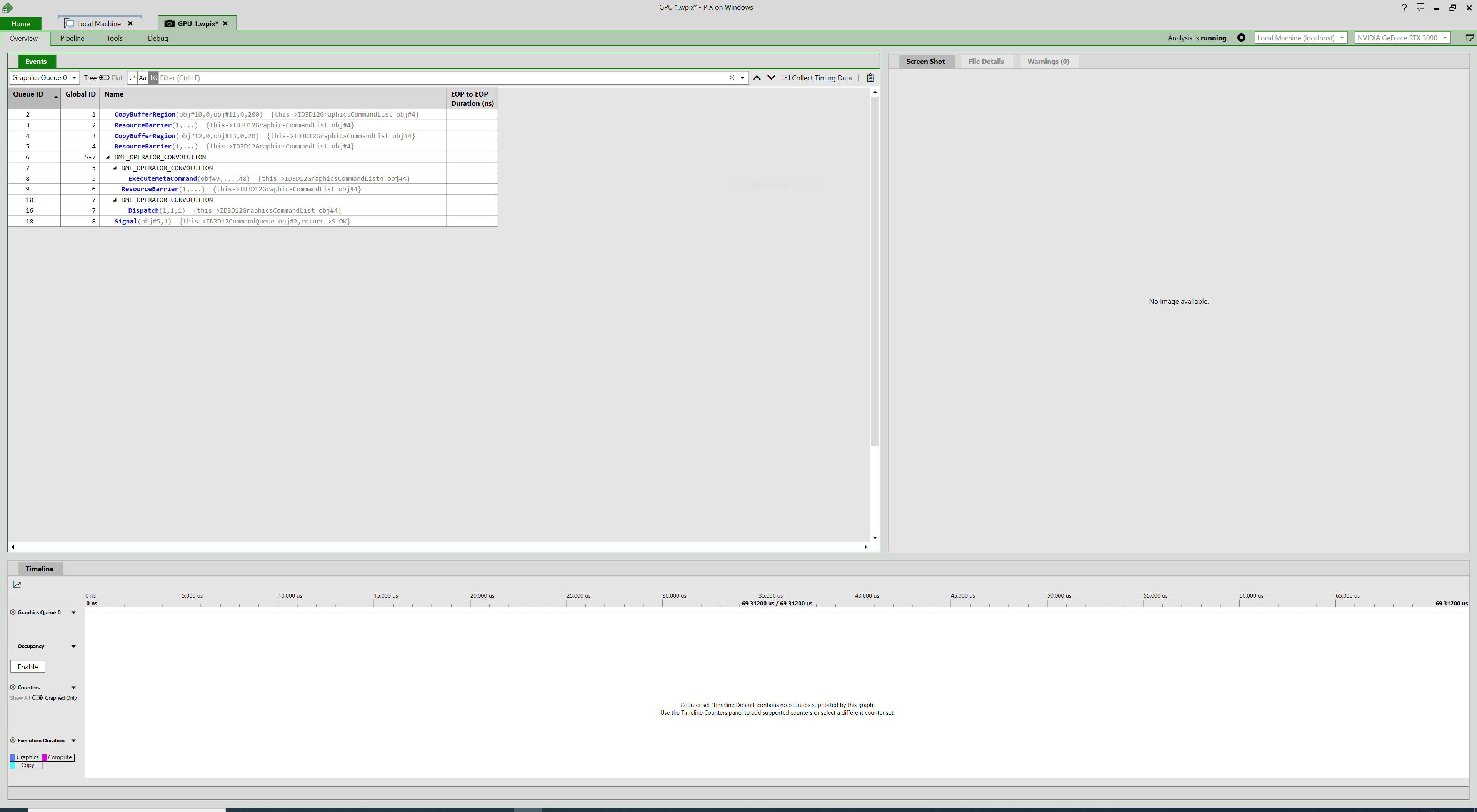

PIX AnalysisDemo model on 5700XT |

My test code: https://github.com/jb2020-super/test-DirectML.git

According to the PIX analysis, the convolution with FusedActivation set to DML_OPERATOR_ACTIVATION_LEAKY_RELU is split into two convolution ops. When I use DML_OPERATOR_ACTIVATION_RELU instead, the fusion succeeds. How can I solve this?
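For reference, the two fused activations being compared differ only in how they treat negative inputs: RELU clamps them to zero, while LEAKY_RELU scales them by a parameter Alpha (the Alpha field of DML_ACTIVATION_LEAKY_RELU_OPERATOR_DESC). Below is a minimal element-wise sketch of both as plain C++ functions, not DirectML calls. It does not explain why the metacommand fuses one and not the other; per the reply above, that depends on hardware and driver support.

```cpp
#include <algorithm>
#include <cassert>

// ReLU: f(x) = max(0, x). Takes no parameters.
float relu(float x) {
    return std::max(0.0f, x);
}

// Leaky ReLU: f(x) = x for x >= 0, alpha * x otherwise.
// alpha plays the role of the Alpha field in
// DML_ACTIVATION_LEAKY_RELU_OPERATOR_DESC.
float leakyRelu(float x, float alpha) {
    return x >= 0.0f ? x : alpha * x;
}
```

With alpha = 0 the two functions agree on every input, so the only extra work a fused LEAKY_RELU asks of the convolution kernel is the multiply by Alpha on the negative branch.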
