In this class, you'll learn how to perform web scraping on Covid-19 data using python.

- Python Basics

- pandas Basics

- HTML Basics

- CSS module

- Beautiful soup/bs4 module

- requests module

- lxml module

Web scraping, also known as web data extraction, is the process of retrieving or “scraping” data from a website. This information is collected and then exported into a format that is more useful for the user. Be it a spreadsheet or an API.

- Always be respectful and try to get permission to scrape, do not bombard a website with scraping requests, otherwise, your IP address may get blocked!

- Be aware that websites change often, meaning your code could go from working to totally broken from one day to the next.

- Request for a response from the webpage

- Parse and extract with the help of Beautiful soup and lxml

- Download and export the data with pandas into excel

It can serve several purposes, most popular ones are Investment Decision Making, Competitor Monitoring, News Monitoring, Market Trend Analysis, Appraising Property Value, Estimating Rental Yields, Politics and Campaigns and many more.

We will use Worldometer website to fetch the data because we are interested in the data contained in a table at Worldometer’s website, where there are lists all the countries together with their current reported coronavirus cases, new cases for the day, total deaths, new deaths for the day, etc.

<!DOCTYPE html>

<html>

<head>

</head>

<body>

<h1> Scrapping </h1>

<p> Hello </p>

</body>

</html><!DOCTYPE html>: HTML documents must start with a type declaration.- The HTML document is contained between

<html>and</html>. - The meta and script declaration of the HTML document is between

<head>and</head>. - The visible part of the HTML document is between

<body>and</body>tags. - Title headings are defined with the

<h1>through<h6>tags. - Paragraphs are defined with the

<p>tag. - Other useful tags include

<a>for hyperlinks,<table>for tables,<tr>for table rows, and<td>for table columns.

Open your Prompt

and type and run the following command (individually):

-

pip install requests -

pip install lxml -

pip install bs4

- Use the requests library to grab the page.

- This may fail if you have a firewall blocking Python/Jupyter.

- Sometimes you need to run this twice if it fails the first time.

BeautifulSoup library already has lots of built-in tools and methods to grab information from a string of this nature (basically an HTML file). It is a Python library for pulling data out of HTML and XML files.

Using BeautifulSoup we can create a "soup" object that contains all the "ingredients" of the webpage.

Once Installed now we can import it inside our python code.

You can and

Starring and Forking is free for you, but it tells me and other people that it was helpful and you like this tutorial.

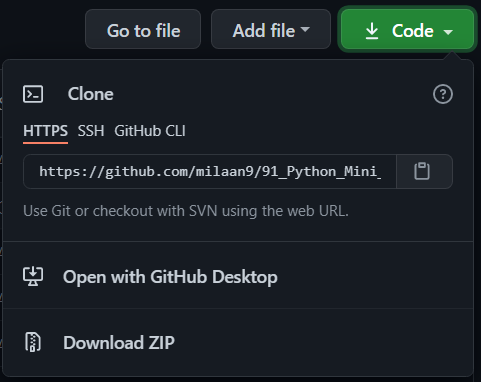

Go here if you aren't here already and click ➞ ✰ Star and ⵖ Fork button in the top right corner. You will be asked to create a GitHub account if you don't already have one.

-

Go

hereand click the big green ➞Codebutton in the top right of the page, then click ➞Download ZIP. -

Extract the ZIP and open it. Unfortunately I don't have any more specific instructions because how exactly this is done depends on which operating system you run.

-

Launch ipython notebook from the folder which contains the notebooks. Open each one of them

Kernel > Restart & Clear Output

This will clear all the outputs and now you can understand each statement and learn interactively.

If you have git and you know how to use it, you can also clone the repository instead of downloading a zip and extracting it. An advantage with doing it this way is that you don't need to download the whole tutorial again to get the latest version of it, all you need to do is to pull with git and run ipython notebook again.

I'm Dr. Milaan Parmar and I have written this tutorial. If you think you can add/correct/edit and enhance this tutorial you are most welcome🙏

See github's contributors page for details.

If you have trouble with this tutorial please tell me about it by Create an issue on GitHub. and I'll make this tutorial better. This is probably the best choice if you had trouble following the tutorial, and something in it should be explained better. You will be asked to create a GitHub account if you don't already have one.

If you like this tutorial, please give it a ⭐ star.

You may use this tutorial freely at your own risk. See LICENSE.

Copyright (c) 2020 Dr. Milaan Parmar