Created by Panos Achlioptas, Olga Diamanti, Ioannis Mitliagkas, Leonidas J. Guibas.

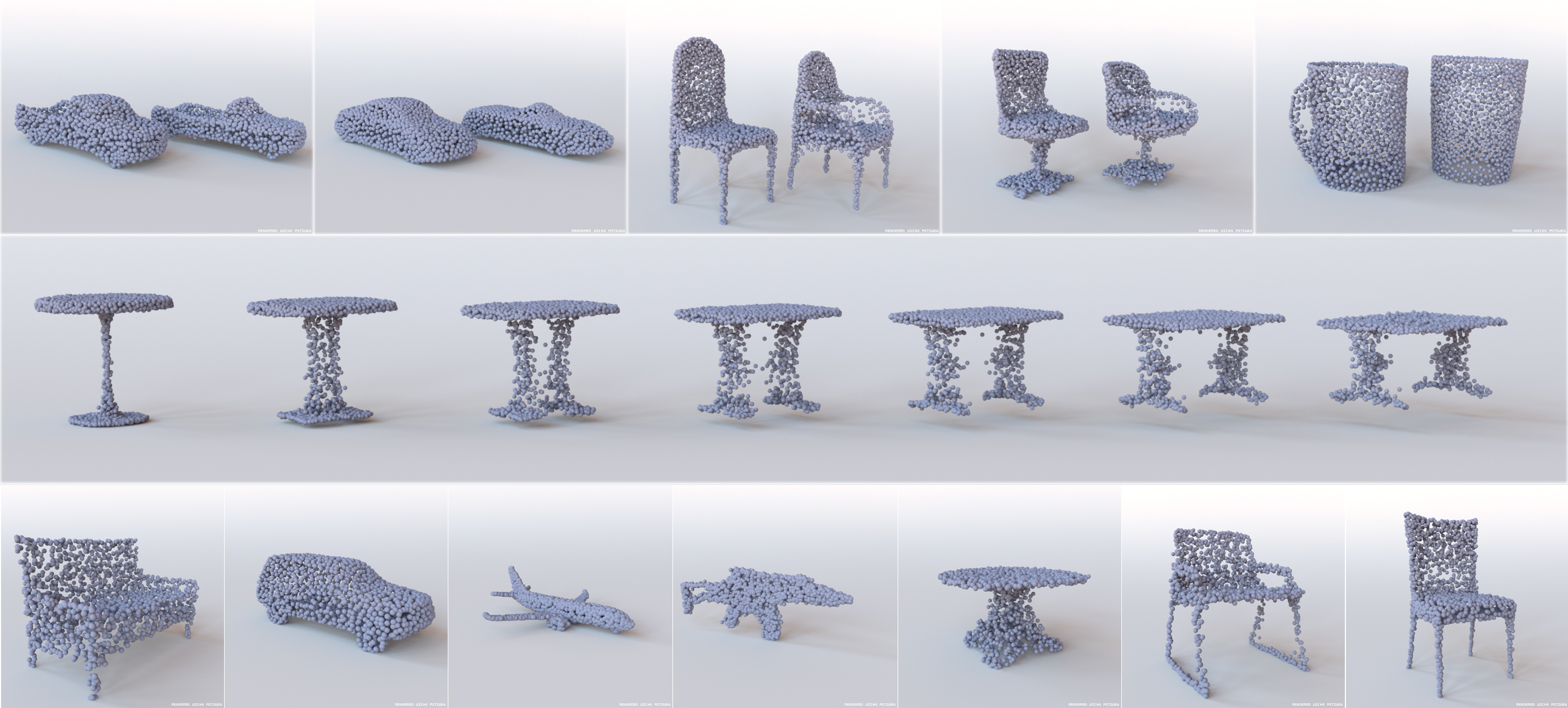

This work is based on our arXiv technical report. We proposed a novel deep network architecture for auto-encoding point clouds. The learned representations were amenable to xxx.
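The paragraph above does not spell out the architecture. The block below is only a minimal NumPy sketch of the general idea, assuming a PointNet-style encoder: the same small network is applied to every point, and a max over the point axis collapses the cloud into one fixed-size latent code. The helper names (`shared_mlp`, `encode`, `init_layers`), the layer widths and the random, untrained weights are illustrative assumptions, not the released TensorFlow model.

```python
import numpy as np

# Hypothetical helpers illustrating a permutation-invariant point-cloud encoder.
# This is NOT the repository's model; weights are random and untrained.

def shared_mlp(points, weights, biases):
    """Apply the same dense + ReLU layers to every point independently.

    points: (n_points, 3) array. Equivalent to 1-D convolutions with kernel size 1.
    """
    h = points
    for w, b in zip(weights, biases):
        h = np.maximum(np.dot(h, w) + b, 0.0)  # ReLU
    return h

def encode(points, weights, biases):
    """Map a point cloud to a fixed-size latent code via max-pooling over points."""
    per_point = shared_mlp(points, weights, biases)  # (n_points, latent_dim)
    return per_point.max(axis=0)                     # (latent_dim,)

def init_layers(sizes, rng):
    """Random weights; the layer widths are illustrative, not the paper's."""
    weights = [rng.standard_normal((m, n)) / np.sqrt(m)
               for m, n in zip(sizes[:-1], sizes[1:])]
    biases = [np.zeros(n) for n in sizes[1:]]
    return weights, biases

rng = np.random.RandomState(0)
weights, biases = init_layers([3, 64, 128], rng)
cloud = rng.standard_normal((2048, 3))
code = encode(cloud, weights, biases)
shuffled = cloud[rng.permutation(len(cloud))]
print(code.shape)  # (128,)
print(np.allclose(code, encode(shuffled, weights, biases)))  # True
```

Because the max is taken over the point axis, reordering the input points leaves the latent code unchanged, which is why this kind of symmetric pooling suits unordered point sets.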

If you find our work useful in your research, please consider citing:

@article{achlioptas2017latent_pc,
  title={Learning Representations And Generative Models For 3D Point Clouds},
  author={Achlioptas, Panos and Diamanti, Olga and Mitliagkas, Ioannis and Guibas, Leonidas J},
  journal={arXiv preprint arXiv:1707.02392},
  year={2017}
}

Requirements:

- Python 2.7+ with NumPy, SciPy and Matplotlib

- TensorFlow (version 1.0+)

- TFLearn

Our code has been tested with Python 2.7, TensorFlow 1.3.0, TFLearn 0.3.2, CUDA 8.0 and cuDNN 6.0 on Ubuntu 14.04.

Download the source code from the git repository:

git clone https://github.com/optas/latent_3d_points

To train your own model, you first need to compile the EMD/Chamfer losses. In latent_3d_points/external/structural_losses we have included the CUDA implementations of Fan et al.
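For intuition about what the compiled losses measure, here is a small CPU reference in NumPy/SciPy. It is a sketch under stated assumptions, not a replacement for the CUDA code: `chamfer` sums nearest-neighbour squared distances in both directions, and `emd` solves the optimal one-to-one matching exactly with `scipy.optimize.linear_sum_assignment`. As we understand it, the Fan et al. EMD kernel uses an approximate matching, and implementations differ in normalization (sum vs. mean), so values will not match the GPU ops exactly. The function names are hypothetical.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

# Hypothetical CPU reference versions of the two losses; not the repo's CUDA ops.

def pairwise_sq_dists(a, b):
    """Squared Euclidean distances between every point of a (n, 3) and b (m, 3)."""
    diff = a[:, None, :] - b[None, :, :]
    return (diff ** 2).sum(axis=-1)  # (n, m)

def chamfer(a, b):
    """Chamfer distance: nearest-neighbour squared distances, summed both ways."""
    d = pairwise_sq_dists(a, b)
    return d.min(axis=1).sum() + d.min(axis=0).sum()

def emd(a, b):
    """Exact Earth Mover's distance for equal-size clouds (optimal bijection)."""
    assert a.shape == b.shape, "this sketch only handles equal-size clouds"
    d = np.sqrt(pairwise_sq_dists(a, b))
    rows, cols = linear_sum_assignment(d)  # minimum-cost one-to-one matching
    return d[rows, cols].sum()

a = np.array([[0., 0., 0.], [1., 0., 0.]])
b = np.array([[0., 0., 0.], [1., 1., 0.]])
print(chamfer(a, b))  # 2.0
print(emd(a, b))      # 1.0
```

The broadcasted distance matrix needs O(nm) memory, so this reference is only practical for small clouds; that cost is one reason to compile the GPU kernels for training.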

cd latent_3d_points/external

With your editor, change the first three lines of the makefile so that they point to your nvcc, cudalib and tensorflow library paths.

make

This project is licensed under the terms of the MIT license (see LICENSE.md for details).