Replies: 7 comments 14 replies

-

|

We have people with 14k+ item libraries experiencing no performance issues. If you run into performance issues please let me know! As for MySQL/other databases, it’s possible. I may expose configuration for it through environment variables in the future for advanced users. |
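
For illustration only, here is a minimal sketch of what "configuration through environment variables" could look like. It is not taken from the Overseerr code base: the variable names (`DB_TYPE`, `DB_HOST`, `DB_PORT`, `DB_USER`, `DB_PASS`, `DB_NAME`, `DB_PATH`), the `resolveDbConfig` helper, and the default SQLite file name are all hypothetical. SQLite stays the default, and an external server is used only when explicitly requested.

```typescript
// Hypothetical sketch: every variable name and default below is an assumption,
// not Overseerr's actual configuration.
type Env = Record<string, string | undefined>;

type DbConfig =
  | { type: "sqlite"; database: string }
  | {
      type: "mysql" | "postgres";
      host: string;
      port: number;
      username: string;
      password: string;
      database: string;
    };

// Well-known default ports for each server.
const DEFAULT_PORTS = { mysql: 3306, postgres: 5432 } as const;

function resolveDbConfig(env: Env): DbConfig {
  const type = (env.DB_TYPE ?? "sqlite").toLowerCase();

  // Default: a local SQLite file, which is fine for most single-host installs.
  if (type === "sqlite") {
    return { type: "sqlite", database: env.DB_PATH ?? "db.sqlite3" };
  }

  // Opt-in: an external database server for swarm/cluster/NFS setups.
  if (type === "mysql" || type === "postgres") {
    const port = env.DB_PORT ? Number(env.DB_PORT) : DEFAULT_PORTS[type];
    if (!Number.isInteger(port) || port <= 0) {
      throw new Error(`Invalid DB_PORT: ${env.DB_PORT}`);
    }
    return {
      type,
      host: env.DB_HOST ?? "localhost",
      port,
      username: env.DB_USER ?? "",
      password: env.DB_PASS ?? "",
      database: env.DB_NAME ?? "overseerr",
    };
  }

  throw new Error(`Unsupported DB_TYPE: ${type}`);
}
```

In a Node application this would typically be called once at startup as `resolveDbConfig(process.env)`, with the result handed to whatever database layer the app uses.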

-

|

Well the ARRs all say the same thing ;) but then they also have issues lol but are too far down the road to fix them. |

-

|

This is a discussion, no? You tell me what the performance should be, you're making the app lol. Right now I have it on a 32c 128gb box but I assume that's not typical. Also 14k items seems kinda low. I just imported > 12k movies, and 4k TV series. (There are also 4K versions, but you get the idea.) Performance issues don't usually appear on import; they tend to appear over time. The ARRs have DB issues and timeouts with bots trying to add to them. They might not be using good indexes. But it's good to hear you can support MySQL; it will help those who want to run a dedicated DB server, especially if they have large content/user sizes. |

-

|

I'd like to see a MySQL configuration option exposed for multiple reasons, the main one being that those of us who use Overseerr in a Docker swarm with NFS mounts can run into problems with database locking. And using ?nolock=1 doesn't entirely fix the problem. Radarr has, in its nightly versions, support for an external DBMS (PostgreSQL), and while I'm not a big fan of PostgreSQL, either MySQL or PostgreSQL is better than SQLite.

As I've said in the Plex forums, if the DB is handled by an abstraction layer, using any RDBMS should be no problem. The problem arises when applications (like Plex, Emby, Jellyfin) tie their products so tightly to SQLite that refactoring becomes an issue. But looking at how Olaris, for instance, is handling database functions, using an abstraction layer that isn't tied to any specific DB is the way to go. The default can be SQLite, as for a lot of people this is fine. But for those of us who run our services in a swarm, in a cluster, etc., and use NFS, this makes things just work (tm).

My setup, for instance, runs Overseerr in a 6-member swarm (3/ESXi, 3/Proxmox). My servers have no local storage, outside of the SSD that ESXi/Proxmox boots from, and in two cases, a datastore just for TrueNAS (which in turn has the HBA passed through to the VM for complete control). The datastores are all iSCSI for the VMs, and NFS for media/etc. For instance, all my Docker VMs have NFS mounts for /NAS/Config, /NAS/Media, and /NAS/Shared. All Docker config/data directories are reachable via /NAS/Config/, so my containers, regardless of where they are running from, all have the exact same config. So it doesn't matter if 'pildocker02' or 'pildocker05' is down; the container can run on 'pildocker04' using the same mapping.

I do have a few things that are outside of the swarm, because of SQLite and the issues with NFS. Plex, for one (I don't run Plex as a container anyway): because of file-locking issues, having Plex's data directory on an NFS mount caused too many problems, so I'm forced to use custom software to keep, say, Plex's DB in sync across multiple nodes. Needless to say, it's not just the size of one's database; it's more the use of NFS and the problems associated with it. |

-

|

FWIW, radarr and prowlarr now support postgres, and sonarr is considering it after v4 comes out, so the tides are shifting. I'd be happy to make a donation if it helps get overseerr to support postgres as well. |

-

|

@sct I'd like to re-phrase @evanrich 's statement above from my own view: would you be willing to scope Postgres support to fully flesh out how much work it'd take, and could you guess at how much it would cost to complete the scoping portion (either in $ to you, or in time from you if you'd prefer)? If you know it's trivial to scope just based on the code base, that's great, but if it takes you anything more than, say, an hour, please don't hesitate to associate a $ value with it. Then, should it eventually get scoped out, could that scope also include a potential cost + way to fund it?

After reading some over the last few months, I'm trying to take a new tack with the open source projects I use, in how I think about their overall support as well as maintenance support. While I pretty much always try to throw an anonymous chip in the tip jar for any project I value, I understand even that's almost an anomaly, and it shouldn't be expected to be the sole source of funding for all enhancements/feature requests as well as regular maintenance... Basically where I'm coming from is 'if a feature is so important to folks (including myself of course!), why not ask if we've the possibility to straight up pay for it?' (with the understanding that the response may simply be 'no', due to any number of reasons, time commitments, whatever).

For the record, my preference would be Postgres as I'm far more familiar with it... But I don't want that to be a barrier to entry lol |

-

|

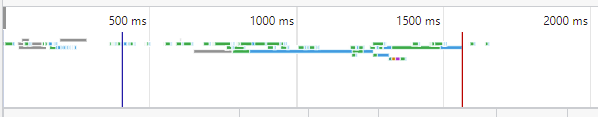

FYI, the docker image on Synology complains about these 2 potential performance issues:

|

-

How is performance going to be addressed so that it isn't sluggish like Ombi? Can the DB be changed out for MySQL, etc.?
