Henry Hengyuan Zhao · Pan Zhou · Difei Gao · Zechen Bai · Mike Zheng Shou

Show Lab, National University of Singapore | Singapore Management University

Question answering, asking, and assessment are three innate human traits crucial for understanding the world and acquiring knowledge. By enhancing these capabilities, humans can more effectively utilize data, leading to better comprehension and learning outcomes. However, current Multimodal Large Language Models (MLLMs) primarily focus on question answering, often neglecting the full potential of questioning and assessment skills. In this study, we introduce LOVA3, an innovative framework named "Learning tO Visual Question Answering, Asking and Assessment," designed to equip MLLMs with these additional capabilities.

- [10/16/2024] 🔥 We release the webpage.

- [09/26/2024] 🔥 LOVA3 is accepted by NeurIPS 2024.

- [07/01/2024] 🔥 Related work Genixer is accepted by ECCV 2024.

- [05/24/2024] 🔥 We release the code of LOVA3, the EvalQABench, the training dataset Mixed_VQA_GenQA_EvalQA_1.5M.jsonl, and the checkpoint LOVA3-llava-v1.5-7b.

- [05/23/2024] 🔥 We release the LOVA3 paper.

-

Using Gemini-1.5-Flash to creating EvalQA training data with larger size and higher quality.

-

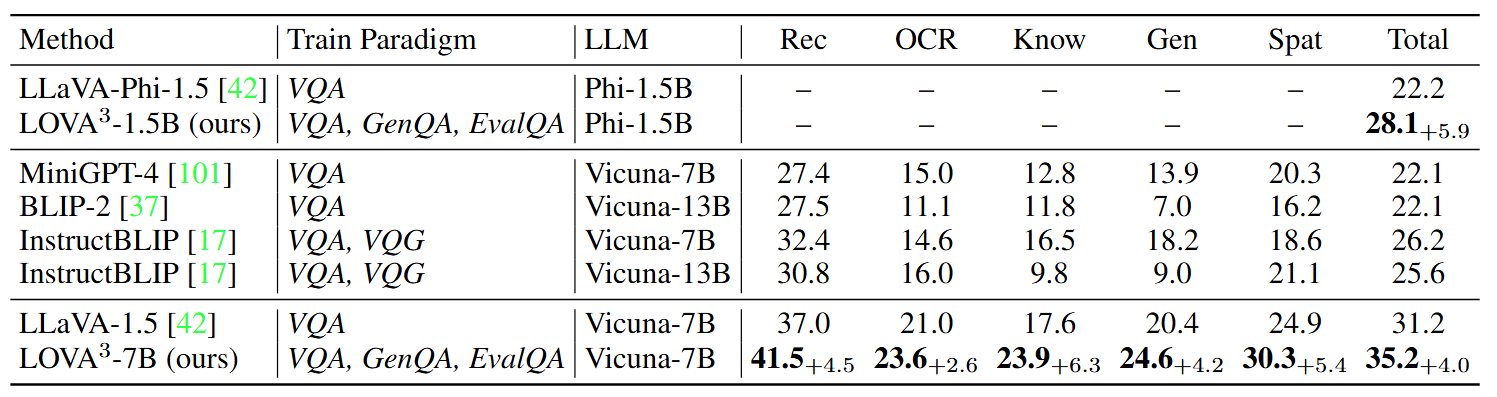

Applying LOVA3 to samller language model Phi-1.5.

-

LOVA3 - To the best of our knowledge, LOVA3 is the first effort to imbue the asking and assessment abilities in training a robust and intelligent MLLM, inspired from human learning mechanism.

-

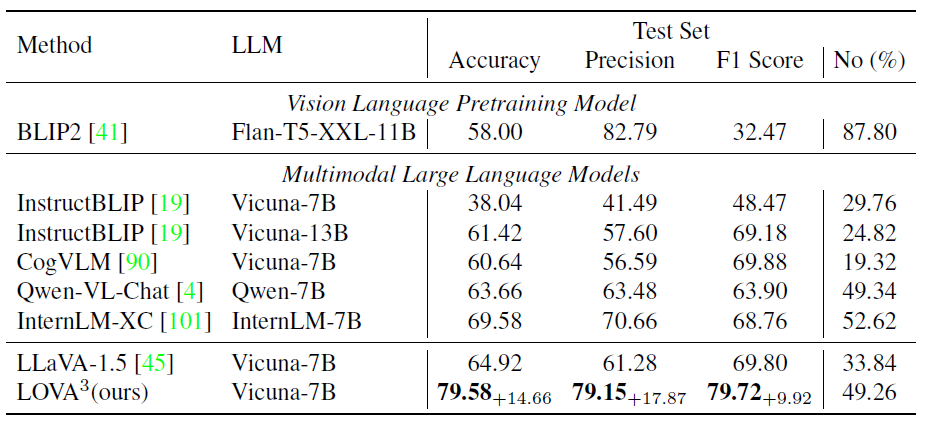

EvalQABench - We build a new benchmark EvalQABench for the VQA correction evaluation as the first effort to advance the development of future research.

-

Performance Improvement - Training with our proposed LOVA3 framework, we observe consistent improvement on 10 representative benchmarks.

Usage and License Notices: The data and code are intended and licensed for research use only. They are also restricted to uses that follow the license agreements of LLaMA and Vicuna. The dataset is CC BY NC 4.0 (allowing only non-commercial use), and models trained using the dataset should not be used outside of research purposes.

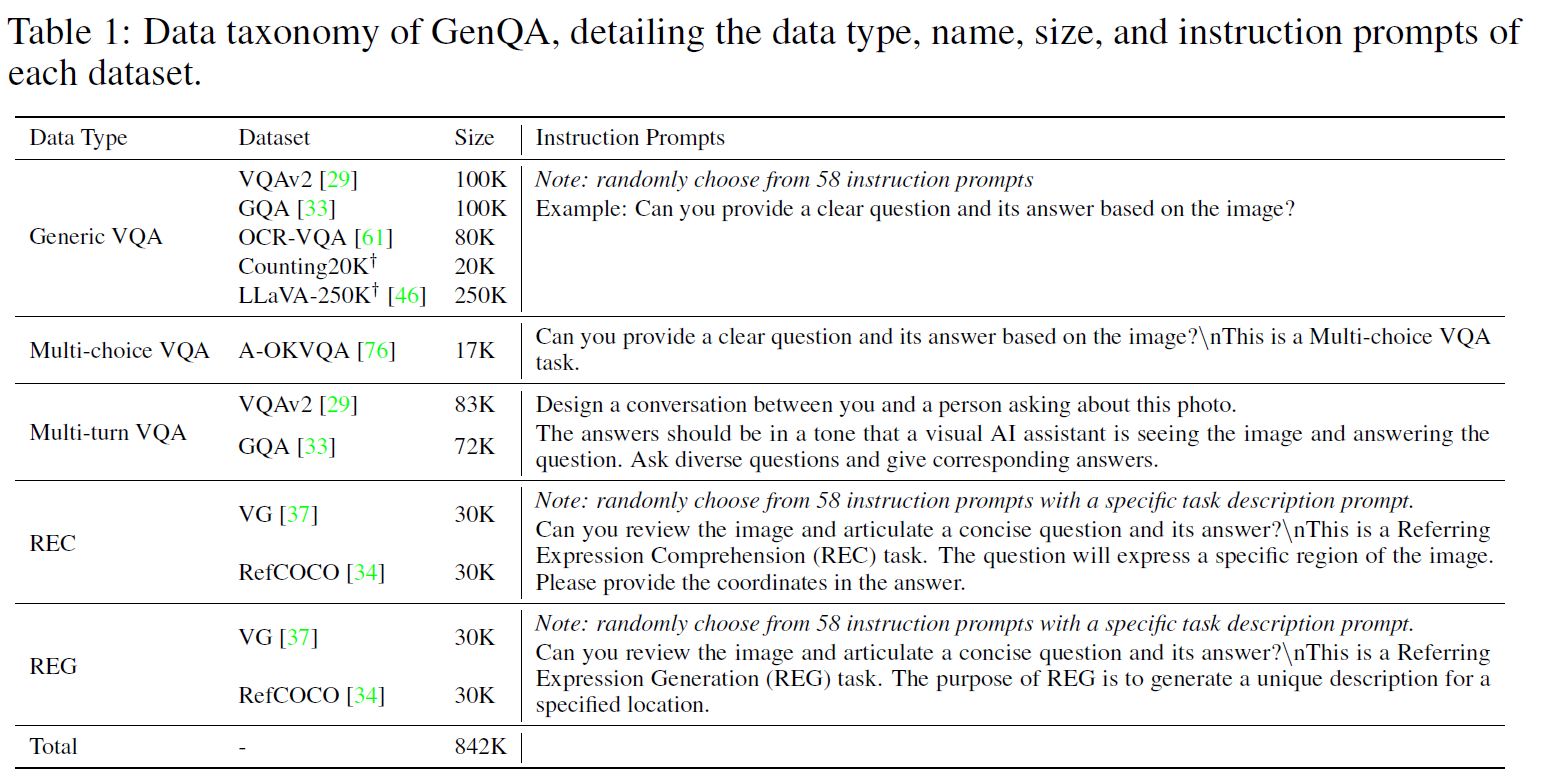

If an MLLM can generate high-quality question-answer pairs from visual input, it indicates a stronger problem-solving ability. To enable the MLLM to ask questions, we carefully define five main multimodal data types, as listed in the following table.

Illustration of the proposed pipeline for generating negative answers and feedback.

```bash
conda create -n LOVA python=3.10
conda activate LOVA
pip install --upgrade pip
pip install -e .
```

Pretrained weight: LOVA3-llava-v1.5-7b

Download it using the following command:

```bash
git clone https://huggingface.co/hhenryz/LOVA3-llava-v1.5-7b
```

- Here we provide the training/evaluation/testing sets of EvalQABench under the folder `EvalQABench`.

- Training data: Mixed_VQA_GenQA_EvalQA_1.5M.jsonl.

Please download the images from the constituent datasets:

- COCO: train2014

- GQA: images

- OCR-VQA: download script; we save all files as `.jpg`

- AOKVQA: download script

- TextVQA: train_val_images

- VisualGenome: part1, part2

- LLaVA-Instruct: huggingface
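Before wiring up the image folders, it can help to take a quick look at the training file itself. The following is a minimal sketch, assuming only that `Mixed_VQA_GenQA_EvalQA_1.5M.jsonl` is a JSON Lines file (one JSON object per line), as its extension suggests. The record fields are not documented in this README, so the hypothetical helper `summarize_jsonl` just reports which top-level keys occur and how often.

```python
import json
from collections import Counter
from pathlib import Path


def summarize_jsonl(path, limit=None):
    """Count records and top-level keys in a JSON Lines file.

    `limit` caps how many records are read, which is handy for a
    quick look at a large file. Hypothetical helper, not part of LOVA3.
    """
    key_counts = Counter()
    n_records = 0
    with Path(path).open("r", encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line:
                continue  # tolerate blank lines
            if limit is not None and n_records >= limit:
                break
            record = json.loads(line)
            n_records += 1
            if isinstance(record, dict):
                key_counts.update(record.keys())
    return n_records, dict(key_counts)


# Example (path assumes the file was placed under the folder `data`):
# n, keys = summarize_jsonl("data/Mixed_VQA_GenQA_EvalQA_1.5M.jsonl", limit=1000)
# print(n, keys)
```

Reading only the first records keeps the check fast; whichever key turns out to hold the image path can then be compared against the downloaded image folders.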

- Download LOVA3-llava-v1.5-7b under the folder `checkpoints`.

- Download the CLIP vision encoder clip-vit-large-patch14-336 under the folder `checkpoints`.

- Run the evaluation scripts under the folder `scripts/v1_5/eval`. There are 12 multimodal datasets and benchmarks awaiting evaluation.

Taking VizWiz as an example, the command is as follows:

```bash
modelname=LOVA3-llava-v1.5-7b

python -m llava.eval.model_vqa_loader \
    --model-path checkpoints/$modelname \
    --question-file ./playground/data/eval/vizwiz/llava_test.jsonl \
    --image-folder /yourpath/vizwiz/test/ \
    --answers-file ./playground/data/eval/vizwiz/answers/$modelname.jsonl \
    --temperature 0 \
    --conv-mode vicuna_v1

python scripts/convert_vizwiz_for_submission.py \
    --annotation-file ./playground/data/eval/vizwiz/llava_test.jsonl \
    --result-file ./playground/data/eval/vizwiz/answers/$modelname.jsonl \
    --result-upload-file ./playground/data/eval/vizwiz/answers_upload/$modelname.json
```
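When evaluating several checkpoints, the VizWiz inference command can be assembled programmatically. The sketch below is only an illustration: it reuses the module name, flags, and paths from the command above, while the helper names `vizwiz_eval_command` and `run_vizwiz_eval` are hypothetical and not part of the LOVA3 code base. It defaults to a dry run that prints the commands without executing them.

```python
import subprocess


def vizwiz_eval_command(modelname, image_folder):
    """Build the argv list for the VizWiz inference step.

    Flags and paths mirror the shell command in this README.
    """
    base = "./playground/data/eval/vizwiz"
    return [
        "python", "-m", "llava.eval.model_vqa_loader",
        "--model-path", f"checkpoints/{modelname}",
        "--question-file", f"{base}/llava_test.jsonl",
        "--image-folder", image_folder,
        "--answers-file", f"{base}/answers/{modelname}.jsonl",
        "--temperature", "0",
        "--conv-mode", "vicuna_v1",
    ]


def run_vizwiz_eval(modelnames, image_folder, dry_run=True):
    """Print (and, if dry_run is False, run) the command per checkpoint."""
    commands = [vizwiz_eval_command(name, image_folder) for name in modelnames]
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError if the evaluation fails
            subprocess.run(cmd, check=True)
    return commands
```

Calling `run_vizwiz_eval(["LOVA3-llava-v1.5-7b"], "/yourpath/vizwiz/test/")` prints the same inference command as above; the conversion step for submission would still be run separately.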

- Download the pretrained MLP adapter weights llava-v1.5-mlp2x-336px-pretrain-vicuna-7b-v1.5 and put them under the folder `checkpoints`.

- Download the model weight clip-vit-large-patch14-336 under the folder `checkpoints`.

- Download the model weight vicuna-7b-v1.5 under the folder `checkpoints`.

- Download the training data Mixed_VQA_GenQA_EvalQA_1.5M.jsonl under the folder `data`.

- Run the training script.

```bash
bash scripts/v1_5/finetune.sh
```
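Since training depends on several downloads, a quick pre-flight check can catch a missing file before a long job starts. This is a minimal sketch under two assumptions: each download keeps its original name inside `checkpoints` or `data`, and the script is run from the repository root. Whether `finetune.sh` reads exactly these locations is not confirmed here, and `missing_training_inputs` is a hypothetical helper.

```python
from pathlib import Path

# Paths taken from the training steps in this README, relative to the
# repository root. Assumed layout; adjust if your copies are named differently.
REQUIRED_PATHS = [
    "checkpoints/llava-v1.5-mlp2x-336px-pretrain-vicuna-7b-v1.5",
    "checkpoints/clip-vit-large-patch14-336",
    "checkpoints/vicuna-7b-v1.5",
    "data/Mixed_VQA_GenQA_EvalQA_1.5M.jsonl",
]


def missing_training_inputs(repo_root="."):
    """Return the required paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [p for p in REQUIRED_PATHS if not (root / p).exists()]


# Example:
# missing = missing_training_inputs(".")
# if missing:
#     raise SystemExit("Missing before training: " + ", ".join(missing))
```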

If you find LOVA3 useful, please cite using this BibTeX:

```bibtex
@misc{zhao2024lova3learningvisualquestion,
      title={LOVA3: Learning to Visual Question Answering, Asking and Assessment},
      author={Henry Hengyuan Zhao and Pan Zhou and Difei Gao and Zechen Bai and Mike Zheng Shou},
      year={2024},
      eprint={2405.14974},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2405.14974},
}
```