Ignore objects in loss calculation #964

Comments

@r3krut did you find a working way to do this? So far I have dealt with it by not calculating the loss at the positions where I know the ignored objects are, but I am not sure this solution is correct.
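A minimal sketch of the approach described in this comment, assuming a CenterNet-style penalty-reduced focal loss on the heatmap. The names `masked_focal_loss` and `ignore_mask` are hypothetical and do not come from the repository. It is written in NumPy to stay self-contained, and the same element-wise operations are available on PyTorch tensors.

```python
import numpy as np

def masked_focal_loss(pred, gt, ignore_mask, alpha=2.0, beta=4.0, eps=1e-6):
    """Penalty-reduced focal loss that skips pixels flagged in ignore_mask.

    pred, gt:    float arrays of shape (C, H, W); pred holds sigmoid outputs.
    ignore_mask: array of shape (H, W) or (C, H, W); 1 = ignore, 0 = train.
    """
    pred = np.clip(pred, eps, 1.0 - eps)
    # keep is 1 where the loss should be computed and 0 where it is ignored
    keep = 1.0 - np.broadcast_to(ignore_mask, gt.shape).astype(pred.dtype)

    pos = (gt == 1.0).astype(pred.dtype) * keep   # kept object centers
    neg = (gt < 1.0).astype(pred.dtype) * keep    # kept background pixels

    pos_loss = np.log(pred) * (1.0 - pred) ** alpha * pos
    neg_loss = np.log(1.0 - pred) * pred ** alpha * (1.0 - gt) ** beta * neg

    # Normalise by the number of kept positives (at least 1 to avoid dividing by zero)
    num_pos = max(pos.sum(), 1.0)
    return -(pos_loss.sum() + neg_loss.sum()) / num_pos
```

With this form, a pixel inside an ignored region contributes neither as a positive nor as a negative, so whatever the network predicts there has no effect on the loss value.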

@janaoravcova I found a way to ignore this type of bbox that is similar to what you described. I checked the approach on a single-class detection problem; it may not work for multi-class detection. In the loss calculation:

I think you should generate a mask that ignores the image as well as the label. Ignoring only the label will influence network performance.
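One possible reading of this suggestion is to blank the ignored regions in the input image and mark the same regions in a label-resolution mask. The sketch below assumes boxes given as `(x1, y1, x2, y2)` in image pixels and an input-to-heatmap stride of 4. The function name, box format, and fill value are illustrative assumptions, not part of the project.

```python
import numpy as np

def mask_image_and_label(image, ignore_boxes, stride=4, fill_value=0):
    """Blank ignored boxes in the image and build a matching heatmap-resolution mask.

    image:        array of shape (H, W, 3).
    ignore_boxes: iterable of (x1, y1, x2, y2) in image pixels (assumed format).
    stride:       ratio between input and heatmap resolution (assumed to be 4).
    """
    h, w = image.shape[:2]
    image = image.copy()  # leave the caller's array untouched
    label_mask = np.zeros((h // stride, w // stride), dtype=np.uint8)
    for x1, y1, x2, y2 in ignore_boxes:
        # Clip the box to the image bounds
        x1, y1 = max(int(x1), 0), max(int(y1), 0)
        x2, y2 = min(int(x2), w), min(int(y2), h)
        if x2 <= x1 or y2 <= y1:
            continue
        image[y1:y2, x1:x2] = fill_value
        # Round outwards so every heatmap cell touching the box is ignored
        label_mask[y1 // stride:-(-y2 // stride), x1 // stride:-(-x2 // stride)] = 1
    return image, label_mask
```

The returned `label_mask` can then be used to exclude those cells from the heatmap loss, while the network no longer sees the ignored objects in the input.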

Hi! Thanks for your outstanding work!

I have a question about the loss calculation.

Suppose my dataset contains some target objects that should be ignored during training.

Is there a right way to do this in the Gaussian-based heatmap loss calculation?

Should I generate a binary training mask that covers the whole Gaussian of an "ignore" object (positive + negative pixels), or only its center (positive) pixel?

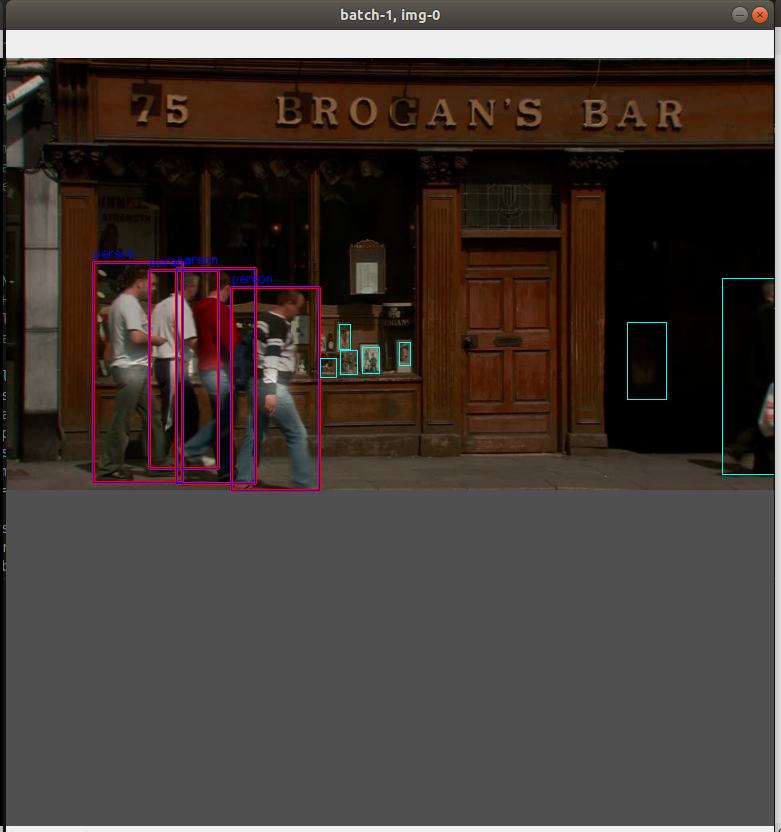

This is the original image with the targets visualized (red: targets, cyan: objects to ignore).

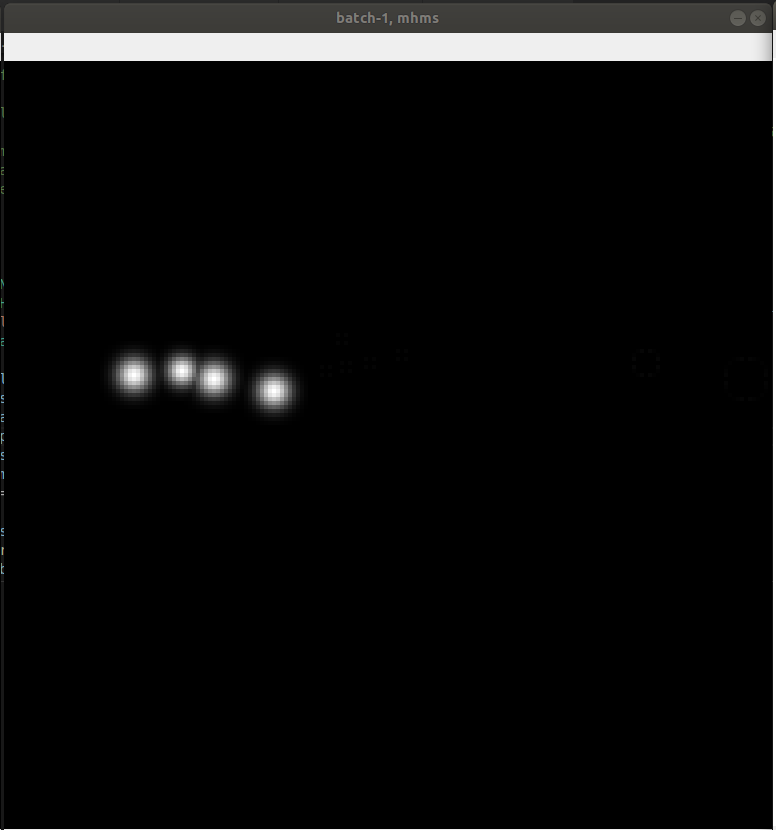

These are the source heatmaps for all objects.

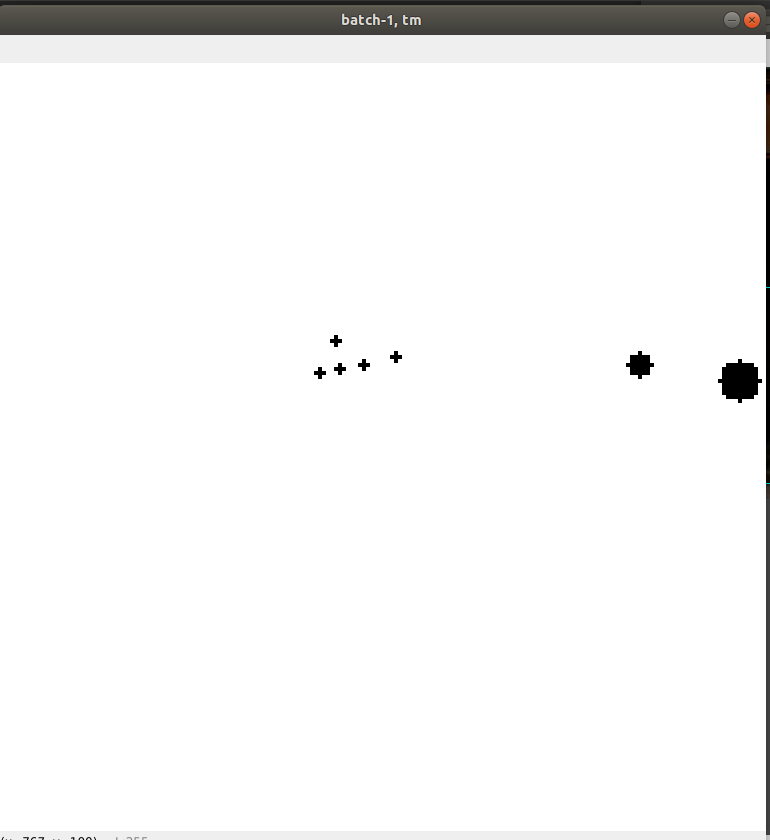

This is the mask for the ignored objects (drawn as circles with the same radius as in heatmap generation).

These are the masked source heatmaps.
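For reference, the mask and masked heatmap described above could be produced roughly as follows. This is only a sketch under assumptions: `draw_ignore_disk` is a hypothetical helper, and the heatmap size, center, and radius are made-up example values.

```python
import numpy as np

def draw_ignore_disk(mask, center, radius):
    """Set mask to 1 inside a disk of the given radius around center=(x, y)."""
    h, w = mask.shape
    cx, cy = center
    ys, xs = np.ogrid[:h, :w]  # column and row index grids that broadcast to (h, w)
    mask[(xs - cx) ** 2 + (ys - cy) ** 2 <= radius ** 2] = 1
    return mask

# Hypothetical example: a 64x64 heatmap with one ignored object at (x=20, y=30), radius 5
heatmap = np.random.rand(64, 64).astype(np.float32)
ignore_mask = draw_ignore_disk(np.zeros((64, 64), dtype=np.uint8), (20, 30), 5)

# Zero out the heatmap wherever the ignore mask is set
masked_heatmap = heatmap * (1 - ignore_mask)
```

Note that zeroing the target heatmap only changes the targets. To actually exclude those pixels from training, the same `ignore_mask` also has to be applied inside the loss.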

My question is: is there a proper way to generate a training mask for the ignored objects?