This is the official PyTorch implementation of the paper "V-FloodNet: A Video Segmentation System for Urban Flood Detection and Quantification". V-FloodNet is a robust, automatic system for estimating water level or inundation depth from images and videos. It consists of reliable water and reference-object detection/segmentation models and depth estimation models.

This paper was published in Environmental Modelling & Software, Elsevier (2023).

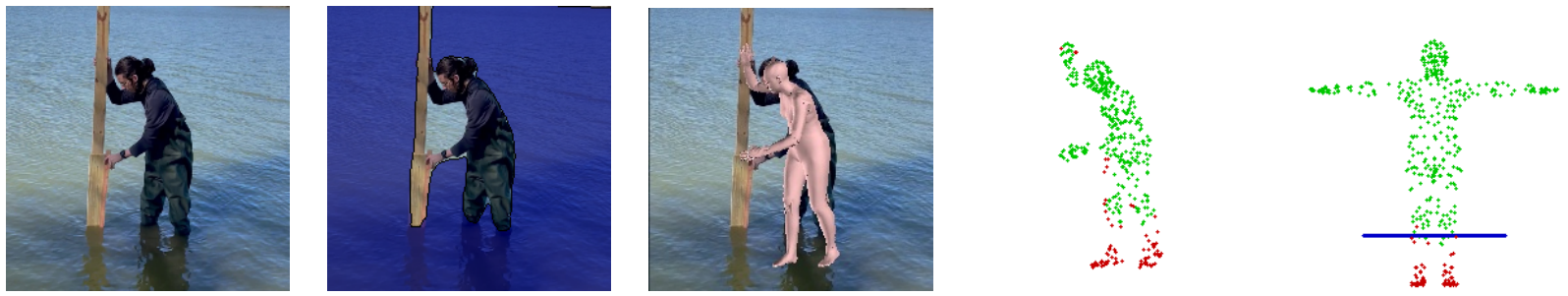

Here are some screenshots of our results. We can estimate water depth from the detected objects in the scene.

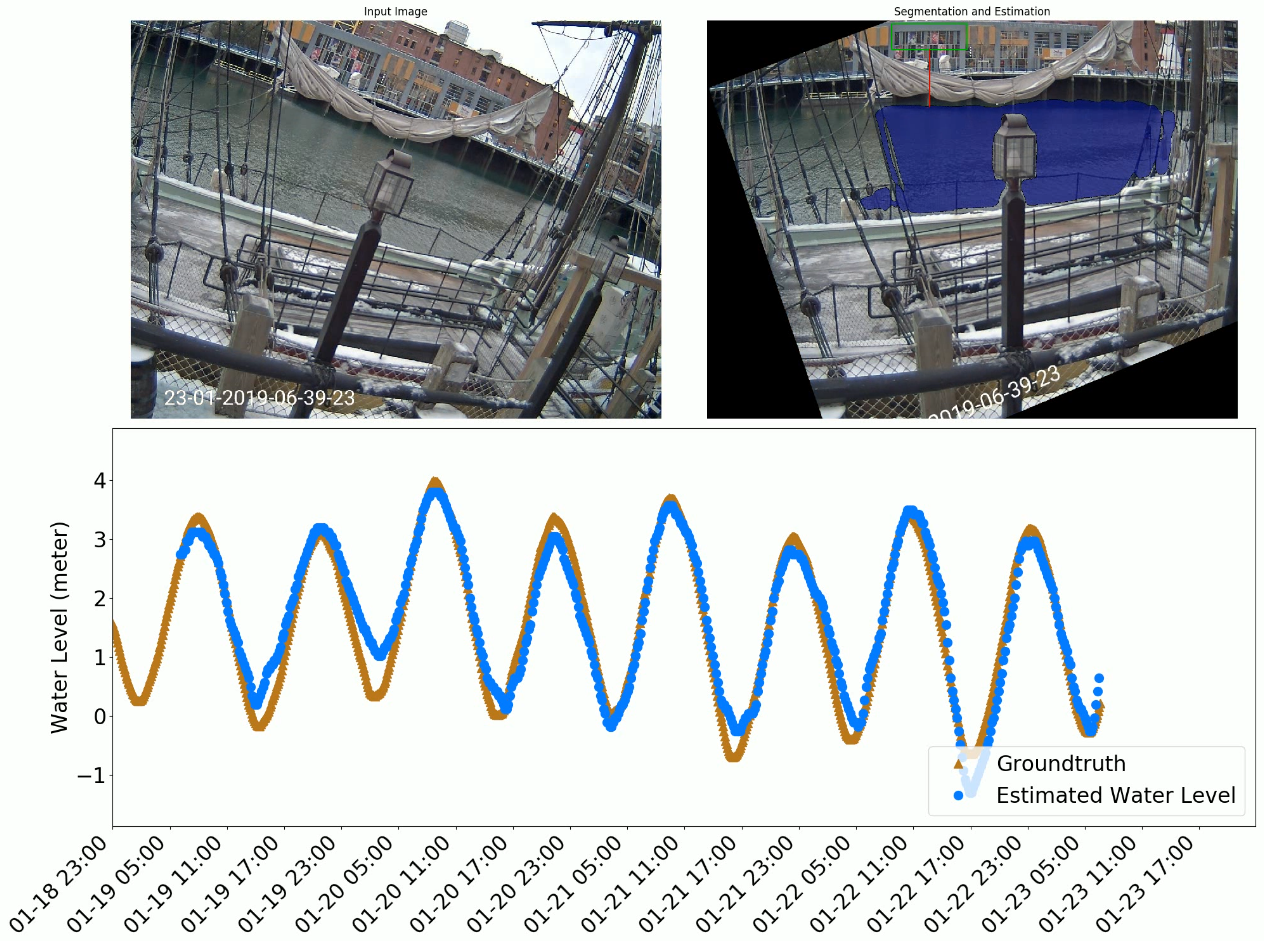

We can also estimate water levels from long videos under various weather and illumination conditions.

We provide our dataset and results on Google Drive.

More demo videos are available in the animation_videos folder.

We developed and tested the source code on Ubuntu 18.04 with the PyTorch framework. An NVIDIA GPU with its driver installed is required.

For Windows users, we recommend running our code in the WSL2 subsystem. Because part of our code opens GUI windows, you need to install an X server on Windows. Please refer to this post for instructions.

Update for WSL2 users:

- We found that the submodule MeshTransformer contains multiple softlinks (symbolic links). When using WSL2, make sure you clone the repository onto an NTFS disk; otherwise, the softlinks do not work.

- The end-of-line sequence for bash files should be LF instead of CRLF. You can change it through git config or in Visual Studio Code.
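If you prefer not to change editor or git settings, line endings can also be fixed with a short script. The following is a minimal sketch that is not part of this repository; the function name `convert_crlf_to_lf` is hypothetical. It rewrites CRLF as LF in every `.sh` file under a directory, working on raw bytes so the text encoding does not matter.

```python
from pathlib import Path
from typing import List


def convert_crlf_to_lf(root: str, pattern: str = "*.sh") -> List[Path]:
    """Rewrite CRLF line endings as LF in files matching `pattern` under `root`.

    Returns the files that were modified; files already using LF are untouched.
    """
    changed = []
    for path in sorted(Path(root).rglob(pattern)):
        if not path.is_file():
            continue
        data = path.read_bytes()
        if b"\r\n" in data:
            # Operate on raw bytes so the file's text encoding does not matter.
            path.write_bytes(data.replace(b"\r\n", b"\n"))
            changed.append(path)
    return changed
```

For example, calling `convert_crlf_to_lf(".")` from the V-FloodNet root folder converts all bash scripts, including those in submodules, before you run them.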

The following packages are required to run the code. First, clone this repository and initialize its submodules:

git clone https://github.com/xmlyqing00/V-FloodNet.git

cd V-FloodNet

git submodule update --init --recursive

Second, a Python virtual environment is recommended. PyTorch 1.8.2 LTS requires Python <= 3.8. We also provide installation instructions if you want to use a newer version of PyTorch.

conda create -n vflood python=3.8

conda activate vflood

In the virtual environment, install the following required packages by following their official instructions.

- torch and torchvision from PyTorch. Our code uses v1.8.2+cu111 or v2.2.0+cu121.

- PyTorch Scatter for scatter operations. We used the build for torch 1.8.1+cu111, or the default (unpinned) version with PyTorch 2.2.0.

- Detectron2 for reference-object segmentation. We use version 0.6 here.

- MeshTransformer for human detection and 3D mesh alignment.
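After following the installation commands, you can confirm which versions of these packages were actually installed. The sketch below uses the standard-library `importlib.metadata` module; the distribution names in `REQUIRED`, in particular `torch_scatter`, are assumptions about how the packages register with pip, and MeshTransformer is not checked.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional

# Distribution names for the packages listed above. The exact names
# (e.g. "torch_scatter") are assumptions; adjust them if pip reports otherwise.
REQUIRED = ("torch", "torchvision", "torch_scatter", "detectron2")


def installed_versions(names: Iterable[str] = REQUIRED) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if missing."""
    versions: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name:15s} {version or 'NOT INSTALLED'}")
```

Run inside the `vflood` environment, it should report versions matching the ones above, for example 1.8.2+cu111 for torch.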

Installation commands for CUDA 11.1 (NVIDIA GPUs older than the 40X0 series, such as the 3090)

# Install essential libraries

sudo apt install build-essential libosmesa6-dev libgl1-mesa-dev libglu1-mesa-dev freeglut3-dev

# Install PyTorch

pip install torch==1.8.2+cu111 torchvision==0.9.2+cu111 torchaudio==0.8.2 -f https://download.pytorch.org/whl/lts/1.8/torch_lts.html

pip install torch-scatter==2.0.8 -f https://data.pyg.org/whl/torch-1.8.1+cu111.html -v

# Install Detectron2

pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu111/torch1.8/index.html

# Install MeshTransformer, make sure you are in the folder of this repository.

cd MeshTransformer

python setup.py build develop

pip install ./manopth/.

cd ..

# Install other python packages

pip install -r requirements.txt

Installation commands for CUDA 12.1 (NVIDIA GPU 40X0)

Our code depends on several PyTorch packages that are not updated frequently, and modern GPUs such as the 4090 cannot run the old PyTorch version. We therefore need a few workarounds to install the dependencies. I tested the following environment on an NVIDIA 4090 with CUDA 12.1. nvcc is not required.

First, install the GitHub CLI tool gh, which can check out the version of a repository from a pull request:

sudo apt install gh

gh auth login

Second, use the following commands to install the libraries:

# Install essential libraries

sudo apt install build-essential libosmesa6-dev libgl1-mesa-dev libglu1-mesa-dev freeglut3-dev

# Install nightly PyTorch 2.2.0, which supports CUDA 12.1

pip install --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/cu121

pip install torch-scatter

# Install Detectron2. We have to compile it manually instead of using a prebuilt wheel.

cd ..

git clone git@github.com:facebookresearch/detectron2.git

cd detectron2/

gh pr checkout 4868

pip install -e .

cd ../V-FloodNet/

# Install MeshTransformer, make sure you are in the folder of this repository.

cd MeshTransformer

# Manually create the __init__.py files so that these folders are included as packages.

touch metro/modeling/data/__init__.py

touch metro/modeling/hrnet/__init__.py

python setup.py build develop

pip install ./manopth/.

cd ..

# Install other python packages

pip install -r requirements.txt

Links to pretrained models and dataset.

First, run the following script to download the pretrained MeshTransformer models (this may take about 15 minutes):

sh scripts/download_MeshTransformer_models.sh

Second, due to licensing restrictions, some body model files must be downloaded manually.

- Download the SMPL model SMPLIFY_CODE_V2.ZIP from the official SMPLify website. When the download starts, the file is shown as mpips_smplify_public_v2.zip.

- Extract it and copy the model file basicModel_neutral_lbs_10_207_0_v1.0.0.pkl from smplify_public/code/models/ to V-FloodNet/MeshTransformer/metro/modeling/data/.

Third, download the archives from Google Drive.

- Extract the pretrained water segmentation models, records.zip, and put them in the folder V-FloodNet/records/.

- Extract the water dataset, WaterDataset, which includes the training images and testing videos, to any path. You can put it at V-FloodNet/WaterDataset/.

The final file structure looks like this:

- V-FloodNet/
  - MeshTransformer/
    - metro/
      - modeling/
        - data/
          - basicModel_neutral_lbs_10_207_0_v1.0.0.pkl
  - records/
    - groundtruth/
    - link_efficientb4_model.pth
    - video_seg_checkpoint_20200212-001734.pth
    - ...
  - WaterDataset/
    - train_images/
    - test_videos/
  - ...
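A minimal sketch for checking that the required files are in place, assuming it is run from the V-FloodNet/ root. The paths are copied from the structure above; the helper `missing_files` is hypothetical, and WaterDataset/ is left out because the dataset may be stored elsewhere.

```python
from pathlib import Path
from typing import List, Sequence

# Required files from the structure above, relative to the V-FloodNet/ root.
# WaterDataset/ is omitted because the dataset may live at any path.
EXPECTED = (
    "MeshTransformer/metro/modeling/data/basicModel_neutral_lbs_10_207_0_v1.0.0.pkl",
    "records/groundtruth",
    "records/link_efficientb4_model.pth",
    "records/video_seg_checkpoint_20200212-001734.pth",
)


def missing_files(repo_root: str = ".", expected: Sequence[str] = EXPECTED) -> List[str]:
    """Return the expected paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_files()
    if missing:
        print("Missing:\n  " + "\n  ".join(missing))
    else:
        print("All expected files found.")
```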

Put the testing images in a folder, then run

python test_image_seg.py --test-path=/path/to/image/folder --test-name=<anyname>

The default output folder is output/segs/.

Example

python test_image_seg.py --test-path=assets/img_exp/ --test-name img_exp

If your input is a video, we provide a script, scripts/cvt_video_to_imgs.py, to extract its frames.
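If you want to extract frames yourself, for example to keep only every n-th frame of a long video, the following OpenCV sketch is one option. It is a hypothetical alternative, not the repository's scripts/cvt_video_to_imgs.py, and it assumes opencv-python is installed. Frames are written with zero-padded names such as 00042.jpg so that sorting the filenames reproduces the frame order; this matters if the frames are read in filename order, which is an assumption here.

```python
import os


def frame_name(index: int, digits: int = 5, ext: str = ".jpg") -> str:
    """Zero-padded name so lexicographic order matches temporal order."""
    return f"{index:0{digits}d}{ext}"


def extract_frames(video_path: str, out_dir: str, step: int = 1) -> int:
    """Save every `step`-th frame of `video_path` into `out_dir`; return the count saved."""
    if step < 1:
        raise ValueError("step must be >= 1")
    import cv2  # opencv-python; imported here so frame_name works without it

    os.makedirs(out_dir, exist_ok=True)
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        raise IOError(f"cannot open video: {video_path}")
    index = saved = 0
    while True:
        ok, frame = cap.read()
        if not ok:  # end of stream or read error
            break
        if index % step == 0:
            cv2.imwrite(os.path.join(out_dir, frame_name(saved)), frame)
            saved += 1
        index += 1
    cap.release()
    return saved
```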

Put the extracted frames in a folder, then run

python test_video_seg.py --test-path=/path/to/image/folder --test-name=<anyname>

Example

python test_video_seg.py --test-path=assets/lake_exp/ --test-name lake_exp

More arguments are listed by python test_video_seg.py --help.

Note that the --budget argument controls the maximum number of features that can be stored in GPU memory for the adaptive feature bank. The default value, 3000000, was chosen experimentally for a GPU with 11 GB of memory.
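If your GPU has more or less than 11 GB of memory, one rough starting point is to scale the budget proportionally. This linear rule is a hypothetical heuristic, not something the authors prescribe: the network weights and activations also consume memory, so reduce the value further if you still run out of memory.

```python
DEFAULT_BUDGET = 3_000_000  # default value of --budget
REFERENCE_GPU_GB = 11.0     # GPU memory the default was chosen for


def scaled_budget(gpu_memory_gb: float) -> int:
    """Hypothetical heuristic: scale the --budget default linearly with GPU memory."""
    if gpu_memory_gb <= 0:
        raise ValueError("gpu_memory_gb must be positive")
    return int(DEFAULT_BUDGET * gpu_memory_gb / REFERENCE_GPU_GB)
```

For an 8 GB GPU, `scaled_budget(8)` returns 2181818, which you could pass as --budget=2181818.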

Arguments for water depth estimation

- --test-path indicates the path to the image folder.

- --test-name should be the same as the one used for segmentation above.

- --opt should be one of three options, stopsign, people, or ref, specifying the type of reference object.
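When processing several folders or reference types, it can help to validate --opt before launching a long run. This sketch builds and runs the est_waterlevel.py command with the standard-library subprocess module; it assumes it is launched from the V-FloodNet/ root, and the helpers `build_command` and `run_estimation` are hypothetical.

```python
import subprocess
import sys
from typing import List, Sequence

# The three reference-object types accepted by --opt.
OPTIONS = ("stopsign", "people", "ref")


def build_command(test_path: str, test_name: str, opt: str) -> List[str]:
    """Build the est_waterlevel.py command line, rejecting unknown --opt values."""
    if opt not in OPTIONS:
        raise ValueError(f"--opt must be one of {OPTIONS}, got {opt!r}")
    return [
        sys.executable, "est_waterlevel.py",
        f"--test-path={test_path}",
        f"--test-name={test_name}",
        f"--opt={opt}",
    ]


def run_estimation(test_path: str, test_name: str,
                   opts: Sequence[str] = ("people", "stopsign")) -> None:
    """Run the estimation once per reference type, stopping on the first failure."""
    for opt in opts:
        subprocess.run(build_command(test_path, test_name, opt), check=True)
```

For example, `run_estimation("assets/lake_exp/", "lake_exp")` runs the two example commands with people and stopsign.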

python est_waterlevel.py --test-path=/path/to/image/folder --test-name=<anyname> --opt=<opt>

Example

python est_waterlevel.py --test-path=assets/lake_exp/ --test-name lake_exp --opt people

python est_waterlevel.py --test-path=assets/lake_exp/ --test-name lake_exp --opt stopsign

Optionally, for video input, you can compare the estimated water levels with the ground truth in records/groundtruth/ using

python scripts/cmp_hydrograph.py --test-name=<anyname>

Retraining the model from scratch or on your own data can take weeks. We strongly recommend using our pretrained models for inference.

To train the image segmentation module, please refer to train_image_seg.py:

python train_image_seg.py \
  --dataset-path=/path/to/Datasets/WaterDataset/train_images/ \
  --encoder=efficientnet-b4

To train the video segmentation module, please refer to train_video_seg.py. We provide an initial checkpoint on Google Drive whose network weights are pretrained on a large collection of general videos.

We use the following parameters to train our model:

python train_video_seg.py \
  --dataset=/path/to/WaterDataset/train_images \
  --resume=./records/level2_YouTubeVOS.pth \
  --new --log

@article{liang2023v,

title={V-FloodNet: A video segmentation system for urban flood detection and quantification},

author={Liang, Yongqing and Li, Xin and Tsai, Brian and Chen, Qin and Jafari, Navid},

journal={Environmental Modelling \& Software},

volume={160},

pages={105586},

year={2023},

publisher={Elsevier}

}

The corresponding author is Xin Li. All rights are reserved.