Henry Hengyuan Zhao · Pan Zhou · Mike Zheng Shou

Show Lab, National University of Singapore | Singapore Management University

- Two Data Generators - Genixer$_L$ and Genixer$_S$. Genixer$_L$ can generate various types of data, including Common VQA, MC-VQA, and MD data. Genixer$_S$ is specialized in generating grounding-like VQA data.
- Two Synthetic Datasets - 915K VQA-like data and 350K REC-like data for the pretraining stage.
- 8K Synthetic Dataset - llava_mix665k_synthetic_8k.jsonl is the mixed jsonl file for the finetuning stage. Use it to enhance your own MLLM.
- Synthetic VQA data with a medium-level Fuyu probability (0.5-0.7) benefits model training. We consider that samples with higher probability are primarily simple samples, which contribute less to the final model's understanding.

Usage and License Notices: The data and code are intended and licensed for research use only. They are also restricted to uses that follow the license agreements of LLaMA and Vicuna. The dataset is CC BY NC 4.0 (allowing only non-commercial use), and models trained using the dataset should not be used outside of research purposes.

-

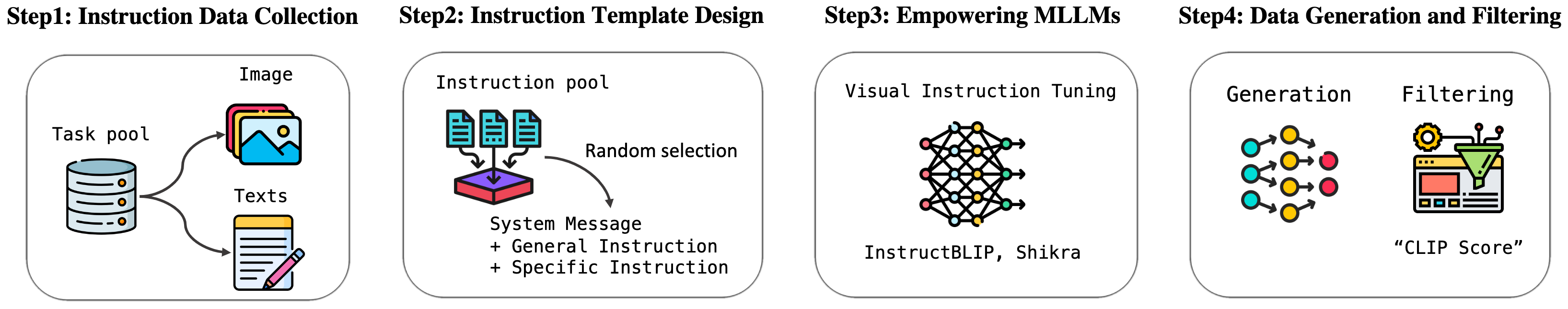

Instruction Data Collection: We systematically collected 9 primary vision-language (VL) tasks, categorized into two main groups: Generic and Grounding multimodal tasks.

-

Instruction Template Design: We developed a two-level instruction template tailored separately for task-agnostic and task-specific data generation.

-

Empowering MLLMs: We obtained two data generators.

-

Data Filtering: We use Fuyu-8B as a third-party MLLM for free data quality assessment. Logistic probabilities are calculated to evaluate the correctness of each data sample. Additionally, we found that data with medium-level probabilities tend to contribute more to the final model performance.

In accordance with the prevalence and practical relevance of real-world multi-modal tasks, we have carefully selected 9 representative multimodal tasks as listed in the following table for corresponding data generation. We categorize the VL tasks into two groups: 4 Generic tasks and 5 Grounding tasks.

Illustration of the proposed Fuyu-driven data filtering framework. The framework outputs a probability and a direct answer.
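One way to read these two outputs is sketched below, under the assumption that the check is posed to the filter model as a yes/no question and that the probability is a softmax over the logits of the two answer tokens. The function name, the logit inputs, and the yes/no framing are hypothetical and are not taken from the Genixer code.

```python
import math
from typing import Tuple


def answer_probability(yes_logit: float, no_logit: float) -> Tuple[str, float]:
    """Return (direct answer, probability of 'yes') from two answer-token logits.

    Assumption: the filter model scores exactly two candidate tokens.
    """
    # Numerically stable two-way softmax: subtract the max before exponentiating.
    m = max(yes_logit, no_logit)
    e_yes = math.exp(yes_logit - m)
    e_no = math.exp(no_logit - m)
    p_yes = e_yes / (e_yes + e_no)
    answer = "yes" if p_yes >= 0.5 else "no"
    return answer, p_yes
```

Calling `answer_probability(2.0, 0.0)` returns the answer `"yes"` together with a probability of roughly 0.88, which is the kind of score the later filtering step would consume.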

In an automatic data generation context, where the image content is not known in advance, determining the specific task type beforehand is particularly difficult, especially for large-scale data creation. Hence, we consider two key modes for visual instruction data generation: 1) task-agnostic data generation and 2) task-specific data generation.
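The difference between the two modes can be sketched as a prompt builder: with no task type, only a general instruction is used; with a task type, the instruction is narrowed to that task. The instruction wording and the function name below are hypothetical; the actual templates are in the repository's template files.

```python
from typing import Optional

# Hypothetical wording, used only for illustration.
GENERAL_INSTRUCTION = "Generate a question and answer pair for this image."


def build_generation_prompt(task_type: Optional[str] = None) -> str:
    """Task-agnostic when task_type is None, task-specific otherwise."""
    if task_type is None:
        # Task-agnostic mode: the generator decides which task fits the image.
        return GENERAL_INSTRUCTION
    # Task-specific mode: the requested task is stated explicitly.
    return f"{GENERAL_INSTRUCTION} This is a {task_type} task."
```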

Selected examples generated from

For the generic instructions used in training Genixer, please refer to the path Genixer_Shikra/config/_base_/dataset/template/GenQA_general_instructions.json for the details.

```bash
cd Genixer_LLaVA
conda create -n genixerL python=3.10 -y
conda activate genixerL
pip install --upgrade pip
pip install -e .
```

| Model Name | Checkpoints | Description |
|---|---|---|
| Genixer-llava-v1.5-7b | Model weights | Data Generator |
| llava-Genixer-915K-FT-8K-v1.5-7b | Model weights | Trained Model |

Please download the images from the constituent datasets:

- COCO: train2014
- GQA: images
- OCR-VQA: download script, we save all files as .jpg
- AOKVQA: download script
- TextVQA: train_val_images
- VisualGenome: part1, part2
- LLaVA-CC3M-Pretrain-595K: huggingface
- LLaVA-Pretrain: huggingface
- LLaVA-Instruct: huggingface
- Flickr30K: Kaggle

TrainDataforGenixerLLaVA.jsonl: 1M instruction tuning data for training the

Genixer_915K.jsonl: This is the synthetic instruction tuning data generated by our trained

Moreover, we provide two additional synthetic pretraining datasets mentioned in the ablation study:

- Download the model weight Genixer-llava-v1.5-7b under the folder checkpoints.
- Run evaluation on unannotated Flickr30K images with the generic data type; please refer to the script scripts/eval_genixer/generic_generation.sh.

```bash
CHUNKS=8
CKPT=Genixer-llava-v1.5-7b
qfile=data/flickr30k_imagequery.jsonl
imgdir=/yourpath/flickr30k/flickr30k_images/flickr30k_images
datatype=flickr30k_tem0.2
tasktype=generic

for IDX in $(seq 0 $((CHUNKS-1))); do
    CUDA_VISIBLE_DEVICES=$IDX python -m model_genixer_eval \
        --model-path checkpoints/$CKPT \
        --question-file $qfile \
        --image-folder $imgdir \
        --answers-file ./playground/data/genixer_eval/$datatype/$tasktype/answers/$CKPT/${CHUNKS}_${IDX}.jsonl \
        --task-type $tasktype \
        --num-chunks $CHUNKS \
        --chunk-idx $IDX \
        --temperature 0.2 \
        --conv-mode vicuna_v1 &
done

wait

output_file=./playground/data/genixer_eval/$datatype/$tasktype/answers/$CKPT/merge.jsonl

> "$output_file"

for IDX in $(seq 0 $((CHUNKS-1))); do
    cat ./playground/data/genixer_eval/$datatype/$tasktype/answers/$CKPT/${CHUNKS}_${IDX}.jsonl >> "$output_file"
done
```

More evaluation scripts can be found in scripts/eval_genixer.
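The generation script shards the question file across CHUNKS GPUs and then concatenates the per-chunk outputs. The sketch below mirrors that split-then-merge pattern in plain Python. The ceil-based contiguous split is an assumption about how --num-chunks and --chunk-idx are interpreted, and both helper names are hypothetical.

```python
import math
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def get_chunk(items: Sequence[T], num_chunks: int, chunk_idx: int) -> List[T]:
    """Return the chunk_idx-th of num_chunks contiguous, ceil-sized slices."""
    size = math.ceil(len(items) / num_chunks)
    return list(items[chunk_idx * size:(chunk_idx + 1) * size])


def merge_chunks(chunks: Sequence[Sequence[T]]) -> List[T]:
    """Concatenate per-chunk results in index order, like the `cat` loop."""
    merged: List[T] = []
    for chunk in chunks:
        merged.extend(chunk)
    return merged


questions = list(range(10))
parts = [get_chunk(questions, 4, i) for i in range(4)]
print(parts)                 # [[0, 1, 2], [3, 4, 5], [6, 7, 8], [9]]
print(merge_chunks(parts))   # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

Because the slices are contiguous and merged in index order, the merged file preserves the order of the original question file under this assumption.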

- Download the model weight clip-vit-large-patch14-336 under the folder checkpoints.
- Download the model weight llava-v1.5-7b under the folder checkpoints.
- Prepare TrainDataforGenixerLLaVA.jsonl under the folder data.
- Run the training script.

```bash
bash scripts/train_genixer.sh
```

```bash
#!/bin/bash

outputdir=exp/llava-v1.5-7b-Genixer

deepspeed llava/train/train_mem.py \
    --deepspeed ./scripts/zero3.json \
    --model_name_or_path checkpoints/llava-v1.5-7b \
    --version v1 \
    --data_path ./data/TrainDataforGenixerLLaVA.jsonl \
    --image_folder ./data \
    --vision_tower checkpoints/clip-vit-large-patch14-336 \
    --mm_projector_type mlp2x_gelu \
    --mm_vision_select_layer -2 \
    --mm_use_im_start_end False \
    --mm_use_im_patch_token False \
    --image_aspect_ratio pad \
    --group_by_modality_length True \
    --bf16 True \
    --output_dir $outputdir \
    --num_train_epochs 1 \
    --per_device_train_batch_size 8 \
    --per_device_eval_batch_size 4 \
    --gradient_accumulation_steps 2 \
    --evaluation_strategy "no" \
    --save_strategy "steps" \
    --save_steps 50000 \
    --save_total_limit 1 \
    --learning_rate 1e-5 \
    --weight_decay 0. \
    --warmup_ratio 0.03 \
    --lr_scheduler_type "cosine" \
    --logging_steps 1 \
    --tf32 True \
    --model_max_length 2048 \
    --gradient_checkpointing True \
    --dataloader_num_workers 4 \
    --lazy_preprocess True \
    --report_to wandb
```
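For reference, the effective (global) batch size of such a run is the per-device batch size times the gradient accumulation steps times the number of GPUs. The GPU count is not stated in the training script, so the value 8 below is purely an assumption; the other two numbers come from the script's flags.

```python
def effective_batch_size(per_device: int, grad_accum: int, num_gpus: int) -> int:
    """Number of samples contributing to one optimizer step."""
    return per_device * grad_accum * num_gpus


# per_device=8 and grad_accum=2 are taken from the flags; num_gpus=8 is assumed.
print(effective_batch_size(per_device=8, grad_accum=2, num_gpus=8))  # 128
```

When training on fewer GPUs, raising the gradient accumulation steps by the same factor keeps this product unchanged.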

- Download the model weight clip-vit-large-patch14-336 under the folder checkpoints.
- Download the model weight vicuna-7b-v1.5 under the folder checkpoints.
- Download the synthetic pretraining data Genixer_915K.jsonl under the folder data.
- Download the mixture finetuning data llava_mix665k_synthetic_8k.jsonl under the folder data.
- Run the pretraining script.

```bash
bash scripts/pretrain.sh
```

- Run the finetuning script.

```bash
bash scripts/finetune.sh
```

- Download llava-Genixer-915K-FT-8K-v1.5-7b under the folder checkpoints.
- Follow the data preparation steps from here.

Taking VizWiz as an example, you only need to set modelname to the name of the downloaded model and make sure the image folder path is correct.

```bash
modelname=llava-Genixer-915K-FT-8K-v1.5-7b

# Note: --model-path points at exp/; adjust it if the weights were
# downloaded under checkpoints/ as described in the steps above.
python -m llava.eval.model_vqa_loader \
    --model-path exp/$modelname \
    --question-file ./playground/data/eval/vizwiz/llava_test.jsonl \
    --image-folder /dataset/lavis/vizwiz/test/ \
    --answers-file ./playground/data/eval/vizwiz/answers/$modelname.jsonl \
    --temperature 0 \
    --conv-mode vicuna_v1

python scripts/convert_vizwiz_for_submission.py \
    --annotation-file ./playground/data/eval/vizwiz/llava_test.jsonl \
    --result-file ./playground/data/eval/vizwiz/answers/$modelname.jsonl \
    --result-upload-file ./playground/data/eval/vizwiz/answers_upload/$modelname.json
```

```bash
cd Genixer_Shikra
conda create -n GenixerS python=3.10
conda activate GenixerS
pip install -r requirements.txt
```

| Model Name | Checkpoints | Description |
|---|---|---|
| Genixer-shikra-7b | Model weights | Data Generator |
| shikra-Genixer-350K-7b | Model weights | Trained Model |

- COCO: train2014
- GQA: images
- VisualGenome: part1, part2
- LLaVA-CC3M-Pretrain-595K: huggingface
- LLaVA-Pretrain: huggingface
- LLaVA-Instruct: huggingface
- Flickr30K: Kaggle
- SBU: images

Download the original annotation data from here and put it under data.

Please refer to the file Genixer_Shikra/config/_base_/dataset/DEFAULT_TRAIN_DATASET.py to replace yourpath with the exact folder path on your machine.

```python
genrecdata=dict(
    type='GenRECDataset',
    filename=r'{{fileDirname}}/../../../data/REC_ref3_train.jsonl',
    image_folder=r'/yourpath/coco2014/train2014',
    template_file=r"{{fileDirname}}/template/GenQA_general_instructions.json",
),
```
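Before launching a run, it can help to confirm that no yourpath placeholder is left in the dataset config. The helper below is a hypothetical convenience and not part of the repository; it only scans text for the placeholder string and reports the lines that still contain it.

```python
from typing import List, Tuple


def find_placeholders(config_text: str, placeholder: str = "/yourpath") -> List[Tuple[int, str]]:
    """Return (line number, stripped line) for each line still containing the placeholder."""
    return [
        (lineno, line.strip())
        for lineno, line in enumerate(config_text.splitlines(), start=1)
        if placeholder in line
    ]


sample = """genrecdata=dict(
    type='GenRECDataset',
    image_folder=r'/yourpath/coco2014/train2014',
),"""
print(find_placeholders(sample))
# [(3, "image_folder=r'/yourpath/coco2014/train2014',")]
```

To check the real file, the same function could be applied to the text read from Genixer_Shikra/config/_base_/dataset/DEFAULT_TRAIN_DATASET.py; an empty result would mean every placeholder has been replaced.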

We use

- Download the model weight of Genixer-shikra-7b under the folder checkpoints.
- Download the vision encoder clip-vit-large-patch14 under the folder checkpoints.
- Run the script run_eval_genixer.sh.

```bash
accelerate launch --num_processes 8 \
    --main_process_port 23782 \
    mllm/pipeline/finetune.py \
    config/genixer_eval_GenQA.py \
    --cfg-options model_args.model_name_or_path=checkpoints/Genixer-shikra-7b \
    training_args.output_dir=results/Genixer-shikra-7b
```

- Download the vision encoder clip-vit-large-patch14 under the folder checkpoints.
- Download the LLM model weight vicuna-7b-v1.1 under the folder checkpoints.
- Download the delta model shikra-7b-delta-v1 of Shikra.
- Transform the delta model to shikra-7b-v1.1 with the command bash model_transform.sh.

```bash
python mllm/models/models/apply_delta.py \
    --base /yourpath/vicuna-7b-v1.1 \
    --target checkpoints/shikra-7b-v1.1 \
    --delta checkpoints/shikra-7b-delta-v1
```

- Run the stage-1 training script.

```bash
bash run_genixer_stage1.sh
```

- Run the stage-2 training script.

```bash
bash run_genixer_stage2.sh
```
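The delta transformation step in the list above follows the usual delta-weight idea: the released delta is added to the base weights, parameter by parameter, to recover the full model. The sketch below illustrates that arithmetic with plain Python lists. It is not the repository's apply_delta.py, the parameter names are made up, and copying delta-only parameters unchanged is an assumption.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]


def apply_delta(base: Weights, delta: Weights) -> Weights:
    """target[name] = base[name] + delta[name]; names only in delta are copied as-is."""
    target: Weights = {}
    for name, delta_values in delta.items():
        if name in base:
            base_values = base[name]
            if len(base_values) != len(delta_values):
                raise ValueError(f"shape mismatch for {name}")
            target[name] = [b + d for b, d in zip(base_values, delta_values)]
        else:
            # Assumed handling for parameters absent from the base model.
            target[name] = list(delta_values)
    return target


base = {"layer.weight": [1.0, 2.0]}
delta = {"layer.weight": [0.5, -1.0], "projector.weight": [3.0]}
print(apply_delta(base, delta))
# {'layer.weight': [1.5, 1.0], 'projector.weight': [3.0]}
```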

- Download the vision encoder clip-vit-large-patch14 under the folder checkpoints.
- Download the LLM model weight vicuna-7b-v1.1 under the folder checkpoints.
- Run the script for the stage-0 pretraining.

```bash
bash run_genixer_shikra_stage0.sh
```

- Run the script for the stage-1 pretraining.

```bash
bash run_genixer_shikra_stage1.sh
```

- Run the script for the stage-2 pretraining.

```bash
bash run_genixer_shikra_stage2.sh
```

- Download the model shikra-Genixer-350K-7b under the folder checkpoints.
- Download the vision encoder clip-vit-large-patch14 under the folder checkpoints.
- Run the script: bash run_eval_rec.sh.

```bash
accelerate launch --num_processes 8 \
    --main_process_port 23782 \
    mllm/pipeline/finetune.py \
    config/eval_multi_rec.py \
    --cfg-options model_args.model_name_or_path=checkpoints/shikra-Genixer-350K-7b \
    training_args.output_dir=results/shikra-Genixer-350K-7b
```

We provide the code for Fuyu-8B-based data filtering in the file Genixer_LLaVA/fuyudatafiltering/GenQA_filtering_mp.py.

Run the following command for multi-GPU data filtering.

```bash
bash scripts/fuyudatafilter.sh
```
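Once each generated sample carries a Fuyu probability, keeping the medium band (the 0.5-0.7 range mentioned earlier) is a simple threshold step. The sketch below assumes one JSON object per line with a `prob` field. That field name, the helper name, and the inclusive bounds are all assumptions, not details of the repository's output format.

```python
import json
from typing import Iterable, List


def keep_medium_band(jsonl_lines: Iterable[str], low: float = 0.5,
                     high: float = 0.7, prob_key: str = "prob") -> List[dict]:
    """Keep records whose probability lies in [low, high]."""
    kept = []
    for line in jsonl_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        if low <= record[prob_key] <= high:
            kept.append(record)
    return kept


lines = [
    '{"id": 1, "prob": 0.95}',
    '{"id": 2, "prob": 0.62}',
    '{"id": 3, "prob": 0.31}',
]
print([r["id"] for r in keep_medium_band(lines)])  # [2]
```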

We run the CLIP-driven REC data filtering with the script multiprocess_evalclipscore.py.

```bash
python Genixer_Shikra/multiprocess_evalclipscore.py
```
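A CLIP-style score is commonly the cosine similarity between an image (or region) embedding and a text embedding. The sketch below shows that scoring and a threshold filter on toy vectors. The embeddings, the threshold value, and the function names are hypothetical, and the actual script may compute or scale the score differently.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def filter_by_score(
    samples: Sequence[Tuple[str, Sequence[float], Sequence[float]]],
    threshold: float,
) -> List[str]:
    """samples: (sample_id, region_embedding, text_embedding); keep ids scoring >= threshold."""
    return [
        sample_id
        for sample_id, region_emb, text_emb in samples
        if cosine_similarity(region_emb, text_emb) >= threshold
    ]


toy = [
    ("aligned", [1.0, 0.0], [1.0, 0.0]),      # identical direction
    ("orthogonal", [1.0, 0.0], [0.0, 1.0]),   # unrelated direction
]
print(filter_by_score(toy, threshold=0.5))  # ['aligned']
```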

If you find Genixer useful, please cite using this BibTeX:

```bibtex
@misc{zhao2024genixerempoweringmultimodallarge,
    title={Genixer: Empowering Multimodal Large Language Models as a Powerful Data Generator},
    author={Henry Hengyuan Zhao and Pan Zhou and Mike Zheng Shou},
    year={2024},
    eprint={2312.06731},
    archivePrefix={arXiv},
    primaryClass={cs.CV},
    url={https://arxiv.org/abs/2312.06731},
}
```