
[Fleet]: event.datasets field is blank with logs under Logs tab. #27404

Comments

Pinging @elastic/fleet (Team:Fleet)

@manishgupta-qasource Please review.

Reviewed & Assigned to @jen-huang CC: @EricDavisX

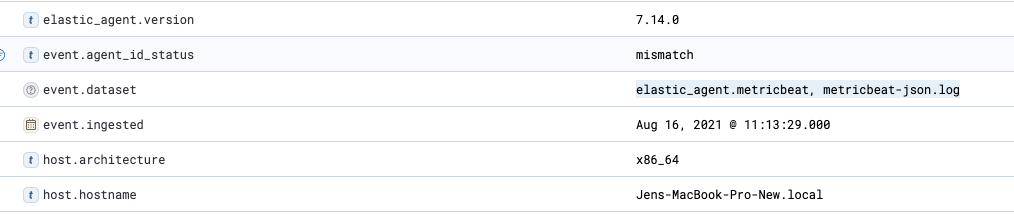

Looks like this is happening because multiple values are being written to … This looks like something the agent team should look at, to confirm whether these kinds of values are expected (…

Pinging @elastic/agent (Team:Agent)

If this is coming from a 7.14 Agent, I'd ask for further review to see how impactful this may be. We might fix it more urgently if it is impactful.

Does anyone know where the Agent adds …? Code reference: beats/x-pack/elastic-agent/pkg/agent/operation/monitoring.go, lines 322 to 329 in 084ba4e.

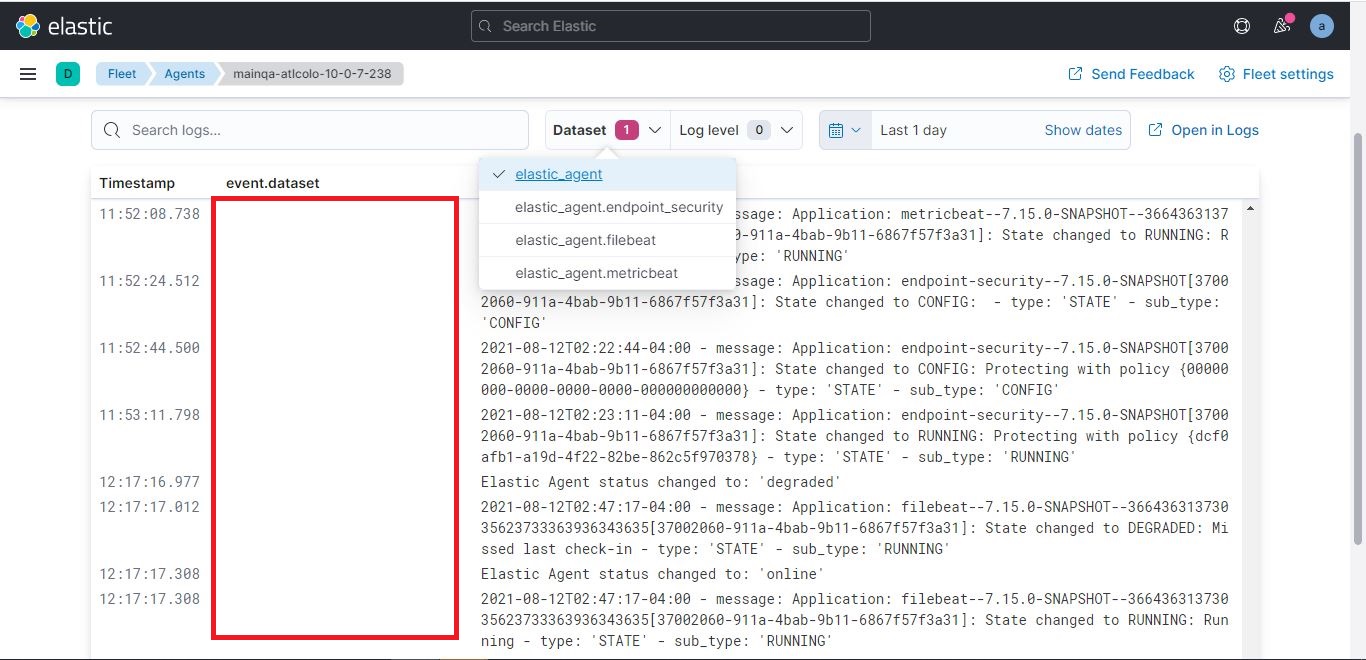

@michel-laterman Interesting that your UI screenshot actually displays values in the …

@andrewkroh, it looks like the filename-based event.dataset entries are being written directly into the logs. For example:

```json
{
  "log.level": "info",
  "@timestamp": "2021-08-16T20:02:19.901Z",
  "log.logger": "api",
  "log.origin": {
    "file.name": "api/server.go",
    "file.line": 62
  },
  "message": "Starting stats endpoint",
  "service.name": "filebeat",
  "event.dataset": "filebeat-json.log",
  "ecs.version": "1.6.0"
}
```

This only occurs with the log entries (there are no dataset arrays for metrics).
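One plausible way a single field ends up with several values (an assumption, not confirmed in this thread): Elasticsearch treats a dotted key such as "event.dataset" and a nested "event": {"dataset": ...} object as the same field path, so if the log line carries its own event.dataset and the shipper adds another, the indexed document holds an array. The sketch below is a simplified model of that dotted-key expansion, not Elasticsearch's implementation; the second value, elastic_agent.filebeat, is a hypothetical stand-in for whatever the Agent adds.

```python
from typing import Any, Dict, List


def expand_dotted(doc: Dict[str, Any]) -> Dict[str, Any]:
    """Expand dotted keys such as "event.dataset" into nested objects.

    Simplified model (assumption): when two keys resolve to the same leaf
    path, their values are collected into a list instead of overwriting.
    """
    out: Dict[str, Any] = {}

    def as_list(value: Any) -> List[Any]:
        return value if isinstance(value, list) else [value]

    def put(target: Dict[str, Any], path: List[str], value: Any) -> None:
        head, *rest = path
        if rest:
            child = target.setdefault(head, {})
            if not isinstance(child, dict):
                raise ValueError(f"{head!r} is both a leaf and an object")
            put(child, rest, value)
        elif head in target:
            # Same field path seen twice: keep both values as an array.
            target[head] = as_list(target[head]) + as_list(value)
        else:
            target[head] = value

    def walk(prefix: List[str], obj: Dict[str, Any]) -> None:
        for key, value in obj.items():
            path = prefix + key.split(".")
            if isinstance(value, dict):
                walk(path, value)
            else:
                put(out, path, value)

    walk([], doc)
    return out


# The dataset from the log line above, plus a hypothetical value added by the shipper.
shipped = {
    "event.dataset": "filebeat-json.log",
    "event": {"dataset": "elastic_agent.filebeat"},  # hypothetical
}
print(expand_dotted(shipped))
# {'event': {'dataset': ['filebeat-json.log', 'elastic_agent.filebeat']}}
```

A UI column that expects one keyword value per document may render such an array as blank, which would match the symptom in the issue title, though the thread does not confirm that detail.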

The filename-based dataset entries are added as part of the ECS annotations: lines 199 to 205 in afde058.

@hop-dev Can you take a look on the Fleet side to see why the …

Adding the LogFileName as …

@felixbarny I see you suggested …

@ruflin is that because the …

The idea was that each Beat/shipper defines its own dataset, and that the Filebeat config to ship the log files looks the same for every shipper, without needing to define the dataset. However, that requires event routing, so that all logs can be sent to the same routing pipeline, which routes the events based on the …
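Routing on the dataset only works when every event carries exactly one dataset value. A minimal sketch of that routing decision, assuming the {type}-{dataset}-{namespace} data stream naming scheme with logs and default as defaults; the generic fallback name and the route/dataset_of helpers are hypothetical, and the naming-scheme character restrictions are not checked here.

```python
from typing import Any, Dict, Optional


def dataset_of(event: Dict[str, Any]) -> Optional[str]:
    """Return the event's dataset if it is a single non-empty string, else None."""
    value = event.get("event.dataset")
    if value is None and isinstance(event.get("event"), dict):
        value = event["event"].get("dataset")
    return value if isinstance(value, str) and value else None


def route(event: Dict[str, Any], ds_type: str = "logs",
          namespace: str = "default", fallback: str = "generic") -> str:
    """Pick a target data stream name of the form {type}-{dataset}-{namespace}.

    Events with no dataset, or with an array of datasets, go to the
    (hypothetical) fallback dataset because there is no single value to route on.
    """
    dataset = dataset_of(event) or fallback
    return f"{ds_type}-{dataset}-{namespace}"


print(route({"event": {"dataset": "elastic_agent.filebeat"}}))  # logs-elastic_agent.filebeat-default
print(route({"event.dataset": ["filebeat-json.log", "elastic_agent.filebeat"]}))  # logs-generic-default
```

The second call shows why an array is a problem for this design: the router has no principled way to choose between the two values.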

In general I like the idea that the …

Most importantly, there can only be a single dataset value in the logs or in the resulting event. I think the short-term fix is to get rid of the …
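Whatever the final fix, the resulting event has to carry a single dataset value. A minimal sketch of collapsing all candidate values into one; the precedence rule (a shipper-defined dataset first, otherwise the first distinct value found) and the shipper_dataset parameter are hypothetical choices for illustration, not what Fleet or the Agent implements.

```python
from typing import Any, Dict, List, Optional


def normalize_dataset(doc: Dict[str, Any],
                      shipper_dataset: Optional[str] = None) -> Dict[str, Any]:
    """Return a copy of doc whose event.dataset holds at most one value.

    Hypothetical precedence: a dataset defined by the shipper wins over
    anything the logger wrote; otherwise keep the first distinct value.
    """
    out = dict(doc)
    event = dict(out.get("event") or {})  # copy so the input is not mutated
    candidates: List[Any] = []
    # Gather values from both the dotted key and the nested object.
    for raw in (out.pop("event.dataset", None), event.pop("dataset", None)):
        if isinstance(raw, list):
            candidates.extend(raw)
        elif raw is not None:
            candidates.append(raw)

    chosen = shipper_dataset or next(iter(dict.fromkeys(candidates)), None)
    if chosen is not None:
        event["dataset"] = chosen
    if event:
        out["event"] = event
    else:
        out.pop("event", None)
    return out


print(normalize_dataset(
    {"event.dataset": "filebeat-json.log", "message": "Starting stats endpoint"},
    shipper_dataset="elastic_agent.filebeat",  # hypothetical
))
# {'message': 'Starting stats endpoint', 'event': {'dataset': 'elastic_agent.filebeat'}}
```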

This seemed to be a best practice, looking at datasets like …

Why not have the same datastream regardless of how it's run?

++

I must confess I'm a bit surprised by the "best practice" in ECS Logging. Why would we include …

I don't think I have a good, purely technical argument on this one. The way I think of it is: if you run just Filebeat, that is the service you run and that is monitored. As soon as you run Elastic Agent, it will run some processes, which is an implementation detail for the user. How it writes logs is also an implementation detail. All the logs can be consumed under …

Looking at examples (maybe from Beats modules, not sure), I thought this was an established best practice, so I added it to the ECS logging spec as a default value. I don't feel strongly about the …

I believe the root cause of the field not being shown in the table is that …

@ruflin See also the example in the Logs app docs. Do you have a suggestion for a better default?

I wonder where these values come from. In modules/packages we don't use … In the case of Filebeat, I assume the JSON logs and non-JSON logs contain exactly the same content. So, in the ideal scenario, there is an ingest pipeline that converts both into the same format, so we could just use …
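The "convert both into the same format" idea can be illustrated outside Elasticsearch. This Python sketch is not ingest-processor syntax; the tab-separated plain-text layout, the normalize_line helper, and the dataset name passed in are all assumptions, since the thread specifies none of them.

```python
import json
from typing import Any, Dict


def normalize_line(line: str, dataset: str) -> Dict[str, Any]:
    """Turn a JSON or plain-text log line into the same flat document.

    Assumption: plain-text lines are tab-separated as
    timestamp, LEVEL, [logger], origin, message.
    """
    line = line.strip()
    if line.startswith("{"):
        src = json.loads(line)
        doc = {
            "@timestamp": src.get("@timestamp"),
            "log.level": src.get("log.level"),
            "log.logger": src.get("log.logger"),
            "message": src.get("message"),
        }
    else:
        # Raises ValueError if the line has fewer than five tab-separated fields.
        ts, level, logger, _origin, message = line.split("\t", 4)
        doc = {
            "@timestamp": ts,
            "log.level": level.lower(),
            "log.logger": logger.strip("[]"),
            "message": message,
        }
    # Whatever the line itself said, the output carries exactly one dataset value.
    doc["event.dataset"] = dataset
    return doc


json_line = ('{"log.level":"info","@timestamp":"2021-08-16T20:02:19.901Z",'
             '"log.logger":"api","message":"Starting stats endpoint",'
             '"event.dataset":"filebeat-json.log"}')
plain_line = "2021-08-16T20:02:19.901Z\tINFO\t[api]\tapi/server.go:62\tStarting stats endpoint"

# "filebeat" is a hypothetical dataset name chosen for the example.
print(normalize_line(json_line, "filebeat") == normalize_line(plain_line, "filebeat"))  # True
```

Because both inputs end up identical, the dataset no longer needs to encode which file format the line came from.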

Kibana version: 7.15.0 Snapshot Kibana cloud environment

Host OS and Browser version: All, All

Build details:

Preconditions:

Steps to reproduce:

Expected Result:

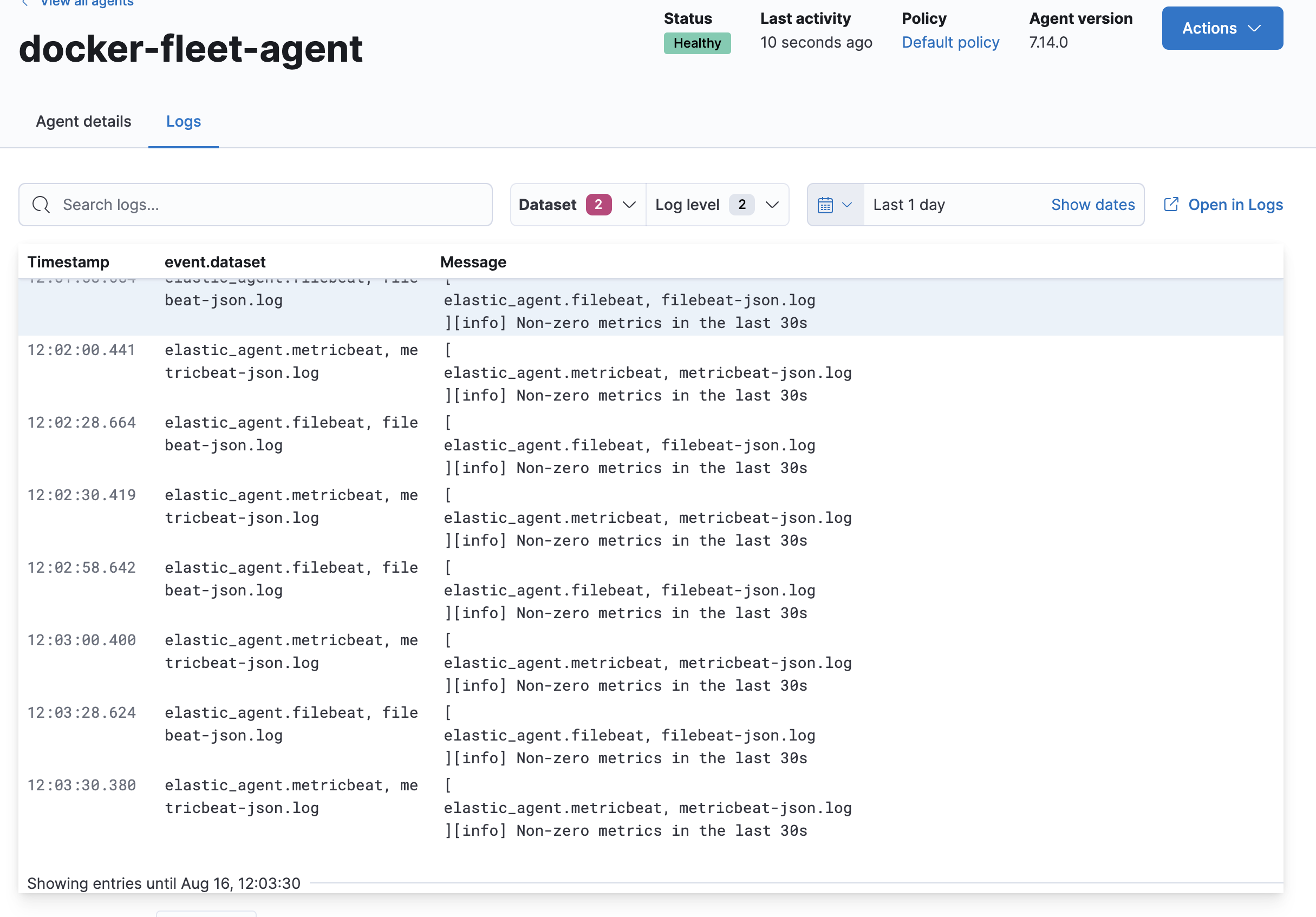

The event.datasets field should not be blank for logs under the Logs tab. The dataset selected from the Datasets dropdown should be visible in the event.datasets field.

Note:

Screenshot:
