Inference at the edge #205


Kudos on all the incredible work you and the community did!

-

Thanks for your amazing work with both whisper.cpp and llama.cpp. I've been hugely inspired by your contributions (especially with Whisper while working on Buzz), and I share your excitement about all the amazing possibilities of on-device inference. Personally, I've been thinking a lot about multi-modal on-device inference, like an assistive device that can both capture image data and respond to voice and text commands offline, and I plan to hack something together soon. In any case, thanks again for all your work. I really do hope to continue contributing to your projects (and keep improving my C++ :)) and learning more from you in the future.

Chidi

-

"This project will remain open-source"

-

As someone involved in a number of "Inference at the edge" projects which are feature-focused, the feature all of them truly needed was performance! Now, with llama.cpp and its forks, these projects can reach audiences they never could before. These are good thoughts. Thank you Georgi, and thank you everyone else who has contributed and is contributing, no matter how big or small.

-

This core/edge thing appears to have a lot of potential, and everyone should at least be very transparent about it: what exactly runs at the edge in current commercial products? Then we would know how to plan our resources and not get "frustrated" when, e.g., Microsoft Edge crashes on some of them, or when the claims don't hold because the edge is not at the expected level. Before more countries embark on building AI capabilities (UK?), maybe that should be made clear. Will there be a national core or a national edge?

-

This is the true spirit of hacking technologies from the bottom up! 🥷💻

Keep doing the good work! 🚀🤘

-

llama.cpp works shockingly well. You've proved that we don't need 16 bits, only 16 levels! Thanks so much for what you're doing. It's because of people like you that open source / community / collaborative AI will catch up with the strongest commercial AI, or at least contribute substantially and remain valuable. I've noticed that open source software is generally of much higher quality (for security, dev and research purposes at least).
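
For readers unfamiliar with the "16 levels" remark: 4 bits can encode 2^4 = 16 distinct values, so each weight is stored as one of 16 integer codes plus a shared scale per block. Below is a minimal sketch of that idea, assuming a simple symmetric scheme. The function names `quantize_q4` and `dequantize_q4` are hypothetical, and this is not necessarily the exact format llama.cpp uses.

```python
def quantize_q4(block):
    """Map a block of floats to integer codes in 0..15 plus one shared scale.

    Illustrative symmetric scheme (an assumption, not llama.cpp's format):
    the largest magnitude in the block maps to +/-7 around a zero point of 8.
    """
    amax = max(abs(x) for x in block)
    scale = amax / 7.0 if amax > 0 else 1.0
    # round() picks the nearest level; the clamp guards the 4-bit range.
    codes = [max(0, min(15, round(x / scale) + 8)) for x in block]
    return scale, codes


def dequantize_q4(scale, codes):
    """Recover approximate floats from the 4-bit codes and the shared scale."""
    return [(c - 8) * scale for c in codes]


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.7, -0.31, 0.0, 0.44]
    scale, codes = quantize_q4(weights)
    restored = dequantize_q4(scale, codes)
    for w, c, r in zip(weights, codes, restored):
        print(f"{w:+.3f} -> code {c:2d} -> {r:+.3f}")
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`), which is the precision being traded for a roughly 4x smaller footprint than 16-bit weights.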

-

I really appreciate the incredible efforts you've put into this project. It's wonderful to know that you intend to keep it open-source and are considering the addition of more models. One particularly intriguing model is FLAN-UL2 20B. Though its MMLU performance is inferior to LLaMA 65B, it has already been instruction fine-tuned and comes with an Apache 2.0 license. This could potentially enable a lot of interesting real-world use cases, such as question answering across a collection of documents. It is, however, an encoder-decoder architecture and might be more work to get up and running.

-

One important difference is that FLAN-UL2 20B has a 4x bigger context length with 2048 tokens. That makes it more suitable for some use cases such as in-context learning and retrieval-augmented generation.

-

CTranslate2 could be good inspiration. It's a C++ inference engine for Transformer language models with a focus on machine translation. It performs very well on CPUs and uses hardware acceleration effectively. Thanks for the great open-source work! I want to play around with llama.cpp this weekend.

-

@ggerganov About these AVX2 routines, I had an idea about possible micro-optimizations.

-

I like your phrase "Bloating the software with the ideas of today will make it useless tomorrow."

-

Btw, thank you very much for this code, which runs beautifully on my 10-year-old MacBook Pro. Edmund.

-

There are libraries for hardware-accelerated matrix operations on the Raspberry Pi: https://github.com/Idein/qmkl6

-

Very slow hope iPhone

-

This is a great effort and I hope it gets somewhere. Right now, running a model for inference on a GPU is cost-prohibitive for most ideas, projects, and bootstrapped startups compared to just using the ChatGPT API. But once you are locked into that ecosystem, the cost, which seems low per token, can increase exponentially. Plus, the LLaMA licensing is also ambiguous.

-

Thanks for the incredible work!

-

Hi, I made a Flutter mobile app with your project. I am really interested in this idea! The repo: Sherpa (GitHub). I will soon update to the latest version of llama.cpp, but if there is an optimisation for low-end devices, I am extremely interested.

-

We will look back on this in 20 years, when telling the tales of cloud vs edge...

-

I really appreciate your support for memory-mapped files. Being able to "cheat" and run models I shouldn't be able to, thanks to your efficient programming, is simply a miracle.
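
For context on why memory mapping helps here: a mapped file is paged in by the operating system on demand, so a program can address a weights file without first reading all of it into memory. The sketch below illustrates the general technique with Python's standard `mmap` module. The flat little-endian float32 layout and the helper names `write_weights` and `read_weight` are assumptions for illustration, not llama.cpp's loader or file format.

```python
import mmap
import os
import struct
import tempfile


def write_weights(path, values):
    """Write values as a flat array of little-endian float32 (toy format)."""
    with open(path, "wb") as f:
        f.write(struct.pack(f"<{len(values)}f", *values))


def read_weight(path, index):
    """Read a single float32 by index through a read-only memory map.

    Only the page containing the requested offset needs to be resident;
    the rest of the file is never copied into the process by this call.
    """
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            (value,) = struct.unpack_from("<f", mm, index * 4)
            return value


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "weights.bin")
    write_weights(path, [0.5, 1.5, 2.5, 3.5])
    print(read_weight(path, 2))
```

Because the mapping is read-only and backed by the file, the OS can also evict pages under memory pressure and reload them later, which is what lets a model larger than comfortable RAM still run, at the cost of extra disk reads.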

-

What do you think happened that makes people consider that they “shouldn’t be able” to run large models on small hardware?

-

Inference at the edge

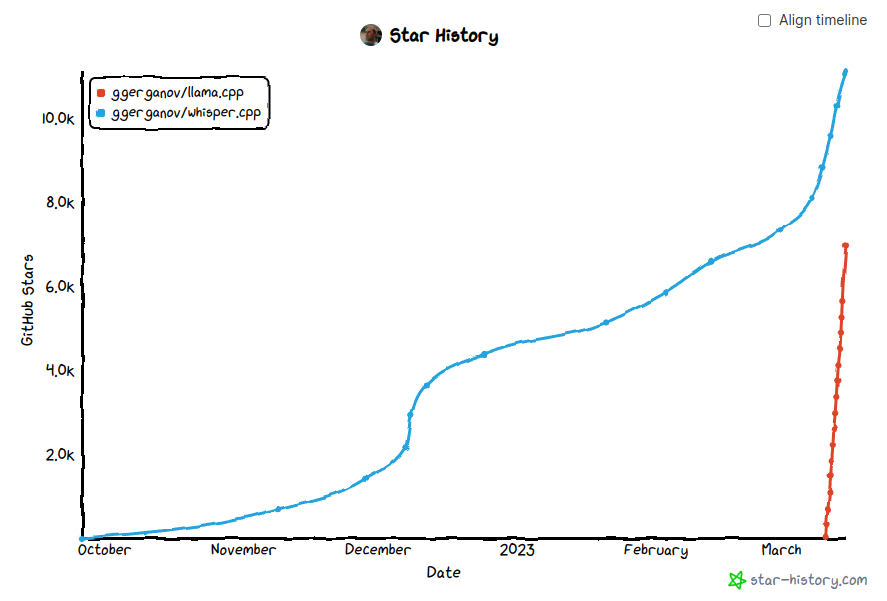

Based on the positive responses to whisper.cpp and, more recently, llama.cpp, it looks like there is a strong and growing interest in doing efficient transformer model inference on-device (i.e. at the edge).

Over the past few days, I received a large number of requests and e-mails with various ideas for startups, projects, and collaboration. This makes me confident that there is something of value in these little projects. It would be foolish to let this existing momentum go to waste.

Recently, I've also been seeing some very good ideas and code contributions by many developers:

llama.cpp efficiency by 10% with simple SIMD change (Twitter)

The AI field currently presents a wide range of cool things to do. Not all of them are really useful (probably most are not), but still, they are fun and cool. And I think a lot of people like to work on fun and cool projects (for now, we can leave the "useful" projects to the big corps :)). From chat bots that can listen and talk in your browser, to editing code with your voice or even running 7B models on a mobile device. The ideas are endless and I personally have many of them. Bringing those ideas from the cloud to the device, into the hands of the users, is exciting!

Naturally, I am thinking about ways to build on top of all this. So here are a few thoughts that I have so far:

I hope that you share the hacking spirit that I have and would love to hear your ideas and comments about how you see the future of "inference at the edge".

Edit: "on the edge" -> "at the edge"
