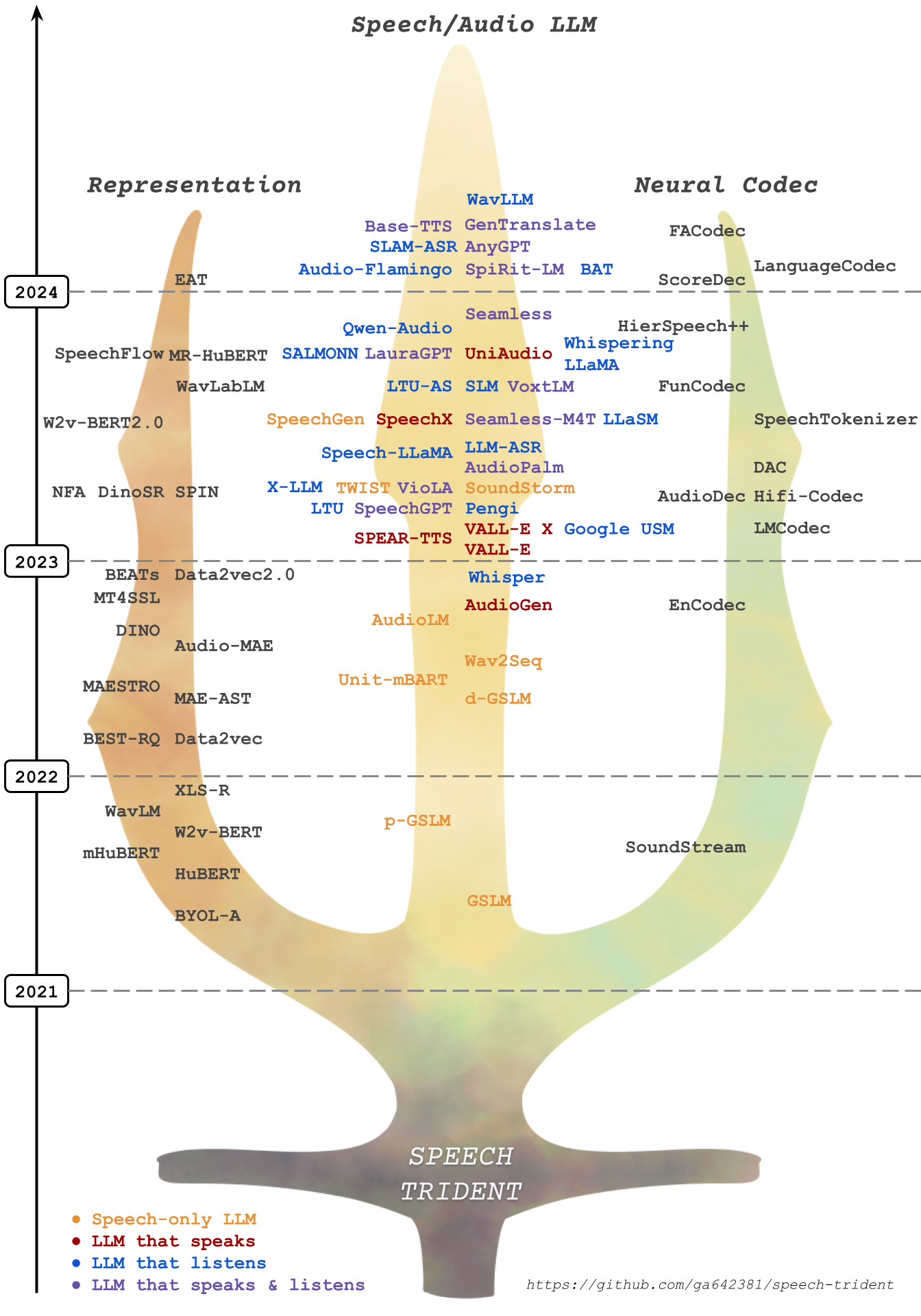

In this repository, we survey three crucial areas that contribute to speech/audio large language models: (1) representation learning, (2) neural codecs, and (3) language models.

1.⚡ Speech Representation Models: These models focus on learning structural speech representations, which can then be quantized into discrete speech tokens, often referred to as semantic tokens.

2.⚡ Speech Neural Codec Models: These models are designed to learn speech and audio discrete tokens, often referred to as acoustic tokens, while maintaining reconstruction ability and low bitrate.

3.⚡ Speech Large Language Models: These models are trained on top of speech and acoustic tokens using a language modeling approach. They demonstrate proficiency in both speech understanding and speech generation tasks.
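
To make the token terminology above concrete, here is a minimal sketch of the two ideas the list relies on: mapping continuous frame features to discrete token IDs by nearest-codebook lookup, and estimating the bitrate of a token stream. The function names, the toy codebook, and the numbers (75 frames per second, 8 codebooks, 1024 entries each) are illustrative assumptions, not the configuration of any particular model listed in this repository.

```python
import math


def quantize(frames, codebook):
    """Map each feature frame to the index of its nearest codebook vector."""
    tokens = []
    for frame in frames:
        # Euclidean distance from this frame to every codebook entry.
        distances = [math.dist(frame, centroid) for centroid in codebook]
        tokens.append(distances.index(min(distances)))
    return tokens


def bitrate_bps(frame_rate_hz, num_codebooks, codebook_size):
    """Bits per second of a token stream: frames/s * codebooks * bits per token."""
    return frame_rate_hz * num_codebooks * math.log2(codebook_size)


# Toy 2-D "features" and a 3-entry codebook (hypothetical values).
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]]
frames = [[0.1, -0.1], [0.9, 1.2], [-0.8, 0.7], [1.1, 0.9]]

tokens = quantize(frames, codebook)
print(tokens)                    # [0, 1, 2, 1]
print(bitrate_bps(75, 8, 1024))  # 6000.0
```

In practice the codebook is learned (for example with k-means over self-supervised features, or jointly with an encoder and decoder in a codec), and a language model is then trained on the resulting token sequences.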

| Kai-Wei Chang | Haibin Wu | Wei-Cheng Tseng |
|---|---|---|
| Kehan Lu | Chun-Yi Kuan | Hung-yi Lee |

- The challenge covers today's neural audio codecs and speech/audio language models.

- Time: December 3 starting at 15:15

- Detailed agenda: https://codecsuperb.github.io/

- Keynote speakers
  - Neil Zeghidour (Moshi): 15:15-16:00
  - Dongchao Yang (CUHK): 16:00-16:35
  - Shang-Wen Li (Meta): 16:35-17:10
  - Wenwu Wang (University of Surrey): 17:40-18:15
  - Minje Kim (UIUC): 18:15-18:50
- Host
- Accepted papers (recording)
  - ESPnet-Codec: Comprehensive Training and Evaluation of Neural Codecs for Audio, Music, and Speech
  - Codec-SUPERB @ SLT 2024: A lightweight benchmark for neural audio codec models
  - Investigating neural audio codecs for speech language model-based speech generation
  - Addressing Index Collapse of Large-Codebook Speech Tokenizer with Dual-Decoding Product-Quantized Variational Auto-Encoder
  - MDCTCodec: A Lightweight MDCT-based Neural Audio Codec towards High Sampling Rate and Low Bitrate Scenarios

| Date | Model Name | Paper Title | Link |

|---|---|---|---|

| 2024-12 | GLM-4-Voice | GLM-4-Voice: Towards Intelligent and Human-Like End-to-End Spoken Chatbot | Paper |

| 2024-12 | AlignFormer | AlignFormer: Modality Matching Can Achieve Better Zero-shot Instruction-Following Speech-LLM | Paper |

| 2024-11 | -- | Scaling Speech-Text Pre-training with Synthetic Interleaved Data | Paper |

| 2024-11 | -- | State-Space Large Audio Language Models | Paper |

| 2024-11 | -- | Building a Taiwanese Mandarin Spoken Language Model: A First Attempt | Paper |

| 2024-11 | Ultravox | Ultravox: An open-weight alternative to GPT-4o Realtime | Blog |

| 2024-11 | hertz-dev | blog | GitHub |

| 2024-11 | Freeze-Omni | Freeze-Omni: A Smart and Low Latency Speech-to-speech Dialogue Model with Frozen LLM | paper |

| 2024-11 | Align-SLM | Align-SLM: Textless Spoken Language Models with Reinforcement Learning from AI Feedback | paper |

| 2024-10 | Ichigo | Ichigo: Mixed-Modal Early-Fusion Realtime Voice Assistant | paper, code |

| 2024-10 | OmniFlatten | OmniFlatten: An End-to-end GPT Model for Seamless Voice Conversation | paper |

| 2024-10 | GPT-4o | GPT-4o System Card | paper |

| 2024-10 | Baichuan-OMNI | Baichuan-Omni Technical Report | paper |

| 2024-10 | GLM-4-Voice | GLM-4-Voice | GitHub |

| 2024-10 | -- | Roadmap towards Superhuman Speech Understanding using Large Language Models | paper |

| 2024-10 | SALMONN-OMNI | SALMONN-OMNI: A Speech Understanding and Generation LLM in a Codec-Free Full-Duplex Framework | paper |

| 2024-10 | Mini-Omni 2 | Mini-Omni2: Towards Open-source GPT-4o with Vision, Speech and Duplex Capabilities | paper |

| 2024-10 | HALL-E | HALL-E: Hierarchical Neural Codec Language Model for Minute-Long Zero-Shot Text-to-Speech Synthesis | paper |

| 2024-10 | SyllableLM | SyllableLM: Learning Coarse Semantic Units for Speech Language Models | paper |

| 2024-09 | Moshi | Moshi: a speech-text foundation model for real-time dialogue | paper |

| 2024-09 | Takin AudioLLM | Takin: A Cohort of Superior Quality Zero-shot Speech Generation Models | paper |

| 2024-09 | FireRedTTS | FireRedTTS: A Foundation Text-To-Speech Framework for Industry-Level Generative Speech Applications | paper |

| 2024-09 | LLaMA-Omni | LLaMA-Omni: Seamless Speech Interaction with Large Language Models | paper |

| 2024-09 | MaskGCT | MaskGCT: Zero-Shot Text-to-Speech with Masked Generative Codec Transformer | paper |

| 2024-09 | SSR-Speech | SSR-Speech: Towards Stable, Safe and Robust Zero-shot Text-based Speech Editing and Synthesis | paper |

| 2024-09 | MoWE-Audio | MoWE-Audio: Multitask AudioLLMs with Mixture of Weak Encoders | paper |

| 2024-08 | Mini-Omni | Mini-Omni: Language Models Can Hear, Talk While Thinking in Streaming | paper |

| 2024-08 | Make-A-Voice 2 | Make-A-Voice: Revisiting Voice Large Language Models as Scalable Multilingual and Multitask Learner | paper |

| 2024-08 | LSLM | Language Model Can Listen While Speaking | paper |

| 2024-06 | SimpleSpeech | SimpleSpeech: Towards Simple and Efficient Text-to-Speech with Scalar Latent Transformer Diffusion Models | paper |

| 2024-06 | UniAudio 1.5 | UniAudio 1.5: Large Language Model-driven Audio Codec is A Few-shot Audio Task Learner | paper |

| 2024-06 | VALL-E R | VALL-E R: Robust and Efficient Zero-Shot Text-to-Speech Synthesis via Monotonic Alignment | paper |

| 2024-06 | VALL-E 2 | VALL-E 2: Neural Codec Language Models are Human Parity Zero-Shot Text to Speech Synthesizers | paper |

| 2024-06 | GPST | Generative Pre-trained Speech Language Model with Efficient Hierarchical Transformer | paper |

| 2024-04 | CLaM-TTS | CLaM-TTS: Improving Neural Codec Language Model for Zero-Shot Text-to-Speech | paper |

| 2024-04 | RALL-E | RALL-E: Robust Codec Language Modeling with Chain-of-Thought Prompting for Text-to-Speech Synthesis | paper |

| 2024-04 | WavLLM | WavLLM: Towards Robust and Adaptive Speech Large Language Model | paper |

| 2024-02 | MobileSpeech | MobileSpeech: A Fast and High-Fidelity Framework for Mobile Zero-Shot Text-to-Speech | paper |

| 2024-02 | SLAM-ASR | An Embarrassingly Simple Approach for LLM with Strong ASR Capacity | paper |

| 2024-02 | AnyGPT | AnyGPT: Unified Multimodal LLM with Discrete Sequence Modeling | paper |

| 2024-02 | SpiRit-LM | SpiRit-LM: Interleaved Spoken and Written Language Model | paper |

| 2024-02 | USDM | Integrating Paralinguistics in Speech-Empowered Large Language Models for Natural Conversation | paper |

| 2024-02 | BAT | BAT: Learning to Reason about Spatial Sounds with Large Language Models | paper |

| 2024-02 | Audio Flamingo | Audio Flamingo: A Novel Audio Language Model with Few-Shot Learning and Dialogue Abilities | paper |

| 2024-02 | Text Description to speech | Natural language guidance of high-fidelity text-to-speech with synthetic annotations | paper |

| 2024-02 | GenTranslate | GenTranslate: Large Language Models are Generative Multilingual Speech and Machine Translators | paper |

| 2024-02 | Base-TTS | BASE TTS: Lessons from building a billion-parameter Text-to-Speech model on 100K hours of data | paper |

| 2024-02 | -- | It's Never Too Late: Fusing Acoustic Information into Large Language Models for Automatic Speech Recognition | paper |

| 2024-01 | -- | Large Language Models are Efficient Learners of Noise-Robust Speech Recognition | paper |

| 2024-01 | ELLA-V | ELLA-V: Stable Neural Codec Language Modeling with Alignment-guided Sequence Reordering | paper |

| 2023-12 | Seamless | Seamless: Multilingual Expressive and Streaming Speech Translation | paper |

| 2023-11 | Qwen-Audio | Qwen-Audio: Advancing Universal Audio Understanding via Unified Large-Scale Audio-Language Models | paper |

| 2023-10 | LauraGPT | LauraGPT: Listen, Attend, Understand, and Regenerate Audio with GPT | paper |

| 2023-10 | SALMONN | SALMONN: Towards Generic Hearing Abilities for Large Language Models | paper |

| 2023-10 | UniAudio | UniAudio: An Audio Foundation Model Toward Universal Audio Generation | paper |

| 2023-10 | Whispering LLaMA | Whispering LLaMA: A Cross-Modal Generative Error Correction Framework for Speech Recognition | paper |

| 2023-09 | VoxtLM | VoxtLM: Unified Decoder-Only Models for Consolidating Speech Recognition/Synthesis and Speech/Text Continuation Tasks | paper |

| 2023-09 | LTU-AS | Joint Audio and Speech Understanding | paper |

| 2023-09 | SLM | SLM: Bridge the thin gap between speech and text foundation models | paper |

| 2023-09 | -- | Generative Speech Recognition Error Correction with Large Language Models and Task-Activating Prompting | paper |

| 2023-08 | SpeechGen | SpeechGen: Unlocking the Generative Power of Speech Language Models with Prompts | paper |

| 2023-08 | SpeechX | SpeechX: Neural Codec Language Model as a Versatile Speech Transformer | paper |

| 2023-08 | LLaSM | Large Language and Speech Model | paper |

| 2023-08 | SeamlessM4T | Massively Multilingual & Multimodal Machine Translation | paper |

| 2023-07 | Speech-LLaMA | On decoder-only architecture for speech-to-text and large language model integration | paper |

| 2023-07 | LLM-ASR(temp.) | Prompting Large Language Models with Speech Recognition Abilities | paper |

| 2023-06 | AudioPaLM | AudioPaLM: A Large Language Model That Can Speak and Listen | paper |

| 2023-05 | Make-A-Voice | Make-A-Voice: Unified Voice Synthesis With Discrete Representation | paper |

| 2023-05 | Spectron | Spoken Question Answering and Speech Continuation Using Spectrogram-Powered LLM | paper |

| 2023-05 | TWIST | Textually Pretrained Speech Language Models | paper |

| 2023-05 | Pengi | Pengi: An Audio Language Model for Audio Tasks | paper |

| 2023-05 | SoundStorm | Efficient Parallel Audio Generation | paper |

| 2023-05 | LTU | Listen, Think, and Understand | paper |

| 2023-05 | SpeechGPT | Empowering Large Language Models with Intrinsic Cross-Modal Conversational Abilities | paper |

| 2023-05 | VioLA | Unified Codec Language Models for Speech Recognition, Synthesis, and Translation | paper |

| 2023-05 | X-LLM | X-LLM: Bootstrapping Advanced Large Language Models by Treating Multi-Modalities as Foreign Languages | paper |

| 2023-03 | Google USM | Google USM: Scaling Automatic Speech Recognition Beyond 100 Languages | paper |

| 2023-03 | VALL-E X | Speak Foreign Languages with Your Own Voice: Cross-Lingual Neural Codec Language Modeling | paper |

| 2023-02 | SPEAR-TTS | Speak, Read and Prompt: High-Fidelity Text-to-Speech with Minimal Supervision | paper |

| 2023-01 | VALL-E | Neural Codec Language Models are Zero-Shot Text to Speech Synthesizers | paper |

| 2022-12 | Whisper | Robust Speech Recognition via Large-Scale Weak Supervision | paper |

| 2022-10 | AudioGen | AudioGen: Textually Guided Audio Generation | paper |

| 2022-09 | AudioLM | AudioLM: a Language Modeling Approach to Audio Generation | paper |

| 2022-05 | Wav2Seq | Wav2Seq: Pre-training Speech-to-Text Encoder-Decoder Models Using Pseudo Languages | paper |

| 2022-04 | Unit mBART | Enhanced Direct Speech-to-Speech Translation Using Self-supervised Pre-training and Data Augmentation | paper |

| 2022-03 | d-GSLM | Generative Spoken Dialogue Language Modeling | paper |

| 2021-10 | SLAM | SLAM: A Unified Encoder for Speech and Language Modeling via Speech-Text Joint Pre-Training | paper |

| 2021-09 | p-GSLM | Text-Free Prosody-Aware Generative Spoken Language Modeling | paper |

| 2021-02 | GSLM | Generative Spoken Language Modeling from Raw Audio | paper |

| Date | Model Name | Paper Title | Link |

|---|---|---|---|

| 2024-12 | TS3-Codec | TS3-Codec: Transformer-Based Simple Streaming Single Codec | paper |

| 2024-12 | FreeCodec | FreeCodec: A disentangled neural speech codec with fewer tokens | paper |

| 2024-12 | TAAE | Scaling Transformers for Low-Bitrate High-Quality Speech Coding | paper |

| 2024-11 | PyramidCodec | PyramidCodec: Hierarchical Codec for Long-form Music Generation in Audio Domain | paper |

| 2024-11 | UniCodec | Universal Speech Token Learning Via Low-Bitrate Neural Codec and Pretrained Representations | paper |

| 2024-11 | SimVQ | Addressing Representation Collapse in Vector Quantized Models with One Linear Layer | paper |

| 2024-11 | MDCTCodec | MDCTCodec: A Lightweight MDCT-based Neural Audio Codec towards High Sampling Rate and Low Bitrate Scenarios | paper |

| 2024-10 | APCodec+ | APCodec+: A Spectrum-Coding-Based High-Fidelity and High-Compression-Rate Neural Audio Codec with Staged Training Paradigm | paper |

| 2024-10 | - | A Closer Look at Neural Codec Resynthesis: Bridging the Gap between Codec and Waveform Generation | paper |

| 2024-10 | SNAC | SNAC: Multi-Scale Neural Audio Codec | paper |

| 2024-10 | LSCodec | LSCodec: Low-Bitrate and Speaker-Decoupled Discrete Speech Codec | paper |

| 2024-10 | Co-design for codec and codec-LM | Towards Codec-LM Co-design for Neural Codec Language Models | paper |

| 2024-10 | VChangeCodec | VChangeCodec: A High-efficiency Neural Speech Codec with Built-in Voice Changer for Real-time Communication | paper |

| 2024-10 | DC-Spin | DC-Spin: A Speaker-invariant Speech Tokenizer For Spoken Language Models | paper |

| 2024-10 | DM-Codec | DM-Codec: Distilling Multimodal Representations for Speech Tokenization | paper |

| 2024-09 | Mimi | Moshi: a speech-text foundation model for real-time dialogue | paper |

| 2024-09 | NDVQ | NDVQ: Robust Neural Audio Codec with Normal Distribution-Based Vector Quantization | paper |

| 2024-09 | SoCodec | SoCodec: A Semantic-Ordered Multi-Stream Speech Codec for Efficient Language Model Based Text-to-Speech Synthesis | paper |

| 2024-09 | BigCodec | BigCodec: Pushing the Limits of Low-Bitrate Neural Speech Codec | paper |

| 2024-08 | X-Codec | Codec Does Matter: Exploring the Semantic Shortcoming of Codec for Audio Language Model | paper |

| 2024-08 | WavTokenizer | WavTokenizer: an Efficient Acoustic Discrete Codec Tokenizer for Audio Language Modeling | paper |

| 2024-07 | Super-Codec | SuperCodec: A Neural Speech Codec with Selective Back-Projection Network | paper |

| 2024-07 | dMel | dMel: Speech Tokenization made Simple | paper |

| 2024-06 | CodecFake | CodecFake: Enhancing Anti-Spoofing Models Against Deepfake Audios from Codec-Based Speech Synthesis Systems | paper |

| 2024-06 | Single-Codec | Single-Codec: Single-Codebook Speech Codec towards High-Performance Speech Generation | paper |

| 2024-06 | SQ-Codec | SimpleSpeech: Towards Simple and Efficient Text-to-Speech with Scalar Latent Transformer Diffusion Models | paper |

| 2024-06 | PQ-VAE | Addressing Index Collapse of Large-Codebook Speech Tokenizer with Dual-Decoding Product-Quantized Variational Auto-Encoder | paper |

| 2024-06 | LLM-Codec | UniAudio 1.5: Large Language Model-driven Audio Codec is A Few-shot Audio Task Learner | paper |

| 2024-05 | HILCodec | HILCodec: High Fidelity and Lightweight Neural Audio Codec | paper |

| 2024-04 | SemantiCodec | SemantiCodec: An Ultra Low Bitrate Semantic Audio Codec for General Sound | paper |

| 2024-04 | PromptCodec | PromptCodec: High-Fidelity Neural Speech Codec using Disentangled Representation Learning based Adaptive Feature-aware Prompt Encoders | paper |

| 2024-04 | ESC | ESC: Efficient Speech Coding with Cross-Scale Residual Vector Quantized Transformers | paper |

| 2024-03 | FACodec | NaturalSpeech 3: Zero-Shot Speech Synthesis with Factorized Codec and Diffusion Models | paper |

| 2024-02 | AP-Codec | APCodec: A Neural Audio Codec with Parallel Amplitude and Phase Spectrum Encoding and Decoding | paper |

| 2024-02 | Language-Codec | Language-Codec: Reducing the Gaps Between Discrete Codec Representation and Speech Language Models | paper |

| 2024-01 | ScoreDec | ScoreDec: A Phase-preserving High-Fidelity Audio Codec with A Generalized Score-based Diffusion Post-filter | paper |

| 2023-11 | HierSpeech++ | HierSpeech++: Bridging the Gap between Semantic and Acoustic Representation of Speech by Hierarchical Variational Inference for Zero-shot Speech Synthesis | paper |

| 2023-10 | TiCodec | Fewer-Token Neural Speech Codec with Time-Invariant Codes | paper |

| 2023-09 | RepCodec | RepCodec: A Speech Representation Codec for Speech Tokenization | paper |

| 2023-09 | FunCodec | FunCodec: A Fundamental, Reproducible and Integrable Open-source Toolkit for Neural Speech Codec | paper |

| 2023-08 | SpeechTokenizer | SpeechTokenizer: Unified Speech Tokenizer for Speech Large Language Models | paper |

| 2023-06 | VOCOS | Vocos: Closing the Gap Between Time-Domain and Fourier-Based Neural Vocoders for High-Quality Audio Synthesis | paper |

| 2023-06 | Descript-audio-codec | High-Fidelity Audio Compression with Improved RVQGAN | paper |

| 2023-05 | AudioDec | AudioDec: An Open-source Streaming High-fidelity Neural Audio Codec | paper |

| 2023-05 | HiFi-Codec | HiFi-Codec: Group-residual Vector Quantization for High Fidelity Audio Codec | paper |

| 2023-03 | LMCodec | LMCodec: A Low Bitrate Speech Codec With Causal Transformer Models | paper |

| 2022-11 | Disen-TF-Codec | Disentangled Feature Learning for Real-Time Neural Speech Coding | paper |

| 2022-10 | EnCodec | High fidelity neural audio compression | paper |

| 2022-07 | S-TFNet | Cross-Scale Vector Quantization for Scalable Neural Speech Coding | paper |

| 2022-01 | TFNet | End-to-End Neural Speech Coding for Real-Time Communications | paper |

| 2021-07 | SoundStream | SoundStream: An End-to-End Neural Audio Codec | paper |

| Date | Model Name | Paper Title | Link |

|---|---|---|---|

| 2024-09 | NEST-RQ | NEST-RQ: Next Token Prediction for Speech Self-Supervised Pre-Training | paper |

| 2024-01 | EAT | Self-Supervised Pre-Training with Efficient Audio Transformer | paper |

| 2023-10 | MR-HuBERT | Multi-resolution HuBERT: Multi-resolution Speech Self-Supervised Learning with Masked Unit Prediction | paper |

| 2023-10 | SpeechFlow | Generative Pre-training for Speech with Flow Matching | paper |

| 2023-09 | WavLabLM | Joint Prediction and Denoising for Large-scale Multilingual Self-supervised Learning | paper |

| 2023-08 | W2v-BERT 2.0 | Massively Multilingual & Multimodal Machine Translation | paper |

| 2023-07 | Whisper-AT | Noise-Robust Automatic Speech Recognizers are Also Strong General Audio Event Taggers | paper |

| 2023-06 | ATST | Self-supervised Audio Teacher-Student Transformer for Both Clip-level and Frame-level Tasks | paper |

| 2023-05 | SPIN | Self-supervised Fine-tuning for Improved Content Representations by Speaker-invariant Clustering | paper |

| 2023-05 | DinoSR | Self-Distillation and Online Clustering for Self-supervised Speech Representation Learning | paper |

| 2023-05 | NFA | Self-supervised neural factor analysis for disentangling utterance-level speech representations | paper |

| 2022-12 | Data2vec 2.0 | Efficient Self-supervised Learning with Contextualized Target Representations for Vision, Speech and Language | paper |

| 2022-12 | BEATs | Audio Pre-Training with Acoustic Tokenizers | paper |

| 2022-11 | MT4SSL | MT4SSL: Boosting Self-Supervised Speech Representation Learning by Integrating Multiple Targets | paper |

| 2022-08 | DINO | Non-contrastive self-supervised learning of utterance-level speech representations | paper |

| 2022-07 | Audio-MAE | Masked Autoencoders that Listen | paper |

| 2022-04 | MAESTRO | Matched Speech Text Representations through Modality Matching | paper |

| 2022-03 | MAE-AST | Masked Autoencoding Audio Spectrogram Transformer | paper |

| 2022-03 | LightHuBERT | Lightweight and Configurable Speech Representation Learning with Once-for-All Hidden-Unit BERT | paper |

| 2022-02 | Data2vec | A General Framework for Self-supervised Learning in Speech, Vision and Language | paper |

| 2021-10 | WavLM | WavLM: Large-Scale Self-Supervised Pre-Training for Full Stack Speech Processing | paper |

| 2021-08 | W2v-BERT | Combining Contrastive Learning and Masked Language Modeling for Self-Supervised Speech Pre-Training | paper |

| 2021-07 | mHuBERT | Direct speech-to-speech translation with discrete units | paper |

| 2021-06 | HuBERT | Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units | paper |

| 2021-03 | BYOL-A | Self-Supervised Learning for General-Purpose Audio Representation | paper |

| 2020-12 | DeCoAR2.0 | DeCoAR 2.0: Deep Contextualized Acoustic Representations with Vector Quantization | paper |

| 2020-07 | TERA | TERA: Self-Supervised Learning of Transformer Encoder Representation for Speech | paper |

| 2020-06 | Wav2vec2.0 | wav2vec 2.0: A Framework for Self-Supervised Learning of Speech Representations | paper |

| 2019-10 | APC | Generative Pre-Training for Speech with Autoregressive Predictive Coding | paper |

| 2018-07 | CPC | Representation Learning with Contrastive Predictive Coding | paper |

Webpage. The challenge will cover today's neural audio codecs and speech/audio language models. Agenda: To be determined.

Professor Hung-yi Lee will be giving a talk as part of the Interspeech 2024 survey talk titled Challenges in Developing Spoken Language Models. The talk will cover today's speech/audio large language models.

I (Kai-Wei Chang) will be giving a talk as part of the ICASSP 2024 tutorial titled Parameter-Efficient and Prompt Learning for Speech and Language Foundation Models. The talk will cover today's speech/audio large language models. The slides from my presentation are available at https://kwchang.org/talks/. Please feel free to reach out to me for any discussions.

- https://github.com/liusongxiang/Large-Audio-Models

- https://github.com/kuan2jiu99/Awesome-Speech-Generation

- https://github.com/ga642381/Speech-Prompts-Adapters

- https://github.com/voidful/Codec-SUPERB

- https://github.com/huckiyang/awesome-neural-reprogramming-prompting

If you find this repository useful, please consider citing the following papers.

@article{wu2024codec,

title={Codec-SUPERB@ SLT 2024: A lightweight benchmark for neural audio codec models},

author={Wu, Haibin and Chen, Xuanjun and Lin, Yi-Cheng and Chang, Kaiwei and Du, Jiawei and Lu, Ke-Han and Liu, Alexander H and Chung, Ho-Lam and Wu, Yuan-Kuei and Yang, Dongchao and others},

journal={arXiv preprint arXiv:2409.14085},

year={2024}

}

@inproceedings{wu-etal-2024-codec,

title = "Codec-{SUPERB}: An In-Depth Analysis of Sound Codec Models",

author = "Wu, Haibin and

Chung, Ho-Lam and

Lin, Yi-Cheng and

Wu, Yuan-Kuei and

Chen, Xuanjun and

Pai, Yu-Chi and

Wang, Hsiu-Hsuan and

Chang, Kai-Wei and

Liu, Alexander and

Lee, Hung-yi",

editor = "Ku, Lun-Wei and

Martins, Andre and

Srikumar, Vivek",

booktitle = "Findings of the Association for Computational Linguistics: ACL 2024",

month = aug,

year = "2024",

address = "Bangkok, Thailand",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2024.findings-acl.616",

doi = "10.18653/v1/2024.findings-acl.616",

pages = "10330--10348",

}

@article{wu2023speechgen,

title={Speechgen: Unlocking the generative power of speech language models with prompts},

author={Wu, Haibin and Chang, Kai-Wei and Wu, Yuan-Kuei and Lee, Hung-yi},

journal={arXiv preprint arXiv:2306.02207},

year={2023}

}

@article{wu2024towards,

title={Towards audio language modeling-an overview},

author={Wu, Haibin and Chen, Xuanjun and Lin, Yi-Cheng and Chang, Kai-wei and Chung, Ho-Lam and Liu, Alexander H and Lee, Hung-yi},

journal={arXiv preprint arXiv:2402.13236},

year={2024}

}