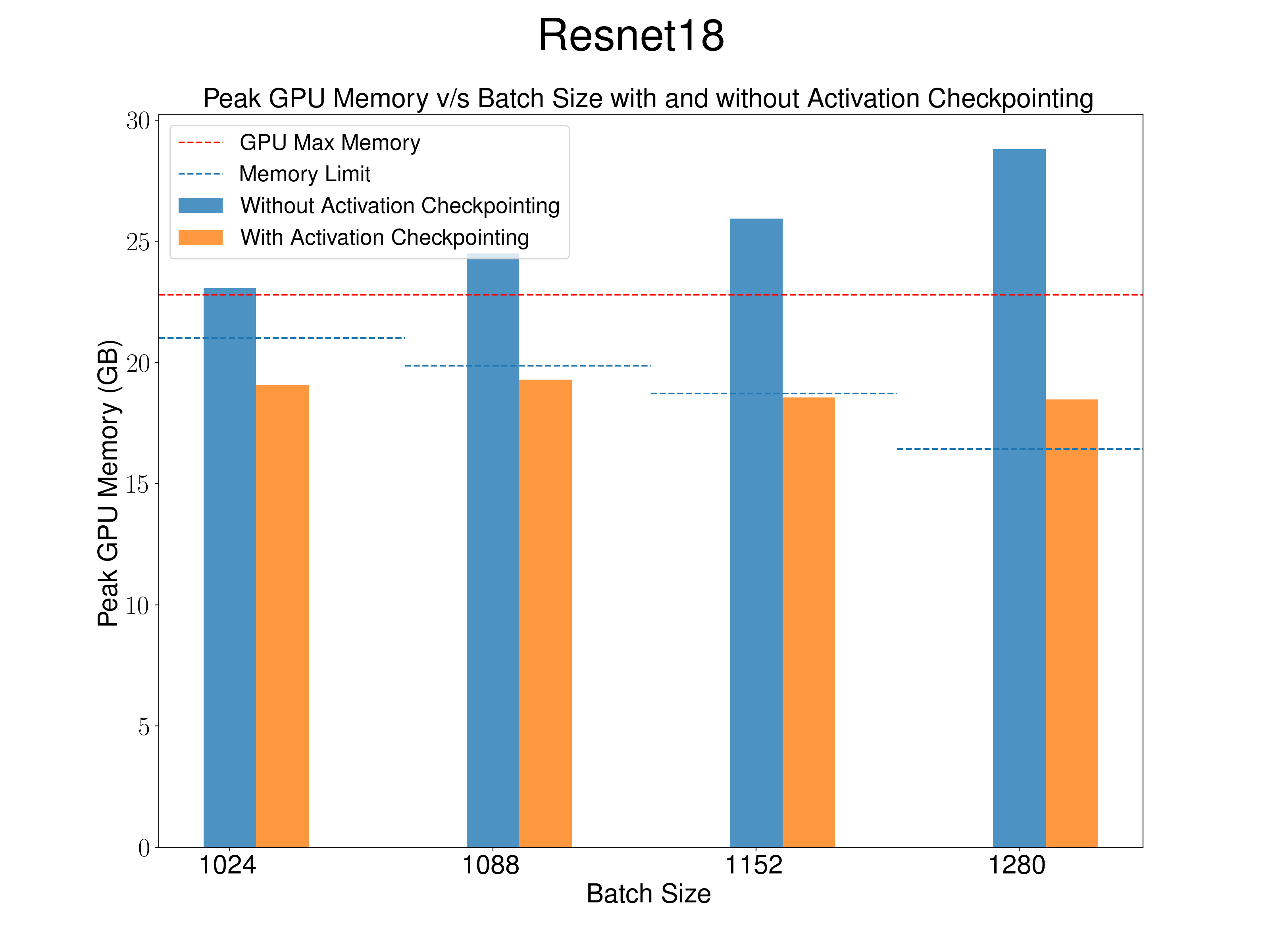

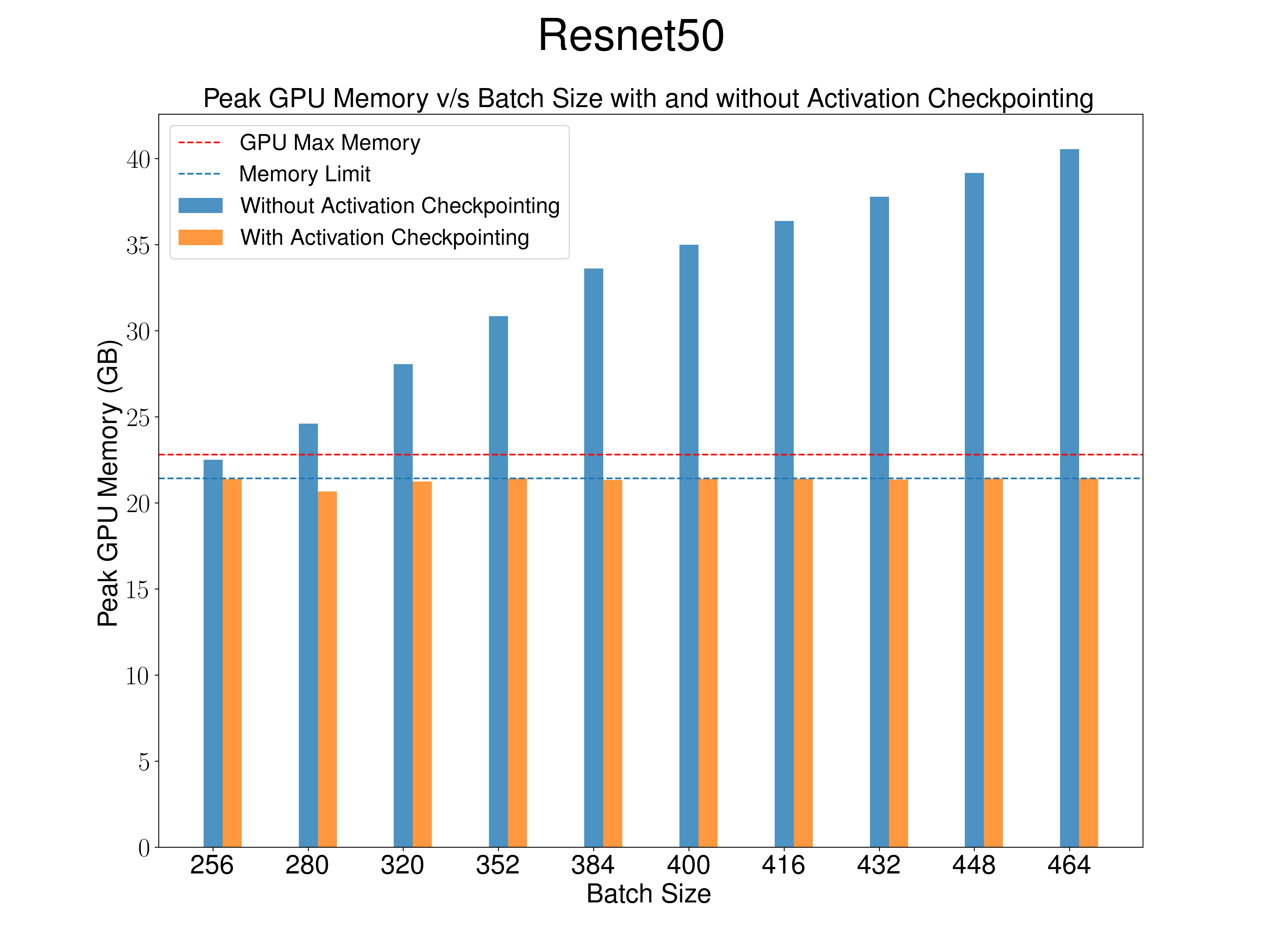

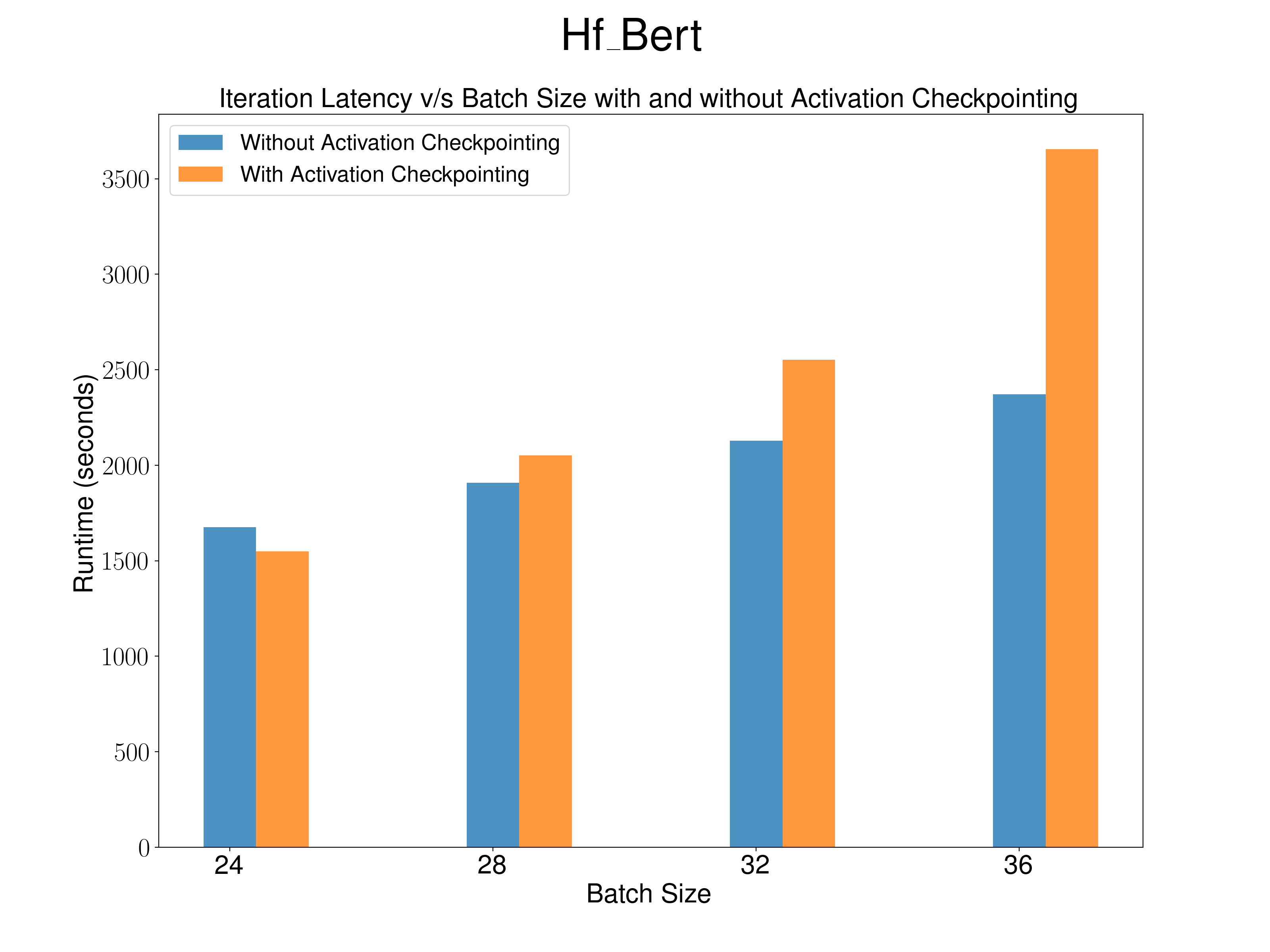

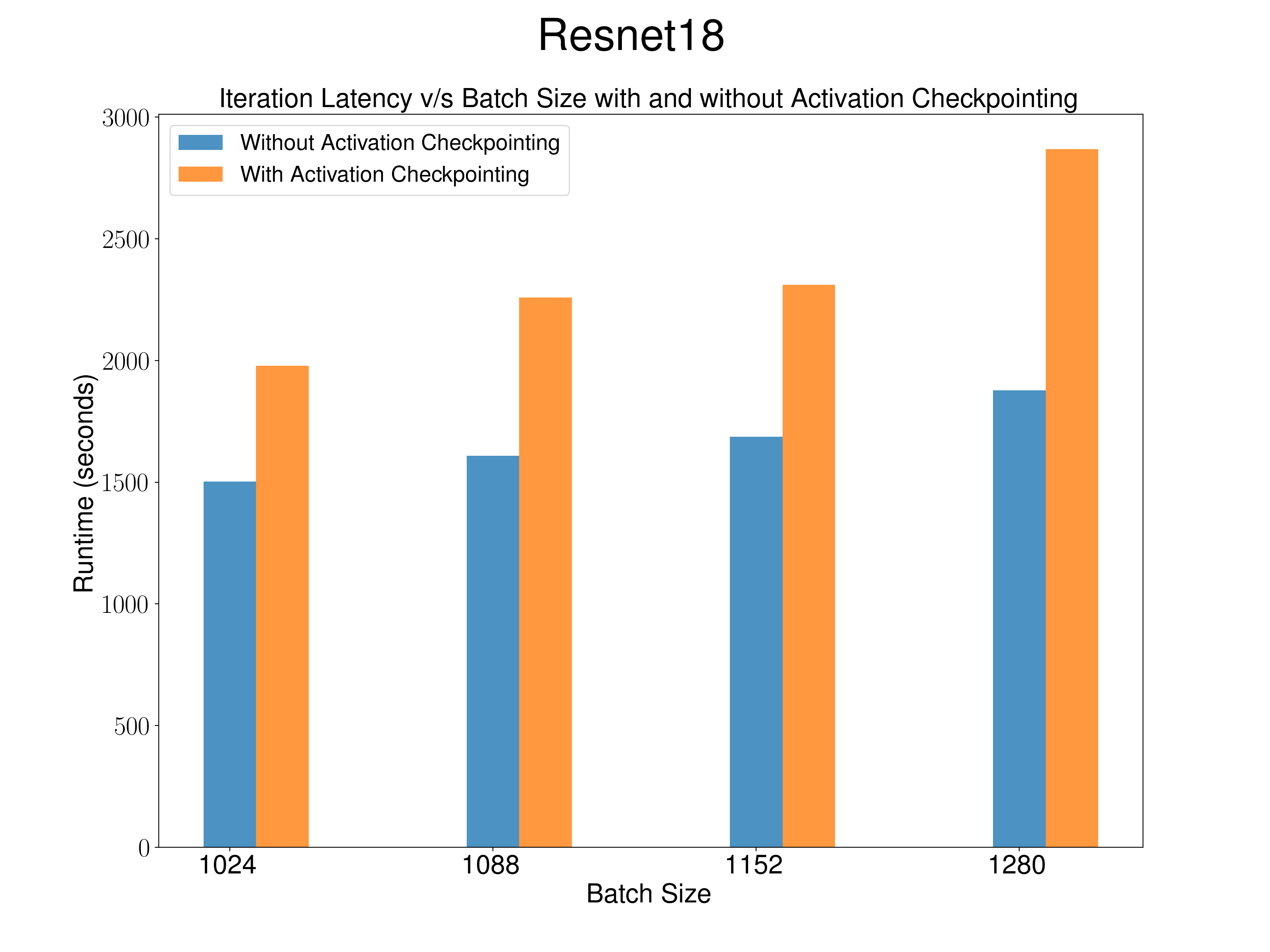

An implementation of an activation checkpointing algorithm that scales deep learning training workloads beyond the batch sizes whose full memory footprint (including intermediate activations) fits on a single GPU.
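The README does not spell out the algorithm, so what follows is only a toy, pure-Python sketch of the general activation checkpointing trade-off. It is not this repository's implementation. It assumes a hypothetical model whose "layers" are scalar functions paired with their derivatives. The hypothetical `grad_full` saves every layer input for the backward pass. The hypothetical `grad_checkpointed` saves only every `seg`-th input and recomputes the others one segment at a time during the backward pass.

```python
import math
from typing import Callable, List, Tuple

# Hypothetical toy model: each "layer" is a scalar function paired with its
# derivative, so the chain rule can be applied by hand.
Layer = Tuple[Callable[[float], float], Callable[[float], float]]


def grad_full(layers: List[Layer], x: float) -> Tuple[float, int]:
    """Baseline: save every layer's input activation for the backward pass."""
    saved = []
    a = x
    for f, _ in layers:
        saved.append(a)
        a = f(a)
    grad = 1.0
    for i in reversed(range(len(layers))):
        grad *= layers[i][1](saved[i])  # chain rule: multiply local derivatives
    return grad, len(saved)


def grad_checkpointed(layers: List[Layer], x: float, seg: int) -> Tuple[float, int]:
    """Save only the input of every `seg`-th layer and recompute the rest.

    Returns the gradient and the peak number of activations held for backward.
    """
    n = len(layers)
    checkpoints = {}
    a = x
    for i, (f, _) in enumerate(layers):
        if i % seg == 0:
            checkpoints[i] = a  # first input of each segment is kept
        a = f(a)  # all other activations are discarded after use
    grad = 1.0
    peak = 0
    end = n
    for start in sorted(checkpoints, reverse=True):
        # Re-run the forward pass for this segment only, from its checkpoint.
        local = [checkpoints[start]]
        for i in range(start, end - 1):
            local.append(layers[i][0](local[-1]))
        # Held right now: remaining checkpoints plus recomputed activations.
        peak = max(peak, len(checkpoints) + len(local) - 1)
        for i in reversed(range(start, end)):
            grad *= layers[i][1](local[i - start])
        del checkpoints[start]  # segment finished, so its checkpoint is freed
        end = start
    return grad, peak


layers = [(lambda v: math.sin(v) + 1.1 * v, lambda v: math.cos(v) + 1.1)] * 16
g_full, m_full = grad_full(layers, 0.3)
g_ckpt, m_ckpt = grad_checkpointed(layers, 0.3, seg=4)
print(m_full, m_ckpt)  # 16 7
print(math.isclose(g_full, g_ckpt))  # True
```

With 16 layers and segments of 4, the gradient does not change. At most 7 activations are held instead of 16. The cost is one extra forward pass over each segment. A common heuristic for balancing memory against recomputation is to choose `seg` near the square root of the network depth.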

Clone the repositories and install TorchBench:

```shell
git clone https://github.com/harsha070/cs265-mlsys-2024.git
git clone https://github.com/pytorch/benchmark
pip install -e benchmark/
# Deletes line 935 of the installed transformers BERT model file. The path assumes
# Python 3.10 with a system-wide dist-packages directory (as on Colab); adjust as needed.
# In a notebook, prefix each command with `!`.
sed -i '935d' /usr/local/lib/python3.10/dist-packages/transformers/models/bert/modeling_bert.py
```

Enable operator fusion in the optimizer:

- In `~/benchmark/torchbenchmark/util/framework/huggingface/basic_configs.py`, lines 309-314, add `fused=True` to the optimizer kwargs.
- In `~/benchmark/torchbenchmark/util/framework/vision/model_factory.py`, line 39, add `fused=True` to the optimizer kwargs.

To benchmark a model, run one of the following:

```shell
python cs265-mlsys-2024/benchmarks.py torchbenchmark.models.hf_Bert.Model 24
python cs265-mlsys-2024/benchmarks.py torchbenchmark.models.resnet18.Model 1024
python cs265-mlsys-2024/benchmarks.py torchbenchmark.models.resnet50.Model 256
```