
Hardening: FireFly Hardening & Performance Testing - umbrella issue #316

Comments

Ethereum Throughput and Latency Measurement

Goals:

Please note that the initial goal of this exercise is not to slam FireFly with as much RPS as possible. It's much more important for us to be confident in FireFly's performance under somewhat normal conditions.

Interfaces targeted:

Desirable metrics:

Test Scenarios:

Tools under consideration:

Here's an overview of the tooling being used so far. perf.go is a small script that utilizes vegeta to simulate load. Currently, it focuses on two categories of metrics:

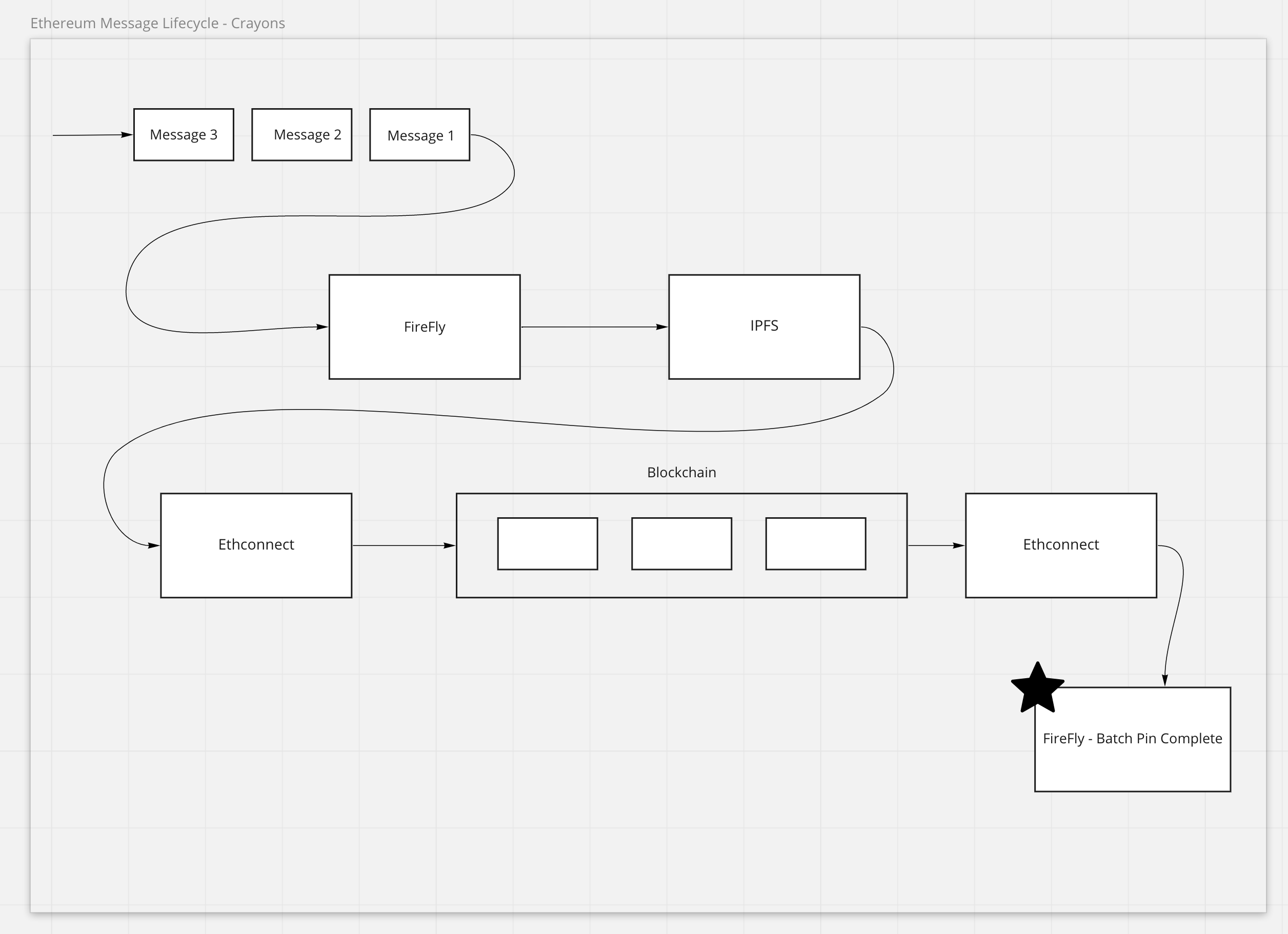

For reference, all testing is currently being done on a 2-node FireFly network generated by the FireFly CLI, using Postgres as the DB layer.

Here are some example outputs from:

10 RPS:

20 RPS:

30 RPS:

40 RPS:

50 RPS:

The current goal is to identify what is causing the long delay between the last message being sent and pending transactions reaching 0.

Below is a diagram of the lifecycle of a pinned message in FireFly:

The starred box, "FireFly - Batch Pin Complete", is where batches from confirmed transactions are sequentially processed in FireFly. In the 50 RPS test, a batch was taking ~4 seconds to process, with ~120 messages per batch. The bulk of those 4 seconds was spent making DB queries for each message, with each message taking roughly 25 ms to process.

@peterbroadhurst is investigating a way to reduce processing time by limiting the number of DB queries per message.

With @peterbroadhurst's changes in #325, batch processing is roughly 4x faster: each batch now takes about 1 second to fully process, down from about 4 seconds.

Here are some comparison results for context. All benchmarks were run on my MBP with a CLI-generated 2-node FireFly environment, using Postgres as the DB:

Before #325, we were consistently seeing > 1 minute latency between the last sent message and 0 pending transactions.

With #325:

Batch processing time was consistently reduced to <= 1 second, down from ~4 seconds.

Closing out initial broadcast tests here.

Seeing some good results with pinned private messages

Now, the focus is on testing tokens. After discussion with @awrichar, we've decided on the following order of tests:

Currently, token transactions are not batched before being submitted to ethconnect, so the results at high RPS should be interesting.

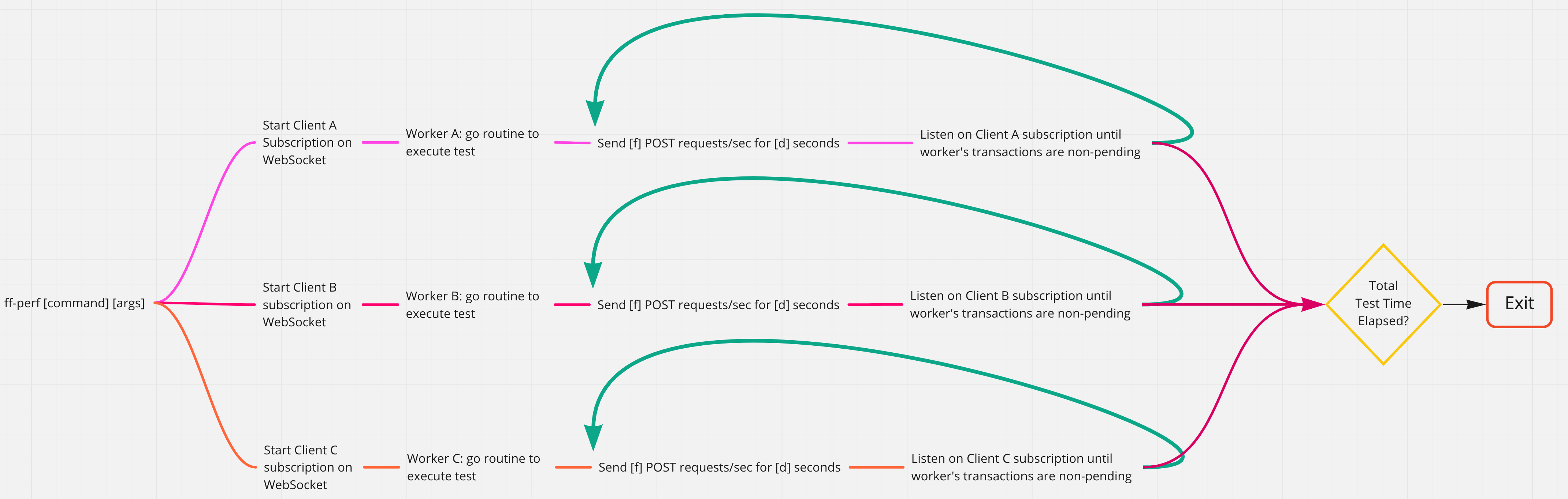

The above-mentioned perf.go has turned into an official CLI tool 🎉. firefly-perf-cli is the HTTP load testing tool we are using for performance testing & hardening.

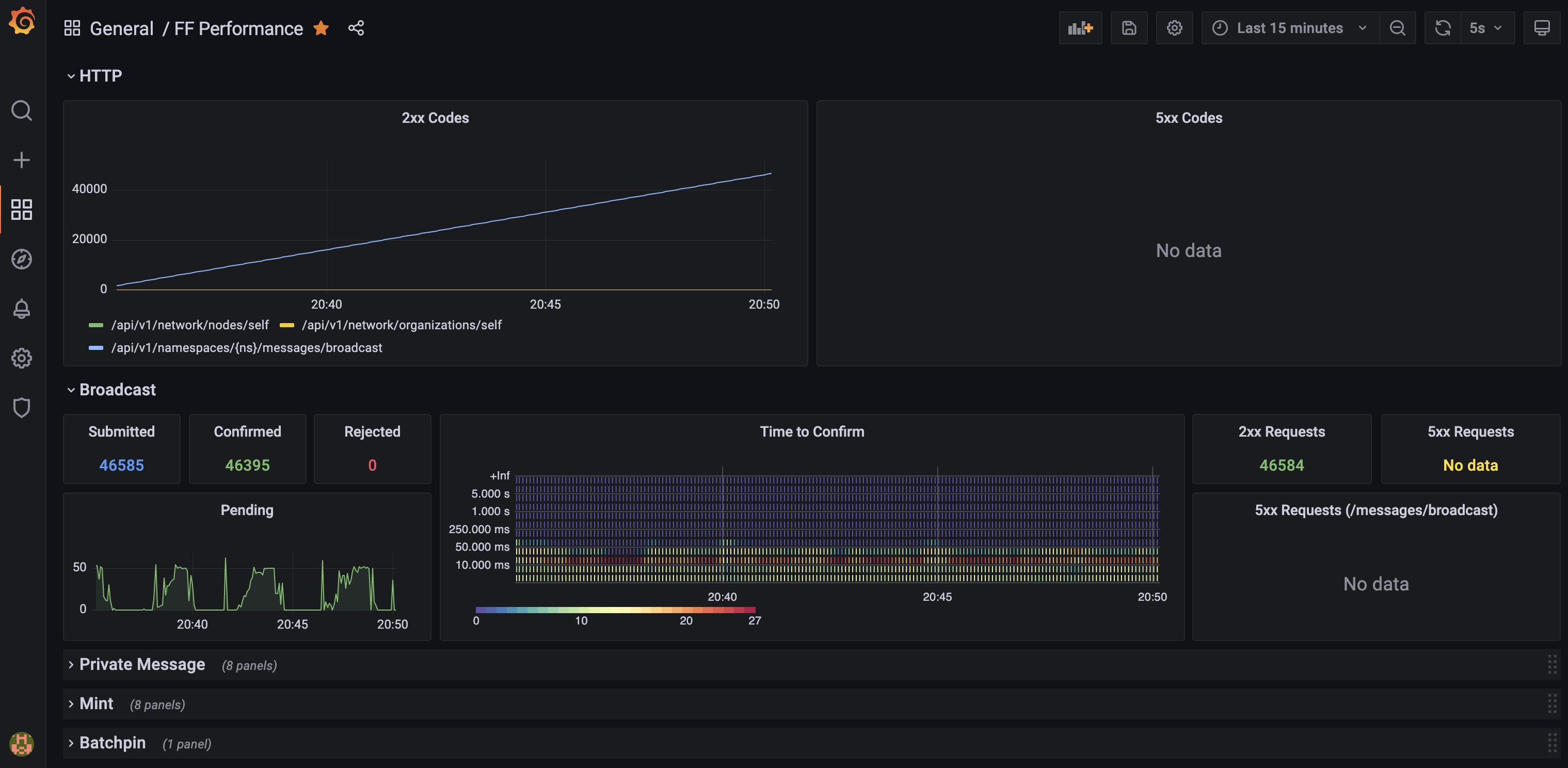

Since the creation of firefly-perf-cli, improvements have been made to performance testing mechanics. These include utilizing goroutines to kick off

Architecture

Grafana Dashboard - Broadcasts (50 tx/sec)

Would be great to put this architecture diagram into the README when you get a chance 🚀 🤖

#376 - Database change event queue gets exhausted (Closed)

#380 - Database begin transaction failed: pq: too many clients already (Closed)

#382 - Error from data exchange - request entity too large

#383 - Token transfer has already been recorded

#406 - Mismatched nexthash or author (Fabric) |

The 1.0 milestone #117 includes a number of performance tests on FireFly core.

They are:

This issue will track the ongoing efforts to complete these per protocol.
