This application (Knowledge Base QA using RAG pipeline) is ported from chatchat-space/Langchain-Chatchat to run on Intel CPU and GPU (e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max) using IPEX-LLM.

See the demo of running Langchain-Chatchat (Knowledge Base QA using RAG pipeline) on Intel Core Ultra laptop using ipex-llm below. If you have any issues or suggestions, please submit them to the IPEX-LLM Project.

You can change the UI language in the left-side menu. We currently support English and 简体中文 (see video demos below).

| English | 简体中文 |
| --- | --- |
| Langchain-chatchat-en.mp4 | Langchain-chatchat-chs.mp4 |

The following sections describe how to install and run Langchain-Chatchat on systems equipped with Intel CPUs or GPUs, using the CPU/GPU to run both the LLMs and the embedding models.

See the Langchain-Chatchat architecture below (source).

Follow the guide that corresponds to your specific system and device type from the links provided below:

- For systems with Intel Core Ultra integrated GPU: Windows Guide | Linux Guide

- For systems with Intel Arc A-Series GPU: Windows Guide | Linux Guide

- For systems with Intel Data Center Max Series GPU: Linux Guide

- For systems with Xeon-Series CPU: Linux Guide

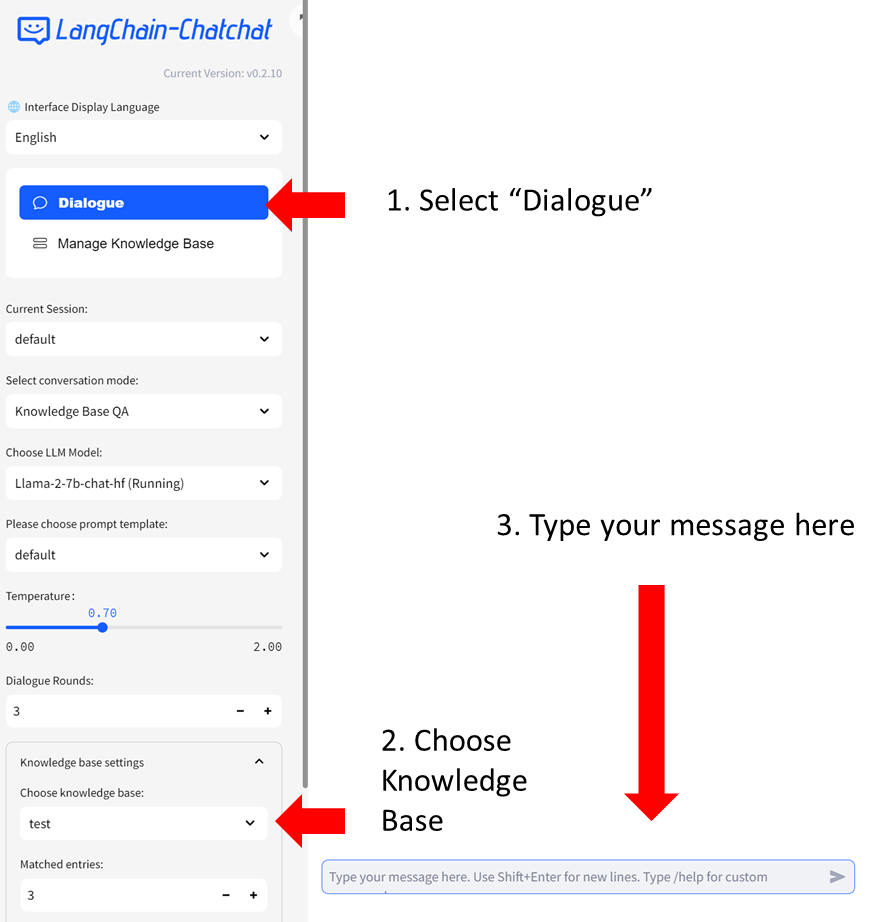

To start chatting with LLMs, simply type your messages in the textbox at the bottom of the UI.

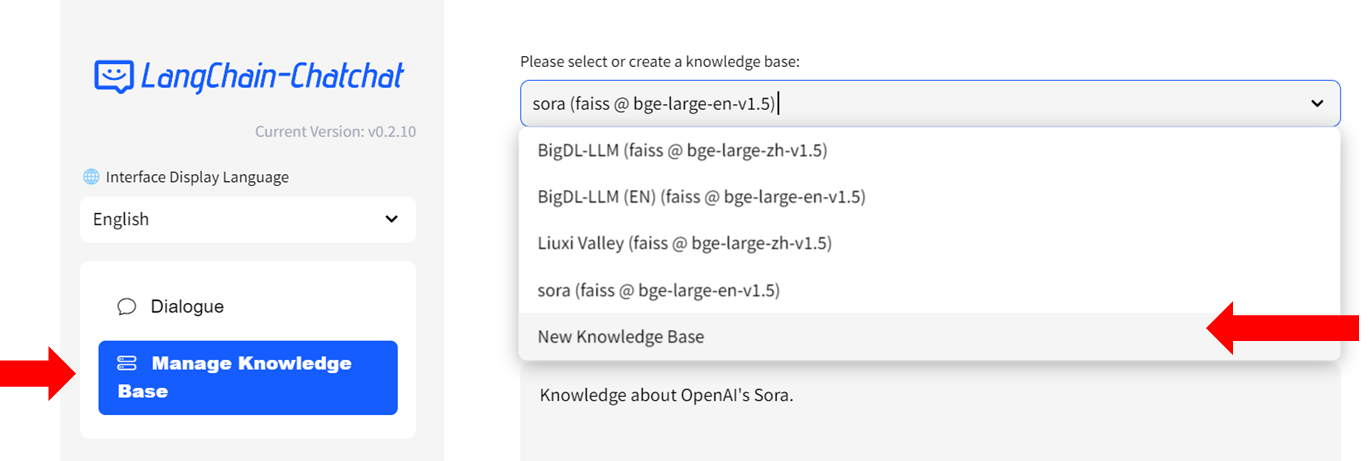

- Select

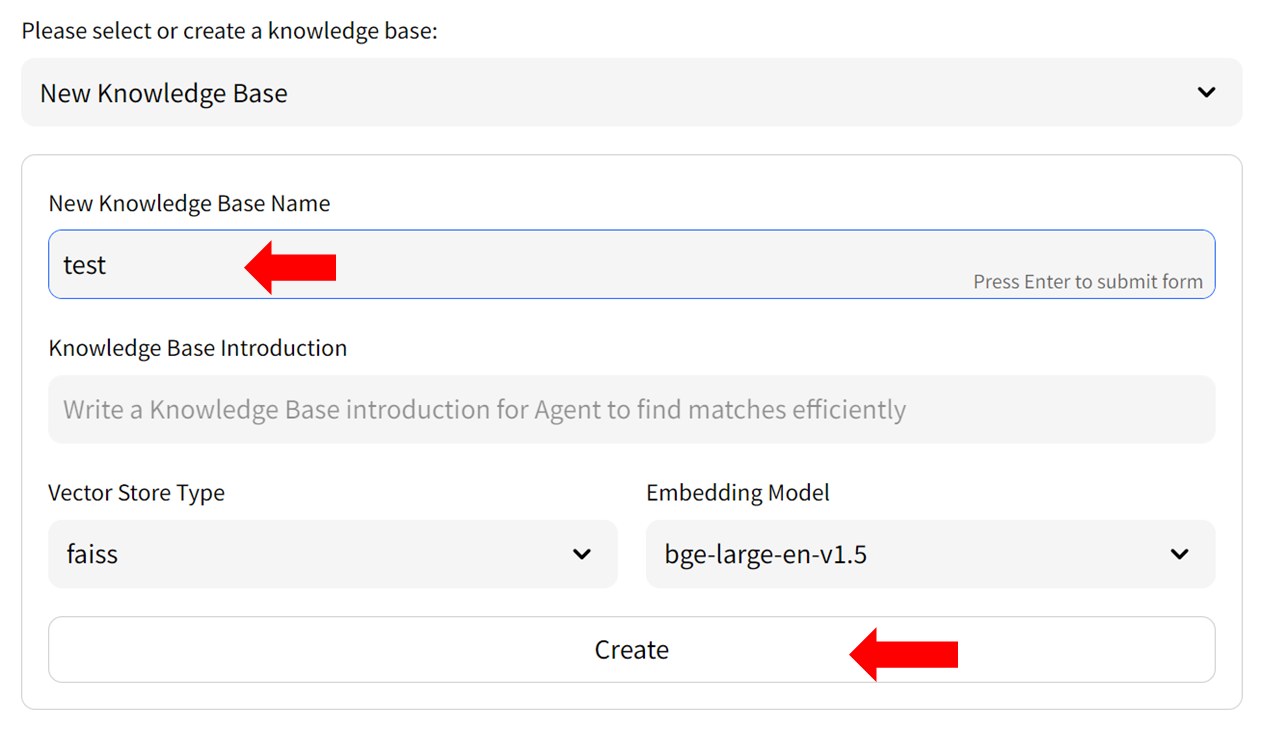

Manage Knowledge Basefrom the menu on the left, then chooseNew Knowledge Basefrom the dropdown menu on the right side. - Fill in the name of your new knowledge base (example: "test") and press the

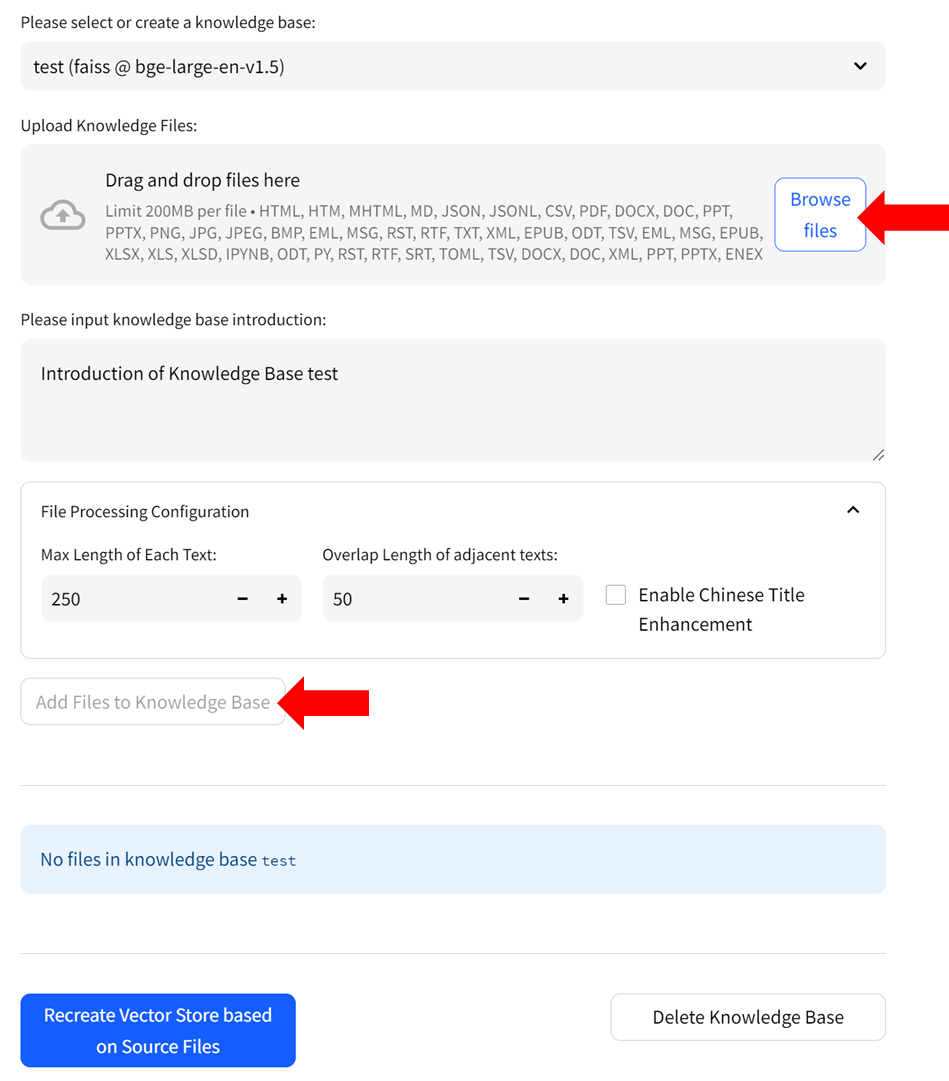

Createbutton. Adjust any other settings as needed. - Upload knowledge files from your computer and allow some time for the upload to complete. Once finished, click on

Add files to Knowledge Basebutton to build the vector store. Note: this process may take several minutes.

You can now click Dialogue in the left-side menu to return to the chat UI. Then, in the Knowledge base settings menu, choose the knowledge base you just created, e.g., "test". Now you can start chatting.
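To make the steps above more concrete, here is a toy sketch of what "building a vector store" and then chatting against it amounts to conceptually. It is not how Langchain-Chatchat or IPEX-LLM implement it: the word-count `embed` function stands in for a real embedding model, and every name in the block (`build_vector_store`, `retrieve`, `build_prompt`) is hypothetical and chosen only for illustration.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_vector_store(chunks):
    """Index step: keep each text chunk next to its vector."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(store, question, top_k=1):
    """Query step: return the top_k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


def build_prompt(context_chunks, question):
    """Combine retrieved context and the question into one prompt for the LLM."""
    context = "\n".join(context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical knowledge-base content, split into two chunks.
chunks = [
    "IPEX-LLM runs large language models on Intel CPUs and GPUs.",
    "The knowledge base named test stores uploaded documents.",
]
store = build_vector_store(chunks)
question = "Which hardware does IPEX-LLM run on?"
top = retrieve(store, question)
prompt = build_prompt(top, question)
print(prompt)
```

In the real application the embedding model and the LLM both run on the Intel CPU/GPU, and the index step is the part that can take several minutes for large files.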

For more information about how to use Langchain-Chatchat, refer to the official Quickstart guide in English or Chinese, or to the Wiki.

Ensure that you have installed `ipex-llm>=2.1.0b20240612`. To upgrade `ipex-llm`, use

    pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/us/

or

    pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/cn/

In the left-side menu, you have the option to choose a prompt template. There are several pre-defined templates: those ending with '_cn' are Chinese templates, and those ending with '_en' are English templates. You can also define your own prompt templates in `configs/prompt_config.py`. Remember to restart the service for these changes to take effect.
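If you are unsure whether the installed `ipex-llm` meets the minimum version mentioned above, a small script along the following lines may help. It is a minimal sketch that uses only the Python standard library and assumes version strings of the form `X.Y.Z` or `X.Y.ZbN` (such as `2.1.0b20240612`); the helper names are hypothetical, and the third-party `packaging` library is the more robust choice for full PEP 440 version handling.

```python
import re
from importlib import metadata

MIN_VERSION = "2.1.0b20240612"

# Assumption: versions look like "2.1.0" or "2.1.0b20240612".
_VERSION_RE = re.compile(r"^(\d+)\.(\d+)\.(\d+)(?:b(\d+))?$")


def parse_version(text):
    """Turn a version string into a tuple that compares in release order."""
    match = _VERSION_RE.match(text.strip())
    if match is None:
        raise ValueError(f"unsupported version format: {text!r}")
    major, minor, patch, beta = match.groups()
    # A final release (no beta tag) sorts after every beta of the same release.
    is_final = beta is None
    return (int(major), int(minor), int(patch), is_final, int(beta or 0))


def meets_minimum(installed, minimum=MIN_VERSION):
    """Return True if `installed` is at least `minimum`."""
    return parse_version(installed) >= parse_version(minimum)


def installed_ipex_llm_version():
    """Return the installed ipex-llm version string, or None if it is not installed."""
    try:
        return metadata.version("ipex-llm")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_ipex_llm_version()
    if version is None:
        print("ipex-llm is not installed")
    else:
        try:
            ok = meets_minimum(version)
        except ValueError:
            print(f"ipex-llm {version}: version format not handled by this sketch")
        else:
            status = "OK" if ok else f"too old, need >= {MIN_VERSION}"
            print(f"ipex-llm {version}: {status}")
```

Run it in the same Python environment that starts the Langchain-Chatchat service, since a different environment may have a different `ipex-llm` installed.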