This is the code for the paper Pixel-in-Pixel Net: Towards Efficient Facial Landmark Detection in the Wild. We propose a novel facial landmark detector, PIPNet, that is fast, accurate, and robust. PIPNet can be trained under two settings: (1) supervised learning; (2) generalizable semi-supervised learning (GSSL). With GSSL, PIPNet achieves better cross-domain generalization by utilizing massive amounts of unlabeled data across domains.

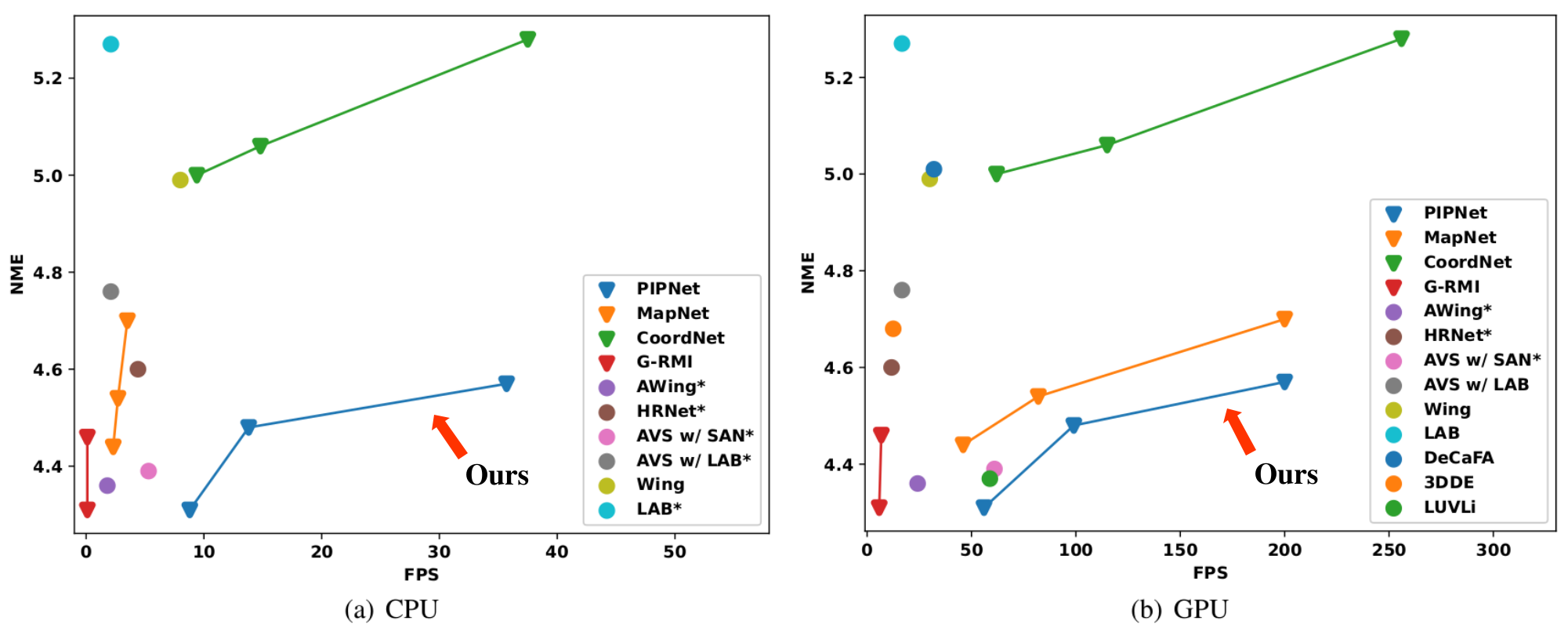

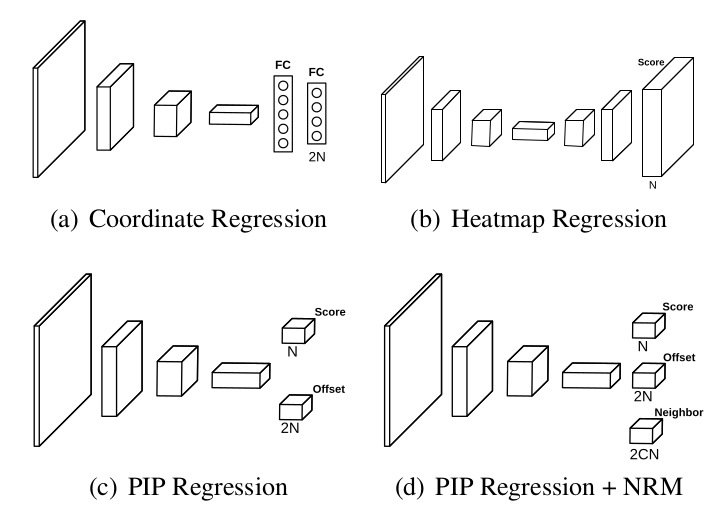

Figure 1. Comparison with existing methods on the speed-accuracy tradeoff, tested on the WFLW full test set (closer to the bottom-right corner is better).

Figure 2. Comparison of different detection heads.

- Install Python3 and PyTorch >= v1.1

- Clone this repository: `git clone https://github.com/jhb86253817/PIPNet.git`

- Install the dependencies in `requirements.txt`: `pip install -r requirements.txt`

- We use a modified version of FaceBoxes as the face detector, so go to folder `FaceBoxesV2/utils` and run `sh make.sh` to build the NMS module.

- Go back to folder `PIPNet` and create two empty folders, `logs` and `snapshots`. For PIPNets, you can download our trained models from here and put them under folder `snapshots/DATA_NAME/EXPERIMENT_NAME/`.

- Edit `run_demo.sh` to choose the config file and input source you want, then run `sh run_demo.sh`. We support image, video, and camera as the input. Some sample predictions can be seen as follows.

- PIPNet-ResNet18 trained on WFLW, with image `images/1.jpg` as the input:

- PIPNet-ResNet18 trained on WFLW, with a snippet from Shaolin Soccer as the input:

- PIPNet-ResNet18 trained on WFLW, with video `videos/002.avi` as the input:

- PIPNet-ResNet18 trained on 300W+CelebA (GSSL), with video `videos/007.avi` as the input:
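The folder setup in the steps above (`logs`, `snapshots`, and `snapshots/DATA_NAME/EXPERIMENT_NAME/`) can also be scripted. The following is a minimal sketch, assuming it is run from the `PIPNet` folder; the helper name `prepare_workspace` is hypothetical and not part of the repository, and `WFLW` / `EXPERIMENT_NAME` are placeholders for the dataset and experiment of the model you downloaded.

```python
from pathlib import Path


def prepare_workspace(root: Path, data_name: str, experiment_name: str) -> Path:
    """Create `logs`, `snapshots`, and the snapshot sub-folder for one model.

    Hypothetical helper that mirrors the manual steps; it is not part of PIPNet.
    Returns the folder where a downloaded trained model should be placed.
    """
    (root / "logs").mkdir(parents=True, exist_ok=True)
    snapshot_dir = root / "snapshots" / data_name / experiment_name
    # parents=True also creates `snapshots` and `snapshots/<data_name>`;
    # exist_ok=True makes repeated calls harmless.
    snapshot_dir.mkdir(parents=True, exist_ok=True)
    return snapshot_dir


if __name__ == "__main__":
    # Placeholder names: substitute your own DATA_NAME and EXPERIMENT_NAME.
    target = prepare_workspace(Path("."), "WFLW", "EXPERIMENT_NAME")
    print(f"Put the downloaded model under: {target}")
```

Because both `mkdir` calls use `exist_ok=True`, the sketch can be re-run safely after models for several experiments have been added.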

Supervised learning datasets: 300W, COFW, WFLW, AFLW, LaPa

- Download the datasets from official sources, then put them under folder `data`. The folder structure should look like this:

  - `PIPNet`
    - `FaceBoxesV2`
    - `lib`
    - `experiments`
    - `logs`
    - `snapshots`
    - `data`
      - `data_300W`
        - `afw`
        - `helen`
        - `ibug`
        - `lfpw`
      - `COFW`
        - `COFW_train_color.mat`
        - `COFW_test_color.mat`
      - `WFLW`
        - `WFLW_images`
        - `WFLW_annotations`
      - `AFLW`
        - `flickr`
        - `AFLWinfo_release.mat`
      - `LaPa`
        - `train`
        - `val`
        - `test`

- Go to folder `lib`, preprocess a dataset by running `python preprocess.py DATA_NAME`. For example, to process 300W:

  `python preprocess.py data_300W`

- Go back to folder `PIPNet`, edit `run_train.sh` to choose the config file you want. Then, train the model by running:

  `sh run_train.sh`
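Before running `preprocess.py`, it can help to confirm that `data` matches the folder structure listed for the supervised setting. A minimal sketch, assuming it is run from the `PIPNet` folder; `EXPECTED_LAYOUT` and `missing_entries` are hypothetical names transcribed from that listing, not part of the repository, and only the existence of each path is checked (not file contents).

```python
from pathlib import Path
from typing import List

# Expected entries under `data/`, transcribed from the folder structure
# of the supervised learning setting.
EXPECTED_LAYOUT = {
    "data_300W": ["afw", "helen", "ibug", "lfpw"],
    "COFW": ["COFW_train_color.mat", "COFW_test_color.mat"],
    "WFLW": ["WFLW_images", "WFLW_annotations"],
    "AFLW": ["flickr", "AFLWinfo_release.mat"],
    "LaPa": ["train", "val", "test"],
}


def missing_entries(data_root: Path, dataset: str) -> List[str]:
    """Return the expected paths of `dataset` that do not exist under `data_root`."""
    return [
        f"{dataset}/{entry}"
        for entry in EXPECTED_LAYOUT[dataset]
        if not (data_root / dataset / entry).exists()
    ]


if __name__ == "__main__":
    data_root = Path("data")  # assumes the working directory is `PIPNet`
    for name in EXPECTED_LAYOUT:
        missing = missing_entries(data_root, name)
        status = "ok" if not missing else "missing " + ", ".join(missing)
        print(f"{name}: {status}")
```

Only the datasets you actually plan to preprocess need to report `ok`.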

GSSL datasets:

- data_300W_COFW_WFLW: 300W + COFW-68 (unlabeled) + WFLW-68 (unlabeled)

- data_300W_CELEBA: 300W + CelebA (unlabeled)
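In both GSSL settings, 300W is the only labeled source; the settings differ only in the unlabeled data. A small sketch encoding that composition, assuming nothing beyond the two bullets above; `GsslSetting`, `GSSL_SETTINGS`, and `describe` are hypothetical names used for illustration only.

```python
from typing import Dict, List, NamedTuple


class GsslSetting(NamedTuple):
    """Labeled and unlabeled sources of one GSSL setting (hypothetical structure)."""
    labeled: List[str]
    unlabeled: List[str]


# Transcribed from the two GSSL settings listed above.
GSSL_SETTINGS: Dict[str, GsslSetting] = {
    "data_300W_COFW_WFLW": GsslSetting(labeled=["300W"], unlabeled=["COFW-68", "WFLW-68"]),
    "data_300W_CELEBA": GsslSetting(labeled=["300W"], unlabeled=["CelebA"]),
}


def describe(name: str) -> str:
    """Summarize which sources of a setting are labeled and which are unlabeled."""
    setting = GSSL_SETTINGS[name]
    return f"{name}: labeled={'+'.join(setting.labeled)}, unlabeled={'+'.join(setting.unlabeled)}"


if __name__ == "__main__":
    for setting_name in GSSL_SETTINGS:
        print(describe(setting_name))
```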

- Download 300W, COFW, and WFLW as in the supervised learning setting. Download annotations of COFW-68 test from here. For 300W+CelebA, you also need to download the in-the-wild CelebA images from here, and the face bounding boxes detected by us. The folder structure should look like this:

  - `PIPNet`
    - `FaceBoxesV2`
    - `lib`
    - `experiments`
    - `logs`
    - `snapshots`
    - `data`
      - `data_300W`
        - `afw`
        - `helen`
        - `ibug`
        - `lfpw`
      - `COFW`
        - `COFW_train_color.mat`
        - `COFW_test_color.mat`
      - `WFLW`
        - `WFLW_images`
        - `WFLW_annotations`
      - `data_300W_COFW_WFLW`
        - `cofw68_test_annotations`
        - `cofw68_test_bboxes.mat`
      - `CELEBA`
        - `img_celeba`
        - `celeba_bboxes.txt`
      - `data_300W_CELEBA`
        - `cofw68_test_annotations`
        - `cofw68_test_bboxes.mat`

- Go to folder `lib`, preprocess a dataset by running `python preprocess_gssl.py DATA_NAME`. To process data_300W_COFW_WFLW, run `python preprocess_gssl.py data_300W_COFW_WFLW`. To process data_300W_CELEBA, run `python preprocess_gssl.py CELEBA` and `python preprocess_gssl.py data_300W_CELEBA`.

- Go back to folder `PIPNet`, edit `run_train.sh` to choose the config file you want. Then, train the model by running:

  `sh run_train.sh`
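The GSSL preprocessing commands can be collected per setting and run in sequence. A minimal sketch, assuming it is launched from the `PIPNet` folder (so scripts run with `cwd="lib"`) and assuming `CELEBA` should be processed before `data_300W_CELEBA`, in the order the two commands are listed; `PREPROCESS_ARGS`, `preprocess_commands`, and `run` are hypothetical names, and the default is a dry run that only prints the commands.

```python
import subprocess
import sys
from typing import Dict, List

# preprocess_gssl.py arguments per GSSL setting, in the order they are listed.
# Assumption: CELEBA is processed before data_300W_CELEBA.
PREPROCESS_ARGS: Dict[str, List[str]] = {
    "data_300W_COFW_WFLW": ["data_300W_COFW_WFLW"],
    "data_300W_CELEBA": ["CELEBA", "data_300W_CELEBA"],
}


def preprocess_commands(setting: str) -> List[List[str]]:
    """Build the command lines for one GSSL setting, using the current interpreter."""
    return [[sys.executable, "preprocess_gssl.py", arg] for arg in PREPROCESS_ARGS[setting]]


def run(setting: str, dry_run: bool = True) -> None:
    """Print each command and, unless dry_run is set, execute it inside `lib`."""
    for cmd in preprocess_commands(setting):
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError and stops at the first failing step.
            subprocess.run(cmd, check=True, cwd="lib")


if __name__ == "__main__":
    run("data_300W_CELEBA", dry_run=True)
```

Passing `dry_run=False` executes the commands; stopping on the first failure avoids building `data_300W_CELEBA` on top of an incomplete `CELEBA` step.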

- Edit `run_test.sh` to choose the config file you want. Then, test the model by running:

  `sh run_test.sh`

- lite.ai.toolkit: Provides MNN C++, NCNN C++, TNN C++, and ONNXRuntime C++ versions of PIPNet.

- torchlm: Provides a PyTorch re-implementation of PIPNet with ONNX export; it can be installed with pip.

@article{JLS21,
  title={Pixel-in-Pixel Net: Towards Efficient Facial Landmark Detection in the Wild},
  author={Haibo Jin and Shengcai Liao and Ling Shao},
  journal={International Journal of Computer Vision},
  publisher={Springer Science and Business Media LLC},
  ISSN={1573-1405},
  url={http://dx.doi.org/10.1007/s11263-021-01521-4},
  DOI={10.1007/s11263-021-01521-4},
  year={2021},
  month={Sep}
}

We thank the following great works: