rtfm is a Python library for research on tabular foundation models (RTFM).

rtfm is the library used to train TabuLa-8B,

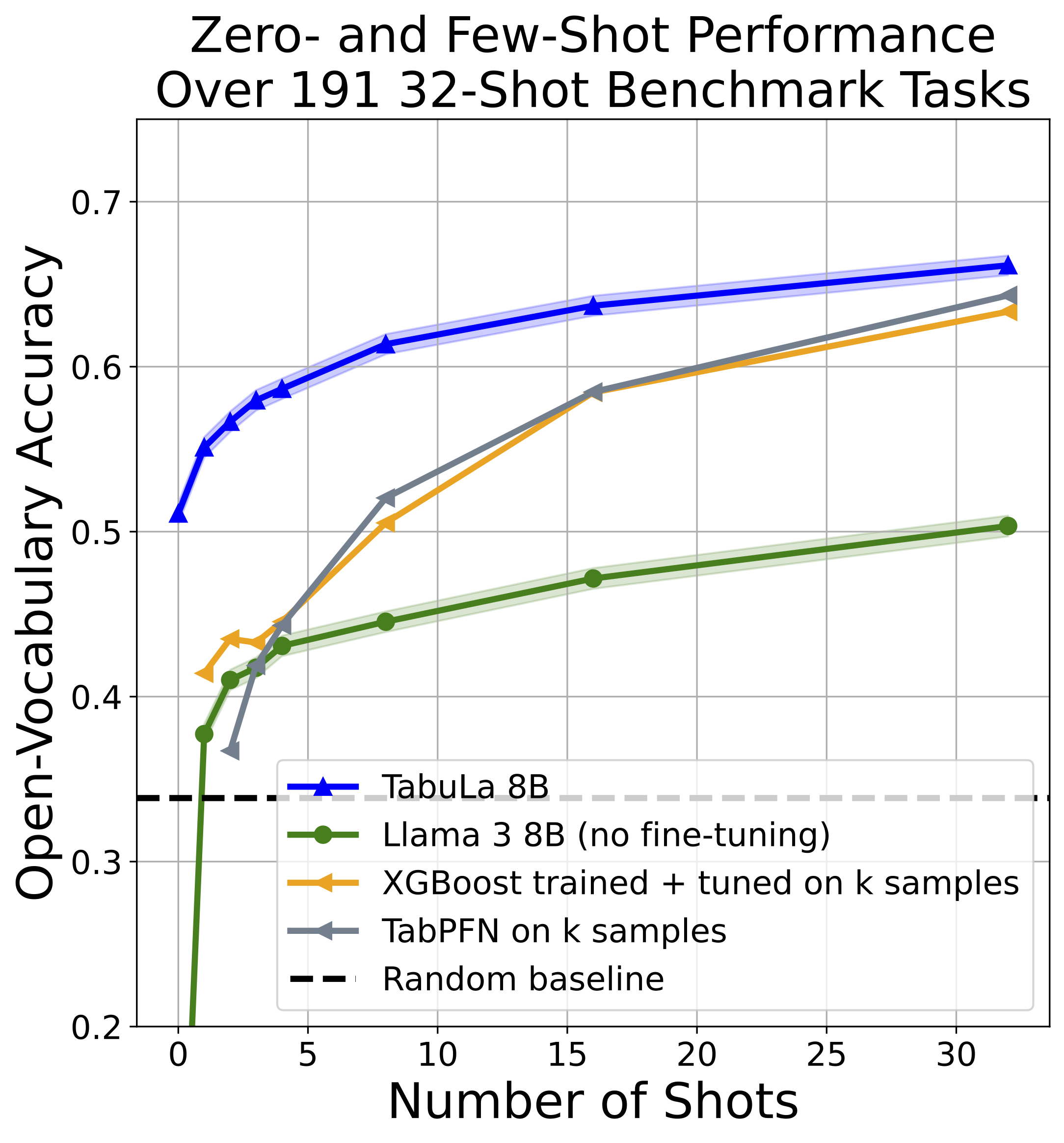

a state-of-the-art model for zero- and few-shot tabular data prediction described in our paper

"Large Scale Transfer Learning for Tabular Data via Language Modeling".

You can also use rtfm to train your own tabular language models.

rtfm has been used to train 7B- and 8B-parameter Llama 2 and Llama 3 language models,
and supports advanced and efficient training methodologies such as fully sharded data parallel (FSDP),
multinode training, and 16-bit training with bf16.
In the future, we plan to support additional base language models and larger scales;
currently, support for larger Llama models exists but should be considered experimental.
We do not currently support other (non-Llama) language models.

We recommend use of the provided conda environment. You can set it up with:

conda env create -f environment.yml
pip install --no-deps git+https://github.com/mlfoundations/tableshift.git

If you want to explore the model interactively or try it on your own unlabelled data, the best way is to use the inference.ipynb notebook in notebooks. This notebook shows how to create simple DataFrames and use them for inference.

The notebook above is our recommended default for users interested in trying out TabuLa-8B. For more fine-grained control over your inference (e.g. changing the system prompt used at inference time), you can use inference_utils.infer_on_example().

Once you have set up your environment, you can train models using the Python script at scripts/finetune.py.

As an example, to conduct a training run with a small toy model, run:

python -m rtfm.finetune \
  --train-task-file "./sampledata/v6.0.3-serialized/test/test-files.txt" \
  --eval-task-file "./sampledata/v6.0.3-serialized/train/train-files.txt" \
  --run_validation "False" \
  --use_wandb "False" \
  --warmup_steps 1 \
  --num_workers_dataloader 0 \
  --max_steps 10 \
  --model_name "yujiepan/llama-2-tiny-random" \
  --save_checkpoint_root_dir "checkpoints" \
  --run_name "my_model_dir" \
  --save_model \
  --save_optimizer

This will conduct a short training run and save the model and optimizer state to
checkpoints/my_model_dir-llama-2-tiny-random.

To train a Llama3-8B model instead of the toy model in this example, replace yujiepan/llama-2-tiny-random
with meta-llama/Meta-Llama-3-8B.
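If you launch runs from Python rather than a shell, the command above can be assembled as an argument list so that the model name is a parameter. The following is a minimal sketch, not part of rtfm: the helper name `build_finetune_command` is hypothetical, and every flag is copied from the example command above.

```python
import shlex
from typing import List


def build_finetune_command(model_name: str,
                           run_name: str = "my_model_dir",
                           max_steps: int = 10) -> List[str]:
    """Return the argv for the toy training run, with a configurable model.

    Hypothetical helper: the flags mirror the example command in this README.
    """
    return [
        "python", "-m", "rtfm.finetune",
        "--train-task-file", "./sampledata/v6.0.3-serialized/test/test-files.txt",
        "--eval-task-file", "./sampledata/v6.0.3-serialized/train/train-files.txt",
        "--run_validation", "False",
        "--use_wandb", "False",
        "--warmup_steps", "1",
        "--num_workers_dataloader", "0",
        "--max_steps", str(max_steps),
        "--model_name", model_name,
        "--save_checkpoint_root_dir", "checkpoints",
        "--run_name", run_name,
        "--save_model",
        "--save_optimizer",
    ]


# Swap the toy model for Llama 3 8B, as described above.
cmd = build_finetune_command("meta-llama/Meta-Llama-3-8B")

# Print a copy-pasteable shell command; nothing is executed here.
print(shlex.join(cmd))
```

To actually start the run, pass `cmd` to `subprocess.run(cmd, check=True)` from the repository root.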

To evaluate a model, we recommend using the Python script at scripts/evaluate_checkpoint.py.

In order to evaluate against any dataset, we recommend first preparing the dataset using
the provided script scripts/utils/prepare_csv_for_eval.py:

python scripts/utils/prepare_csv_for_eval.py --output_dir ./eval_tasks/my_task

prepare_csv_for_eval.py prescribes how the data in the CSV is serialized at evaluation time
by defining a FeatureList and YAML file describing the
features and prediction task, respectively. By default, prepare_csv_for_eval.py processes data the same way as the
evaluations for TabuLa-8B. If desired, you can write a custom script to control
the creation of the FeatureList and YAML files to change how data is serialized.

Once the data is prepared, run evaluation via:

USER_CONFIG_DIR=./eval_tasks/ \
  python scripts/evaluate_checkpoint.py \
  --eval-task-names "my_task" \
  --model_name "yujiepan/llama-2-tiny-random" \
  --resume "checkpoints/my_model_dir-llama-2-tiny-random" \
  --eval_max_samples 16 \
  --context_length 2048 \
  --pack_samples "False" \
  --num_shots 1 \
  --outfile "tmp.csv"

This will write a file to tmp.csv containing evaluation results.

If you want to evaluate the pretrained released TabuLa-8B model,
set --model_name to mlfoundations/tabula-8b and remove the --resume flag or set it to the empty string.

You can create an environment to reproduce or run experiments via rtfm by using conda:

conda env create -f environment.yml

Once you've set up your environment, you need to add your Hugging Face token in order to access the Llama weights. To do this, you can run

huggingface-cli login

or manually set the token via

export HF_TOKEN=your_token

To test your setup (on any machine, no GPUs required), you can run the following command:

sh scripts/tests/train_tiny_local_test.sh

This section gives an example of how to train a model from a set of parquet files.

The model expects sets of serialized records stored in .tar files, which are in webdataset format.
To serialize a set of parquet files, we provide the script serialize_interleave_and_shuffle.py
(located at rtfm/pipelines/serialize_interleave_and_shuffle.py):

python -m rtfm.pipelines.serialize_interleave_and_shuffle \
  --input-dir /glob/containing/parquet/files/ \
  --output-dir ./serialized/v6.0.3/ \
  --max_tables 64 \
  --serializer_cls "BasicSerializerV2"

The recommended way to store training data is in a newline-delimited list of webdataset files.
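To make the storage layout concrete, the sketch below writes a tiny webdataset-style .tar shard, records it in a newline-delimited list file, and reads it back using only the standard library. It relies on the general webdataset convention that tar members sharing a name up to the first dot belong to one sample. The record contents, the `txt` field name, and the shard file name are hypothetical placeholders and are not the actual output of serialize_interleave_and_shuffle.py.

```python
import io
import os
import tarfile
import tempfile
from collections import defaultdict


def write_shard(path, samples):
    """Write (key, {extension: bytes}) samples into a tar shard."""
    with tarfile.open(path, "w") as tar:
        for key, fields in samples:
            for ext, payload in fields.items():
                info = tarfile.TarInfo(name=f"{key}.{ext}")
                info.size = len(payload)
                tar.addfile(info, io.BytesIO(payload))


def read_shard(path):
    """Group tar members into samples by the name before the first dot."""
    grouped = defaultdict(dict)
    with tarfile.open(path, "r") as tar:
        for member in tar.getmembers():
            if member.isfile():
                key, _, ext = member.name.partition(".")
                grouped[key][ext] = tar.extractfile(member).read()
    return dict(grouped)


workdir = tempfile.mkdtemp()
shard_path = os.path.join(workdir, "shard-000000.tar")  # hypothetical name

# Hypothetical records; real shards hold serialized table rows.
samples = [
    ("record-0", {"txt": b"hypothetical serialized record 0"}),
    ("record-1", {"txt": b"hypothetical serialized record 1"}),
]
write_shard(shard_path, samples)

# The list file holds one shard path per line.
list_path = os.path.join(workdir, "train-files.txt")
with open(list_path, "w") as f:
    f.write(shard_path + "\n")

# Read the list back and load the first shard it names.
with open(list_path) as f:
    shards = [line.strip() for line in f if line.strip()]
records = read_shard(shards[0])
print(sorted(records))
```

In practice you would read shards with the webdataset library rather than tarfile directly; the standard-library version is used here only to show the file layout.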

The serialization command above will automatically generate sets of training, validation (train-eval), and test
files, where the train-eval split comprises unseen rows from tables in the training split,
and the test split comprises only unseen tables.
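The difference between the train-eval and test splits can be illustrated with a toy example. The sketch below is not the logic used by rtfm; it is a minimal stand-in under assumed split fractions (`test_table_frac` and `holdout_row_frac` are hypothetical parameters) that holds out whole tables for test and a few rows of each remaining table for train-eval.

```python
import random


def split_tables(tables, test_table_frac=0.25, holdout_row_frac=0.2, seed=0):
    """Split {table_name: [rows]} into train, train-eval, and test.

    - test: whole tables that never appear in training
    - train-eval: held-out rows from tables that do appear in training
    """
    rng = random.Random(seed)
    names = sorted(tables)
    rng.shuffle(names)

    # Hold out entire tables for the test split.
    n_test = max(1, int(len(names) * test_table_frac))
    test_names, train_names = names[:n_test], names[n_test:]

    train, train_eval = {}, {}
    test = {name: list(tables[name]) for name in test_names}
    for name in train_names:
        rows = list(tables[name])
        rng.shuffle(rows)
        # Hold out a few rows of each training table for train-eval.
        n_holdout = max(1, int(len(rows) * holdout_row_frac))
        train_eval[name] = rows[:n_holdout]
        train[name] = rows[n_holdout:]
    return train, train_eval, test


# Four toy tables with ten rows each.
toy = {f"table_{i}": [f"t{i}_r{j}" for j in range(10)] for i in range(4)}
train, train_eval, test = split_tables(toy)

print("train tables:     ", sorted(train))
print("train-eval tables:", sorted(train_eval))
print("test tables:      ", sorted(test))
```

Train-eval performance therefore measures generalization to new rows of familiar tables, while test performance measures transfer to tables the model has never seen.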

Some datasets may be too large to store on disk during training.

rtfm supports using files stored on AWS S3.

To use files hosted on S3, you need to move the training data there and update the text files produced
by rtfm/pipelines/serialize_interleave_and_shuffle.py to point to the correct location.
You can do this with sed. For example, the command below replaces the local training location
with the S3 path on every line of a text file:

sed 's|/path/to/sampledata/|s3://rtfm-hub/tablib/serialized/v0-testdata/|g' traineval-files.txt > traineval-files-s3.txt

If you plan to use local data, you can use the files produced as the output
of serialize_interleave_and_shuffle.py (train-files.txt, traineval-files.txt, test-files.txt).
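If sed is not available, the same prefix rewrite for S3-hosted data can be done in Python. This is a minimal sketch: the helper name `rewrite_prefix` and the shard file names in the demo are hypothetical, while the two prefixes are taken from the sed example.

```python
import os
import tempfile
from pathlib import Path


def rewrite_prefix(src, dst, old_prefix, new_prefix):
    """Copy a newline-delimited file list, replacing old_prefix on every line."""
    lines = Path(src).read_text().splitlines()
    rewritten = [line.replace(old_prefix, new_prefix) for line in lines]
    Path(dst).write_text("\n".join(rewritten) + "\n")
    return rewritten


# Demo on a throwaway file list with hypothetical shard names.
workdir = tempfile.mkdtemp()
src = os.path.join(workdir, "traineval-files.txt")
dst = os.path.join(workdir, "traineval-files-s3.txt")
Path(src).write_text(
    "/path/to/sampledata/shard-000000.tar\n"
    "/path/to/sampledata/shard-000001.tar\n"
)

rewritten = rewrite_prefix(
    src,
    dst,
    old_prefix="/path/to/sampledata/",
    new_prefix="s3://rtfm-hub/tablib/serialized/v0-testdata/",
)
print("\n".join(rewritten))
```

Like the sed command, this writes a new file and leaves the original list untouched, so you can keep both local and S3 variants side by side.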

The recommended way to launch a training job is via finetune.py. You can do this, for example, via:

python -m rtfm.finetune \
  --train-task-file "./sampledata/v6.0.3-serialized/test/test-files.txt" \
  --eval-task-file "./sampledata/v6.0.3-serialized/train/train-files.txt" \
  --run_validation "False" \
  --use_wandb "False" \
  --warmup_steps 1 \
  --num_workers_dataloader 0 \
  --max_steps 10 \
  --model_name "yujiepan/llama-2-tiny-random" \
  --save_checkpoint_root_dir "checkpoints" \
  --run_name "my_model_dir" \
  --save_model \
  --save_optimizer

See finetune.py and the associated configuration classes in rtfm.configs and rtfm.arguments
for more options to control the details of training.

Some additional resources relevant to RTFM:

- Our paper, "Large Scale Transfer Learning for Tabular Data via Language Modeling"

- The t4 dataset on Hugging Face (used to train TabuLa-8B)

- The TabuLa-8B evaluation suite data on Hugging Face

- The TabuLa-8B model on Hugging Face

- tabliblib, a toolkit for filtering TabLib