In this work, we propose a modified version of Inverse Kinodynamic Learning for safe slippage and tight turning in autonomous drifting. We show that the model is effective for loose drifting trajectories. However, we also find that tight trajectories degrade the model's performance, and the vehicle undershoots the trajectory at test time. We demonstrate that careful data evaluation is an essential part of learning an inverse kinodynamic function, and that the architecture needed for success can be kept simple.

This work could become a stepping stone toward the simplest and most effective ways to drift autonomously in a life-or-death situation. Future work should focus on collecting more robust data and on incorporating additional inertial readings (e.g., other IMU axes) and other sensor data (e.g., a RealSense camera or LiDAR). We have open-sourced the entire project to support these efforts, and we hope to explore these ideas further beyond this paper.

We denote

In the paper that inspired our work, the general goal is to learn the function

We can denote

Our goal is to learn the function approximator

Given enough real-world observations:

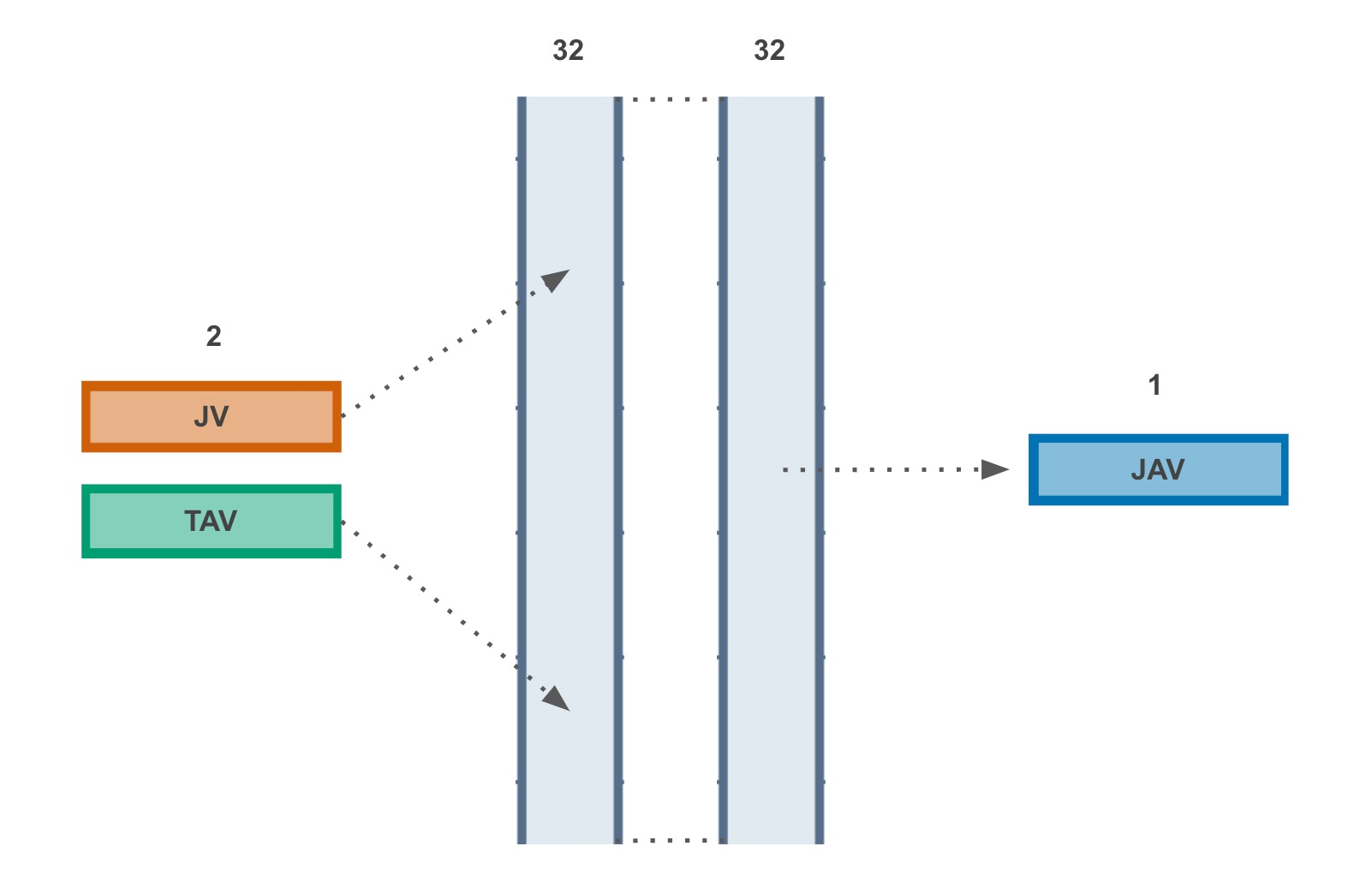
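For concreteness, the fitting step can be written as a regression problem. This is a sketch under assumptions: we assume a mean-squared-error objective, and the symbols $v_i$ (joystick velocity), $\omega_i^{\text{IMU}}$ (ground-truth IMU angular velocity), $\omega_i^{\text{joy}}$ (joystick angular velocity), $f_\theta$ (the network), and $\omega^{\text{des}}$ (the desired angular velocity supplied at test time) are introduced here for illustration only.

$$
\mathcal{D} = \left\{ \left(v_i,\ \omega_i^{\text{IMU}},\ \omega_i^{\text{joy}}\right) \right\}_{i=1}^{N},
\qquad
\theta^{\ast} = \arg\min_{\theta} \frac{1}{N} \sum_{i=1}^{N} \left( f_{\theta}\!\left(v_i, \omega_i^{\text{IMU}}\right) - \omega_i^{\text{joy}} \right)^{2}
$$

$$
\omega^{\text{joy}}_{\text{cmd}} = f_{\theta^{\ast}}\!\left(v, \omega^{\text{des}}\right)
$$

The second line is the inverse use of the model: instead of the angular velocity the vehicle actually achieved, we supply the one we want it to achieve, and the network returns the joystick command expected to produce it.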

At training time, we feed two inputs into our neural network: the joystick velocity and the ground-truth angular velocity measured by the vehicle's onboard IMU. The network outputs the predicted joystick angular velocity. The trained network is our learned function approximator; at test time it serves as the inverse kinodynamic model and gives us the desired control, a corrected joystick angular velocity.
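To make the training and test-time roles concrete, here is a minimal, hypothetical sketch in plain Python. It is not our released implementation: the class `TinyIKD`, the helpers `make_synthetic_log` and `mse`, the one-hidden-layer tanh MLP with its size and learning rate, and the toy slip model that generates the data (the vehicle achieves only `1 - 0.1 * v` of the commanded yaw rate) are all assumptions made for illustration. We also assume that at test time the desired angular velocity is fed in the slot the IMU reading occupied during training.

```python
import math
import random


class TinyIKD:
    """Hypothetical one-hidden-layer MLP: (v_joy, omega_imu) -> predicted omega_joy."""

    def __init__(self, hidden=16, seed=0):
        rng = random.Random(seed)
        # Each hidden unit has two input weights: [joystick velocity, angular velocity].
        self.w1 = [[rng.gauss(0.0, 0.5), rng.gauss(0.0, 0.5)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.gauss(0.0, 0.5) for _ in range(hidden)]
        self.b2 = 0.0

    def _forward(self, v, omega):
        h = [math.tanh(w[0] * v + w[1] * omega + b) for w, b in zip(self.w1, self.b1)]
        return h, sum(a * hj for a, hj in zip(self.w2, h)) + self.b2

    def predict(self, v, omega):
        return self._forward(v, omega)[1]

    def fit(self, samples, epochs=200, lr=0.01, seed=0):
        """Plain SGD on 0.5 * (prediction - omega_joy) ** 2, one sample at a time."""
        rng = random.Random(seed)
        data = list(samples)
        for _ in range(epochs):
            rng.shuffle(data)
            for v, omega_imu, omega_joy in data:
                h, out = self._forward(v, omega_imu)
                err = out - omega_joy
                for j, hj in enumerate(h):
                    # Backprop through tanh using the pre-update output weight.
                    grad_pre = err * self.w2[j] * (1.0 - hj * hj)
                    self.w2[j] -= lr * err * hj
                    self.w1[j][0] -= lr * grad_pre * v
                    self.w1[j][1] -= lr * grad_pre * omega_imu
                    self.b1[j] -= lr * grad_pre
                self.b2 -= lr * err
        return self


def make_synthetic_log(n=200, seed=1):
    """Toy (v_joy, omega_imu, omega_joy) tuples from a made-up understeer model."""
    rng = random.Random(seed)
    log = []
    for _ in range(n):
        v = rng.uniform(1.0, 3.0)
        omega_joy = rng.uniform(-1.0, 1.0)
        # Hypothetical slip: the vehicle achieves only (1 - 0.1 * v) of the commanded yaw rate.
        omega_imu = omega_joy * (1.0 - 0.1 * v)
        log.append((v, omega_imu, omega_joy))
    return log


def mse(model, samples):
    return sum((model.predict(v, w) - y) ** 2 for v, w, y in samples) / len(samples)


if __name__ == "__main__":
    log = make_synthetic_log()
    model = TinyIKD()
    print(f"train MSE before: {mse(model, log):.4f}")
    model.fit(log)  # training: IMU angular velocity in, joystick angular velocity out
    print(f"train MSE after:  {mse(model, log):.4f}")
    # Test time (assumed): feed the desired angular velocity instead of the IMU reading.
    # Under the toy model, achieving 0.4 at v = 2.0 needs a command of 0.4 / 0.8 = 0.5.
    print(f"corrected command: {model.predict(2.0, 0.4):.3f}")
```

Because the toy vehicle turns less than commanded, the learned inverse should ask for more angular velocity than the target (roughly 0.5 to achieve 0.4 at a joystick velocity of 2.0, in the toy model's units). A real model would be trained on logged drifting data rather than this synthetic slip law.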