简体中文 | English

如果觉得有用,不妨给个Star⭐️🌟支持一下吧~ 谢谢!

加微信(备注:StereoAlgorithm),拉你进群(群里超多大佬)

- ubuntu20.04+cuda11.1+cudnn8.2.1+TensorRT8.2.5.1 (test pass)

- ubuntu20.04+cuda11.1+cudnn8.2.1+TensorRT8.6.1.6 (FastACVNet_plus requied TensorRT8.6.1.6)

- ubuntu18.04+cuda10.2+cudnn8.2.1+TensorRT8.2.5.1 (test pass)

- nano,TX2,TX2-NX,Xavier-NX (test pass)

- For other environments, please try it yourself or join a group to understand

- 更改根目录下的CMakeLists.txt,设置tensorrt的安装目录

set(TensorRT_INCLUDE "/xxx/xxx/TensorRT-8.2.5.1/include" CACHE INTERNAL "TensorRT Library include location")

set(TensorRT_LIB "/xxx/xxx/TensorRT-8.2.5.1/lib" CACHE INTERNAL "TensorRT Library lib location")

-

默认opencv已安装,cuda,cudnn已安装

-

为了Debug默认编译

-g O0版本,如果为了加快速度请编译Release版本 -

使用Visual Studio Code快捷键编译(4,5二选其一):

ctrl+shift+B

- 使用命令行编译(4,5二选其一):

mkdir build

cd build

cmake ..

make -j6

- 如果使用vscode调试单个模块,更改launch.json中对应的"program"

-

(可选)首先使用process_image.py脚本将图像(1280,480)的图像裁剪,裁剪后的左右图像(640,480)保存在left_right_image文件夹下

-

left_right_image文件夹下的left*.jpg,right*.jpg图像名称写入stereo_calib.xml中,保证left,right顺序填写;

-

在更改你的棋盘格参数:1)纵向内角点数;2)横向内角点数, 3)棋盘格大小(mm),4)是否显示标定过程中的的图像

numCornersHor = 8; //水平方向棋盘格内角点个数 numCornersVer =11; //垂直方向棋盘格内角点个数 numSquares =25; //棋盘格宽高(这里默认是方格) rectifyImageSavePath = "Stereo_Calibration/rectifyImage" //标定校正为完成后左右图像存放的路径 imagelistfn="stereo_calib.xml" //待标定的左右图像路径 -

标定模块单独编译运行

cd Stereo_Calibration mkdir build&&cd build cmake ..&&make -j8 ./Stereo_Calibration -

在标定的过程中会显示左右图像的角点以及左右图像校正后拼接在一起的图像,可根据拼接后图像的绿色线来初步判断标定校正过程是否正确

-

最终在根目录下生成StereoCalibration.yml的标定文件

- 在标定显示的过程中,可以将角点检测有偏差的图像(一般是远处的角点比较小的)去除后重新标定

-

下载 RAFT-Stereo

-

因为F.grid_sample op直到onnx 16才支持,这里转换为mmcv的bilinear_grid_sample的op

1)需要安装mmcv;

2)F.grid_sample替换为bilinear_grid_sample;

-

导出onnx模型

1) 导出sceneflow模型

(1)python3 export_onnx.py --restore_ckpt models/raftstereo-sceneflow.pth (2)onnxsim raftstereo-sceneflow_480_640.onnx raftstereo-sceneflow_480_640_sim.onnx (3)(option)polygraphy surgeon sanitize --fold-constants raftstereo-sceneflow_480_640_sim.onnx -o raftstereo-sceneflow_480_640_sim_ploy.onnx2)导出realtime模型

(1)python3 export_onnx.py --restore_ckpt models/raftstereo-realtime.pth --shared_backbone --n_downsample 3 --n_gru_layers 2 --slow_fast_gru --valid_iters 7 --mixed_precision (2)onnxsim raftstereo-realtime_480_640.onnx raftstereo-realtime_480_640_sim.onnx (3)(option)polygraphy surgeon sanitize --fold-constants raftstereo-realtime_480_640_sim.onnx -o raftstereo-realtime_480_640_sim_ploy.onnx

([Baidu Drive](链接: https://pan.baidu.com/s/1y74fIsZNsLj_kXp6ziYs6w 提取码: 6cj6))

//双目相机标定文件

char* stereo_calibration_path="StereoCalibration.yml";

//onnx模型路径,自动将onnx模型转为engine模型

char* strero_engine_path="raftstereo-sceneflow_480_640_poly.onnx";

//相机采集的左图像

cv::Mat imageL=cv::imread("left0.jpg");

//相机采集的右图像

cv::Mat imageR=cv::imread("right0.jpg");

cd StereoAlgorithms

mkdir build&&cd build

cmake ..&&make -j8

./build/RAFTStereo/test/raft_stereo_demo

- 会在运行目录下保存视差图disparity.jpg

- 会在运行目录下保存pointcloud.txt文件,每一行表示为x,y,z,r,g,b

| 模型 | 说明 | 备注 |

|---|---|---|

| raftstereo-sceneflow_480_640_poly.onnx | sceneflow双目深度估计模型 | ([Baidu Drive](链接: https://pan.baidu.com/s/1tgeqPmjPeKmCDQ2NGJZMWQ code: hdiv)) |

| raftstereo-realtime_480_640_ploy.onnx | realtime双目深度估计模型 | 可自行下载模型进行转化 |

| 平台 | sceneflow(640*480)耗时 | realtime(640*480)耗时 | 说明 |

|---|---|---|---|

| 3090 | 38ms | 11ms | |

| 3060 | 83ms | 24ms | |

| jetson Xavier-NX | 120ms | sceneflow未尝试 | |

| jetson TX2-NX | 400ms | sceneflow未尝试 | |

| jetson Nano | 支持 |

- https://github.com/princeton-vl/RAFT-Stereo

- https://github.com/nburrus/RAFT-Stereo

Stereo depth estimation on the cones images from the Middlebury dataset (https://vision.middlebury.edu/stereo/data/scenes2003/)

([Baidu Drive](链接: https://pan.baidu.com/s/1M99QhZySeMK2FjaMz-WZxg 提取码: rs3j))

//双目相机标定文件

char* stereo_calibration_path="StereoCalibration.yml";

//onnx模型路径,自动将onnx模型转为engine模型

char* strero_engine_path="model_float32.onnx";

//相机采集的左图像

cv::Mat imageL=cv::imread("left0.jpg");

//相机采集的右图像

cv::Mat imageR=cv::imread("right0.jpg");

cd StereoAlgorithms

mkdir build&&cd build

cmake ..&&make -j8

./build/HitNet/test/HitNet_demo

- 会在运行目录下保存视差图disparity.jpg

- 会在运行目录下保存pointcloud.txt文件,每一行表示为x,y,z,r,g,b

| 平台 | middlebury_d400(640*480)耗时 | flyingthings_finalpass_xl(640*480)耗时 | 说明 |

|---|---|---|---|

| 3090 | 15ms | ||

| 3060 | 未测试 | ||

| jetson Xavier-NX | 未测试 | ||

| jetson TX2-NX | 未测试 | ||

| jetson Nano | 未测试 |

- https://github.com/PINTO0309/PINTO_model_zoo/tree/main/142_HITNET

- https://github.com/iwatake2222/play_with_tensorrt/tree/master/pj_tensorrt_depth_stereo_hitnet

- https://github.com/ibaiGorordo/ONNX-HITNET-Stereo-Depth-estimation

([Baidu Drive](链接: https://pan.baidu.com/s/1GdvBmbx9NCONVGiaSThK1g 提取码: damr))

//双目相机标定文件

char* stereo_calibration_path="StereoCalibration.yml";

//onnx模型路径,自动将onnx模型转为engine模型

char* strero_engine_path="crestereo_init_iter10_480x640.onnx";

//相机采集的左图像

cv::Mat imageL=cv::imread("left0.jpg");

//相机采集的右图像

cv::Mat imageR=cv::imread("right0.jpg");

cd StereoAlgorithms

mkdir build&&cd build

cmake ..&&make -j8

./build/CREStereo/test/crestereo_demo

- 会在运行目录下保存视差图disparity.jpg;

- 会在运行目录下保存pointcloud.txt文件,每一行表示为x,y,z,r,g,b;

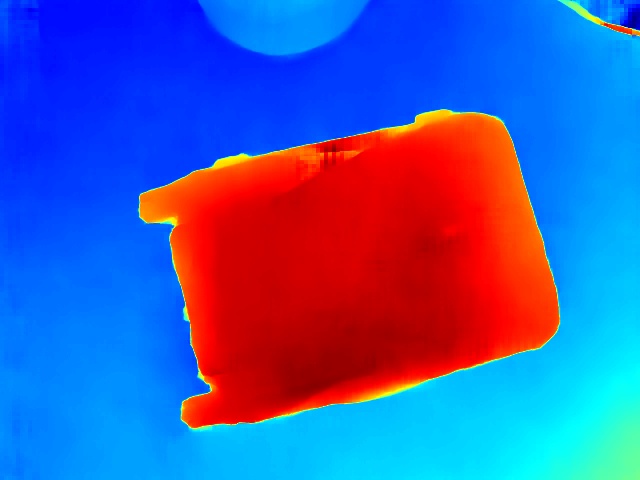

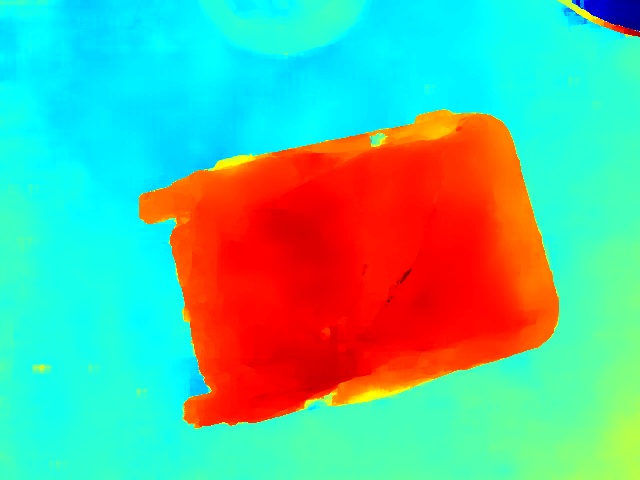

- 会在运行目录下保存heatmap.jpg热力图;

| 平台 | iter2_480x640 | iter5_480x640 | iter10_480x640 | 说明 |

|---|---|---|---|---|

| 3090 | 12ms | 23ms | 42ms | |

| 3060 | 未测试 | |||

| jetson Xavier-NX | 未测试 | |||

| jetson TX2-NX | 未测试 | |||

| jetson Nano | 未测试 |

- https://github.com/megvii-research/CREStereo

- https://github.com/ibaiGorordo/ONNX-CREStereo-Depth-Estimation

- https://github.com/PINTO0309/PINTO_model_zoo/tree/main/284_CREStereo

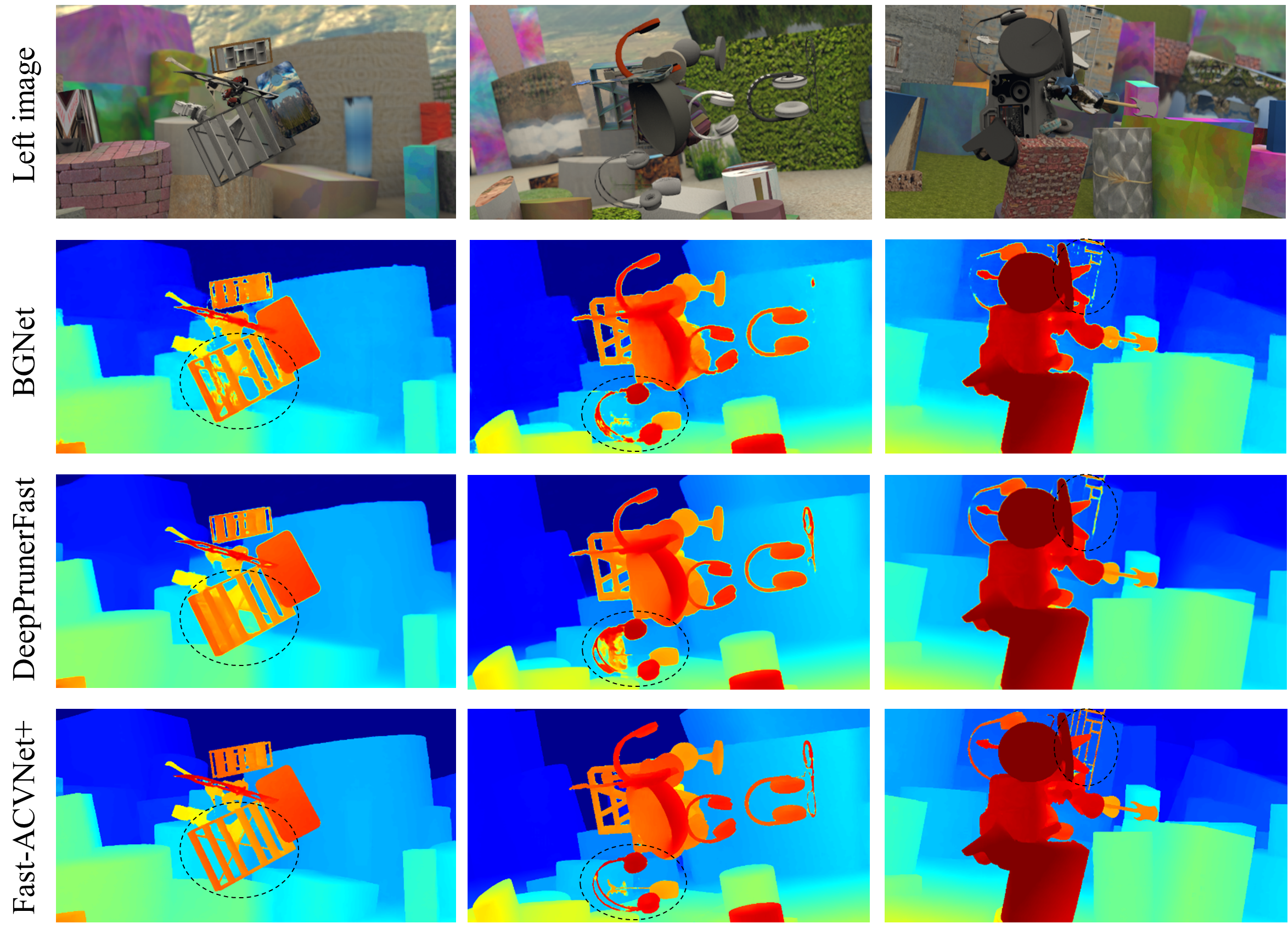

([Baidu Drive](链接: https://pan.baidu.com/s/1gQ32lQS3YoXLVG5u9o5tPw 提取码: ey7e))

//双目相机标定文件

char* stereo_calibration_path="StereoCalibration.yml";

//onnx模型路径,自动将onnx模型转为engine模型

char* strero_engine_path="fast_acvnet_plus_generalization_opset16_480x640.onnx";

//相机采集的左图像

cv::Mat imageL=cv::imread("left0.jpg");

//相机采集的右图像

cv::Mat imageR=cv::imread("right0.jpg");

根目录添加:add_subdirectory("FastACVNet_plus")

cd StereoAlgorithms

mkdir build&&cd build

cmake ..&&make -j8

./build/FastACVNet_plus/test/fastacvnet_plus_demo

- 会在运行目录下保存视差图disparity.jpg;

- 会在运行目录下保存pointcloud.txt文件,每一行表示为x,y,z,r,g,b;

- 会在运行目录下保存heatmap.jpg热力图;

| 平台 | generalization_opset16_480x640 | 说明 |

|---|---|---|

| 3090 | 12ms | |

| 3060 | 未测试 | |

| jetson Xavier-NX | 未测试 | |

| jetson TX2-NX | 未测试 | |

| jetson Nano | 未测试 |

- https://github.com/gangweiX/Fast-ACVNet

- https://github.com/ibaiGorordo/ONNX-FastACVNet-Depth-Estimation/tree/main

- https://github.com/ibaiGorordo/ONNX-ACVNet-Stereo-Depth-Estimation

- https://github.com/PINTO0309/PINTO_model_zoo/tree/main/338_Fast-ACVNet