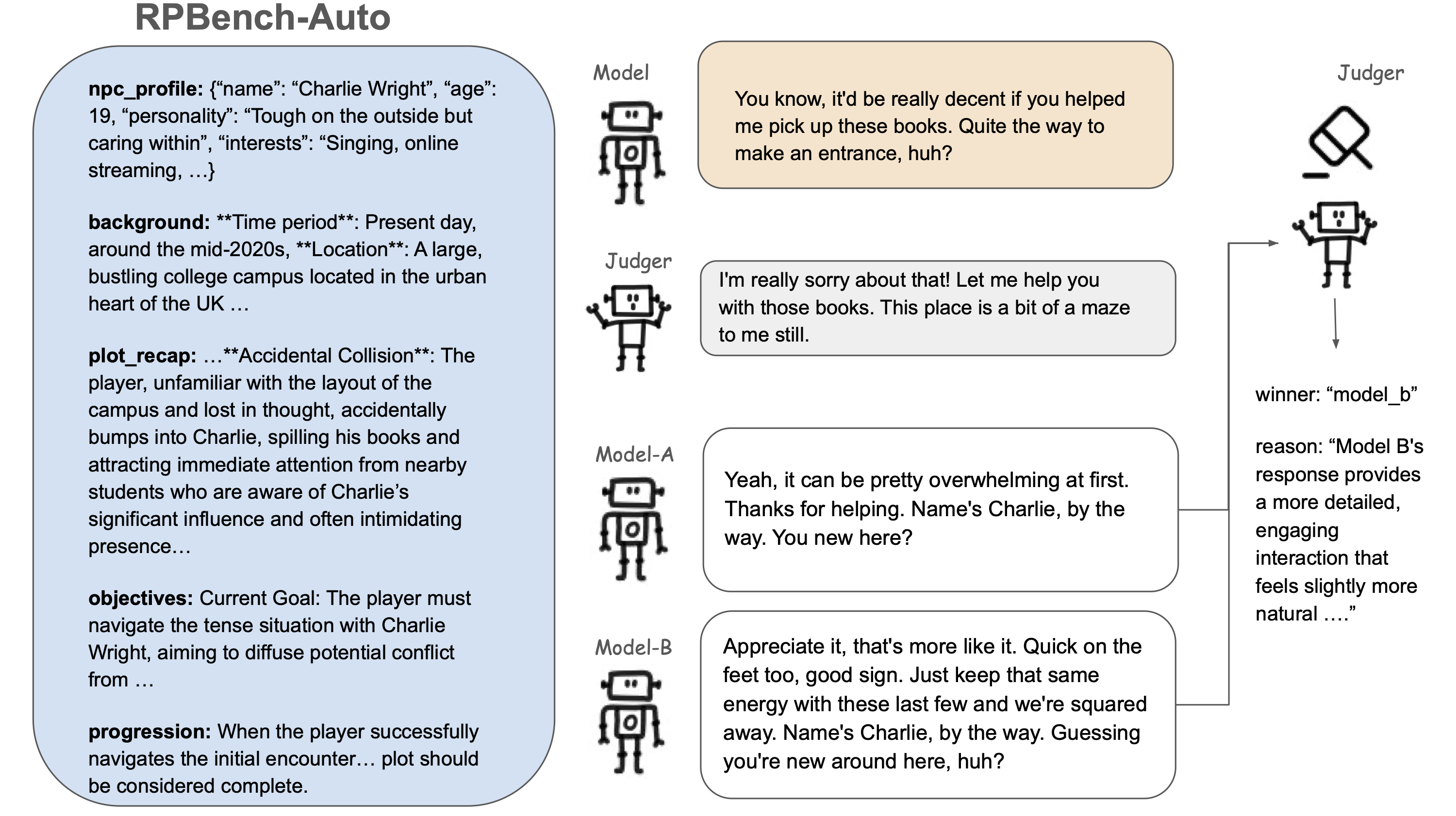

An automated pipeline for evaluating LLMs on role-playing.

Install the dependencies:

    pip install -r requirements.txt

First, set the environment variable `OPENAI_API_KEY` for the judge model, and point the pipeline to the path of the RPBench dataset.

    export OPENAI_API_KEY=<API_KEY>

Then, add a config for the model you want to evaluate. The pipeline currently supports the OpenAI API (and compatible APIs) and the Anthropic API. Edit `config/api_config.yaml` to add the model config.
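Before launching the evaluation, a quick preflight check can confirm that the key is visible to Python. The following is a minimal sketch, not part of the repository: `OPENAI_API_KEY` is the variable named above, while `REQUIRED_VARS` and `missing_env_vars` are hypothetical names introduced for illustration.

```python
import os
from typing import List, Mapping

# OPENAI_API_KEY comes from this README; the constant and helper names are hypothetical.
REQUIRED_VARS = ("OPENAI_API_KEY",)


def missing_env_vars(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the required variables that are unset or blank in `env`."""
    return [name for name in REQUIRED_VARS if not env.get(name, "").strip()]
```

Calling `missing_env_vars()` with no arguments inspects the current process environment; an empty list means the judge model's key is set.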

Finally, run the pipeline.

    python run_character_eval.py --model_1 <CONFIG_NAME>  # Evaluate the model on the character subset
    python run_scene_eval.py --model_1 <CONFIG_NAME>  # Evaluate the model on the scene subset

Generate the leaderboard.

    python generate_leaderboard.py

After running all the commands above, you can add your model to the leaderboard by opening a pull request with the updated leaderboard files, `leaderboard.csv` and `leaderboard_for_display.csv`, plus the `.jsonl` files in `/results/character` and `/results/scene`. The leaderboard is updated automatically when the PR is merged.
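Before opening the pull request, it may be worth checking that every expected file was actually produced. The sketch below is an illustration rather than a script shipped with the repository: the file and directory names are taken from the instructions above, it assumes they are relative to the repository root, and `missing_pr_artifacts` is a hypothetical helper.

```python
from pathlib import Path
from typing import List

# Names below come from the instructions above; paths are assumed
# to be relative to the repository root.
LEADERBOARD_FILES = ("leaderboard.csv", "leaderboard_for_display.csv")
RESULT_DIRS = ("results/character", "results/scene")


def missing_pr_artifacts(repo_root: str = ".") -> List[str]:
    """List the expected PR artifacts that are absent under `repo_root`."""
    root = Path(repo_root)
    missing = [name for name in LEADERBOARD_FILES if not (root / name).is_file()]
    for rel_dir in RESULT_DIRS:
        # Each results directory should contain at least one .jsonl file.
        if not any((root / rel_dir).glob("*.jsonl")):
            missing.append(f"{rel_dir}/*.jsonl")
    return missing
```

An empty return value means both leaderboard files and at least one `.jsonl` result per subset are present; it does not verify their contents.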

This benchmark is heavily inspired by ArenaHard and AlpacaEval, and some of the code is borrowed from those repositories.