Project Page: https://cs.stanford.edu/~xtiange/projects/occnerf/

Paper: https://arxiv.org/pdf/2308.04622.pdf

- Create and activate a virtual environment.

conda create --name occnerf python=3.7
conda activate occnerf

- Install the required packages (the same ones HumanNeRF requires).

pip install -r requirements.txt

- Install PyTorch3D by following its official instructions.

- Build gridencoder by following the instructions provided in torch-ngp.
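Once the steps above are done, a quick sanity check can confirm that the interpreter and the installed packages are visible from the occnerf environment. The sketch below is illustrative and not part of the repository; the import names torch, pytorch3d, and gridencoder are assumptions about how the packages are exposed after installation, and the helper names are hypothetical.

```python
import importlib.util
import sys

# Import names are assumptions; adjust them if your installation differs.
EXPECTED_MODULES = ["torch", "pytorch3d", "gridencoder"]


def missing_modules(names):
    """Return the top-level module names that cannot be located for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def python_matches(expected=(3, 7)):
    """Compare the running interpreter with the version passed to conda create."""
    return tuple(sys.version_info[:2]) == tuple(expected)


if __name__ == "__main__":
    if not python_matches():
        print("Warning: expected Python 3.7, found", sys.version.split()[0])
    for name in missing_modules(EXPECTED_MODULES):
        print("Missing module:", name)
```

Running the script inside the activated environment prints nothing when the version matches and every module is found.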

Download the gender-neutral SMPL model from here, and unpack mpips_smplify_public_v2.zip.

Copy the SMPL model.

SMPL_DIR=/path/to/smpl
MODEL_DIR=$SMPL_DIR/smplify_public/code/models
cp $MODEL_DIR/basicModel_neutral_lbs_10_207_0_v1.0.0.pkl third_parties/smpl/models

Follow this page to remove Chumpy objects from the SMPL model.
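To confirm that the conversion worked, one option is to scan the pickle file for references to the chumpy package without unpickling it. This is a minimal sketch and not part of the repository: the helper names mentions_module and has_chumpy are hypothetical, and the check is a heuristic that looks at string arguments in the pickle opcode stream, so an unrelated string starting with "chumpy" would also be flagged.

```python
import pickletools


def mentions_module(pickle_bytes, module="chumpy"):
    """Heuristically detect references to `module` in a pickle's opcode stream."""
    for _opcode, arg, _pos in pickletools.genops(pickle_bytes):
        if not isinstance(arg, str):
            continue
        # Protocols 0-3 store class references as "module name" (GLOBAL);
        # protocol 4+ pushes the module name as a separate string.
        if arg == module or arg.startswith((module + ".", module + " ")):
            return True
    return False


def has_chumpy(path):
    """Return True if the pickle file at `path` still appears to reference chumpy."""
    with open(path, "rb") as f:
        return mentions_module(f.read(), "chumpy")
```

For example, has_chumpy("third_parties/smpl/models/basicModel_neutral_lbs_10_207_0_v1.0.0.pkl") would be expected to return False once the Chumpy objects have been removed.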

Below, we use subject 387 as a running example.

First, download the ZJU-Mocap dataset from here.

Second, edit the YAML file for subject 387 at tools/prepare_zju_mocap/387.yaml. In particular, set zju_mocap_path to the directory path of the ZJU-Mocap dataset.

dataset:
    zju_mocap_path: /path/to/zju_mocap
    subject: '387'
    sex: 'neutral'

...

Finally, run the data preprocessing script.

cd tools/prepare_zju_mocap
python prepare_dataset.py --cfg 387.yaml
cd ../../
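Before running the preprocessing script, it can help to confirm that the placeholder path in 387.yaml was actually replaced. The following is a rough sketch under stated assumptions, not part of the repository: it reads the value with a plain regular expression instead of a YAML parser, assumes the key sits on a single line without a trailing comment, and uses hypothetical helper names.

```python
import re

# Placeholder value shown in the example config.
PLACEHOLDER = "/path/to/zju_mocap"


def read_zju_mocap_path(yaml_text):
    """Extract the zju_mocap_path value from the config text, or None if absent."""
    match = re.search(r"^\s*zju_mocap_path:\s*(.+?)\s*$", yaml_text, flags=re.MULTILINE)
    if match is None:
        return None
    # Drop optional surrounding quotes.
    return match.group(1).strip("'\"")


def path_is_configured(yaml_text):
    """Return True once zju_mocap_path is set to something other than the placeholder."""
    value = read_zju_mocap_path(yaml_text)
    return value is not None and value != PLACEHOLDER
```

A typical use would be to read tools/prepare_zju_mocap/387.yaml as text and call path_is_configured on it before launching prepare_dataset.py.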

Download the two preprocessed sequences used in the paper from here.

Please respect OcMotion's original usage license.

To train a model:

python train.py --cfg configs/occnerf/zju_mocap/387/occnerf.yaml

Alternatively, you can update the script train.sh and run it.

Render the frame input (i.e., observed motion sequence).

python run.py \
    --type movement \
    --cfg configs/occnerf/zju_mocap/387/occnerf.yaml

Run free-viewpoint rendering on a particular frame (e.g., frame 128).

python run.py \
    --type freeview \
    --cfg configs/occnerf/zju_mocap/387/occnerf.yaml \
    freeview.frame_idx 128

Render the learned canonical appearance (T-pose).

python run.py \
    --type tpose \
    --cfg configs/occnerf/zju_mocap/387/occnerf.yaml

In addition, you can find the rendering scripts in scripts/zju_mocap.
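The three rendering modes differ only in the --type value and, for free-viewpoint rendering, the trailing frame index override. As an illustration, the sketch below assembles those commands from Python; it is not part of the repository, the function names are hypothetical, and it uses only the flags that appear in the commands above.

```python
import subprocess

CFG = "configs/occnerf/zju_mocap/387/occnerf.yaml"
RENDER_TYPES = ("movement", "freeview", "tpose")


def build_render_cmd(render_type, cfg=CFG, frame_idx=None):
    """Assemble the argument list for run.py for one rendering mode."""
    if render_type not in RENDER_TYPES:
        raise ValueError("unknown render type: %s" % render_type)
    cmd = ["python", "run.py", "--type", render_type, "--cfg", cfg]
    if frame_idx is not None:
        # Passed as a trailing key/value override, as in the freeview command.
        cmd += ["freeview.frame_idx", str(frame_idx)]
    return cmd


def render(render_type, frame_idx=None):
    """Run one rendering job from the repository root; raise on a non-zero exit code."""
    subprocess.run(build_render_cmd(render_type, frame_idx=frame_idx), check=True)
```

For example, render("freeview", frame_idx=128) would launch the same command as the free-viewpoint example.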

The implementation is based on HumanNeRF, which in turn draws on Neural Body, Neural Volumes, LPIPS, and YACS. We thank the authors for generously releasing their code.

If you find our work useful, please consider citing:

@InProceedings{Xiang_2023_OccNeRF,

author = {Xiang, Tiange and Sun, Adam and Wu, Jiajun and Adeli, Ehsan and Li, Fei-Fei},

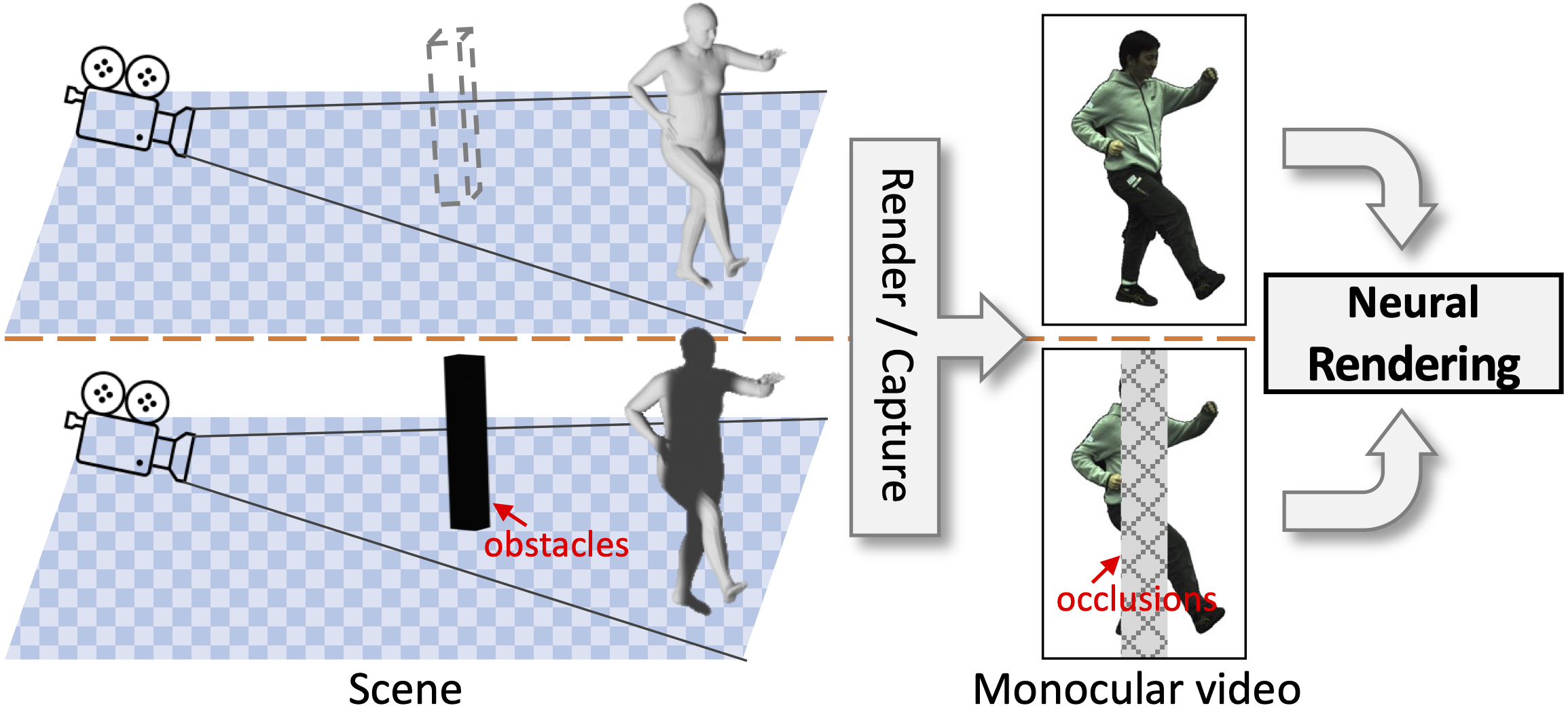

title = {Rendering Humans from Object-Occluded Monocular Videos},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

month = {October},

year = {2023}

}