Extension of the NeRF (Neural Radiance Fields) method using PyTorch (PyTorch Lightning).

Based on the official implementation: nerf

Benedikt Wiberg 1 *,

Cristian Chivriga 1 *,

Marian Loser 1,

Yujiao Shentu 1

1TUM

*Denotes equal contribution

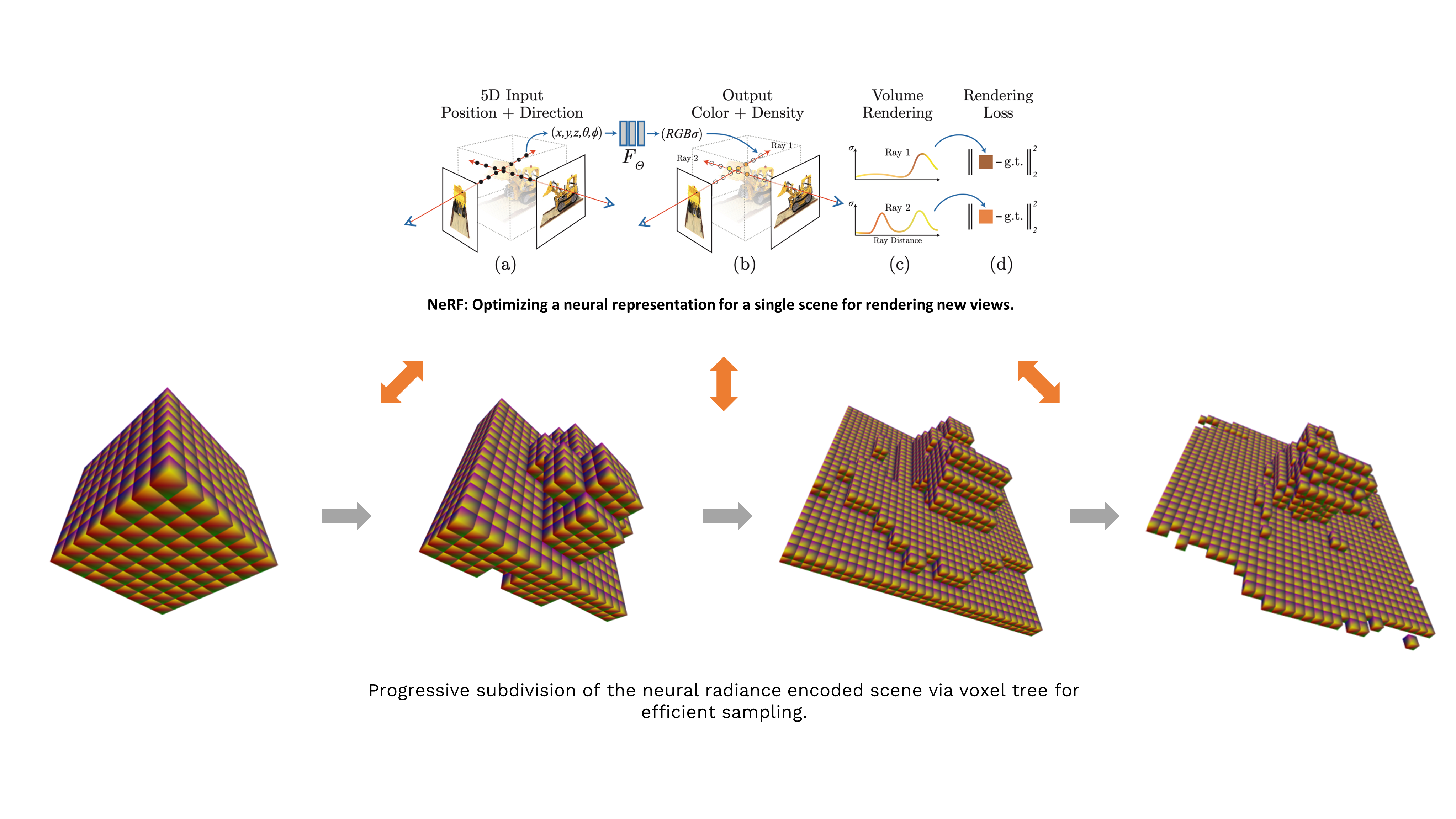

NeRF (Neural Radiance Fields) directly optimizes the parameters of a continuous 5D scene representation by minimizing the view-synthesis error against the captured images, given their camera poses.
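To make the optimization target concrete, here is a minimal sketch of the volume rendering quadrature from the NeRF paper and a mean squared error against a captured pixel. It is written in plain Python for readability and is not the code used in this repository; the function names `composite_ray` and `photometric_loss` are hypothetical.

```python
import math

def composite_ray(densities, colors, deltas):
    """Blend per-sample colors along one ray into a single pixel color.

    densities: volume density (sigma) at each sample
    colors:    RGB triple at each sample
    deltas:    distance between consecutive samples
    Hypothetical helper for illustration only.
    """
    transmittance = 1.0          # fraction of light that has not been absorbed yet
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this segment
        weight = transmittance * alpha           # contribution of this sample
        pixel = [p + weight * c for p, c in zip(pixel, rgb)]
        transmittance *= 1.0 - alpha             # light remaining after this segment
    return pixel

def photometric_loss(rendered, target):
    """Mean squared error between a rendered pixel and the captured pixel."""
    return sum((r - t) ** 2 for r, t in zip(rendered, target)) / len(target)

# Example: empty space followed by an opaque green surface.
rendered = composite_ray([0.0, 1e9], [(1, 0, 0), (0, 1, 0)], [1.0, 1.0])
loss = photometric_loss(rendered, [0.0, 1.0, 0.0])
```

In a real training loop the densities and colors would come from the network evaluated at 5D inputs (position and view direction), and the loss would be averaged over a batch of rays.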

Extracted Lego mesh with appearance.

The project extends and improves upon the original NeRF method for neural rendering and view synthesis, and is designed for rapid prototyping and experimentation. The main improvements are:

- Scene encoding through unstructured radiance volumes for efficient sampling via Axis-Aligned Bounding Boxes (AABBs) intersections.

- Mesh reconstruction with appearance through informed re-sampling based on the inverse normals of the scene geometry via Marching Cubes.

- Modular implementation that is 1.4x faster and up to twice as memory-efficient as the base implementation, NeRF-PyTorch.
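As an illustration of the first point, the sketch below shows the standard slab method for intersecting a ray with an axis-aligned bounding box, followed by placing samples only inside the hit interval. It is a plain-Python sketch under assumed conventions, not the repository's implementation; `ray_aabb_intersect` and `sample_depths` are hypothetical names.

```python
import math

def ray_aabb_intersect(origin, direction, box_min, box_max):
    """Slab test: return (t_near, t_far) for the ray origin + t * direction,
    or None if the ray misses the box. Hypothetical helper for illustration."""
    t_near, t_far = 0.0, math.inf   # only keep points in front of the ray origin
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this slab: it misses unless the origin lies inside it.
            if o < lo or o > hi:
                return None
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0          # order the entry and exit distances
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return None              # slab intervals do not overlap
    return t_near, t_far

def sample_depths(t_near, t_far, n):
    """Place n evenly spaced depths at bin midpoints inside [t_near, t_far]."""
    step = (t_far - t_near) / n
    return [t_near + (i + 0.5) * step for i in range(n)]

# Example: a ray looking down +z at a box spanning [-1, 1] on every axis.
hit = ray_aabb_intersect((0.0, 0.0, -3.0), (0.0, 0.0, 1.0),
                         (-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
if hit is not None:
    depths = sample_depths(hit[0], hit[1], 4)
```

Restricting samples to the interval returned by the intersection test avoids evaluating the network in empty space outside the box, which is the general motivation for AABB-based sampling.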

Install the dependencies via:

In a new conda or virtualenv environment, run

    pip install -r requirements.txt

In the root folder, run

    poetry install
    source .venv/bin/activate

In the root folder, run the script, assuming the dataset folder is named data/ inside your Drive folder (otherwise adjust it accordingly):

    . ./script.sh

Check out the provided data links in NeRF Original.

Your own data with COLMAP

- Install COLMAP following the installation guide.

- Prepare your images in a folder (60-80 images). Make sure that auto-focus is turned off.

- Run `python colmap_convert.py your_images_dir_path`.

- Edit the config file, e.g. `config/colmap.yml`, with the newly generated dataset path.

Get to know the configuration files under src/config, then create new ones to run your own experiments.

The training script can be invoked by running

    python train_nerf.py --config config/lego.yml

To resume training from the latest checkpoint:

    python train_nerf.py --log-checkpoint ../pretrained/colab-lego-nerf-high-res/default/version_0/

The following command generates a mesh with a high level of detail; use `python mesh_nerf.py --help` for more options:

    python mesh_nerf.py --log-checkpoint ../pretrained/colab-lego-nerf-high-res/default/version_0/ --checkpoint model_last.ckpt --save-dir ../data/meshes --limit 1.2 --res 480 --iso-level 32 --view-disparity-max-bound 1e0

If TensorBoard is properly installed, check your results in real time on localhost:6006 from your favorite browser:

    tensorboard --logdir logs/... --port 6006

Based on existing work:

- NeRF Original - Original work in TensorFlow; please also refer to the paper for extra information.

- NeRF PyTorch - PyTorch base code for the current repo.