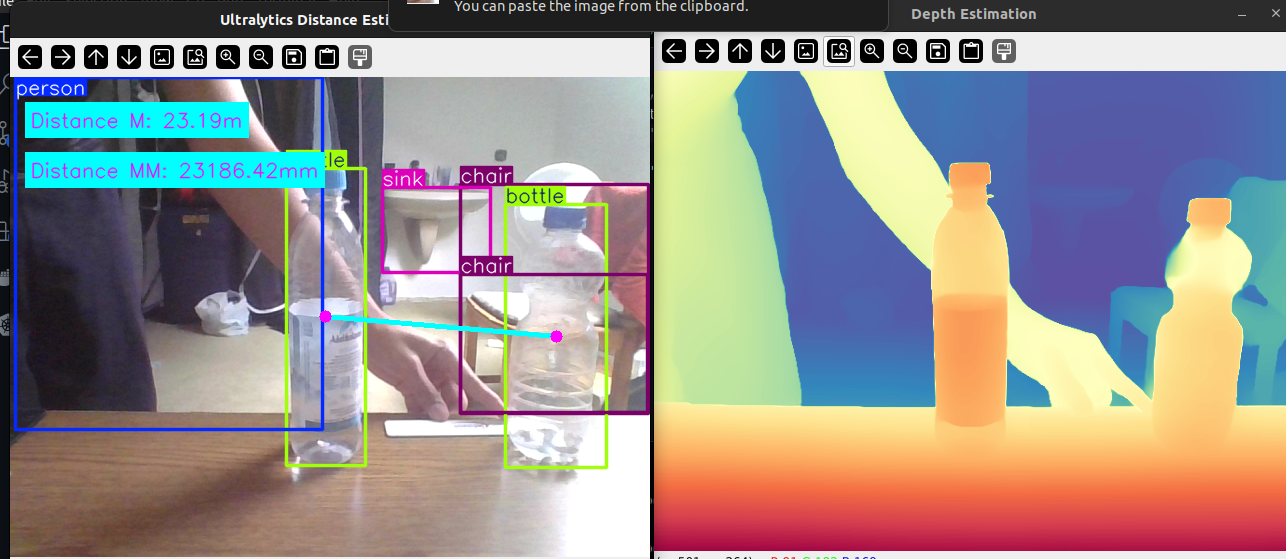

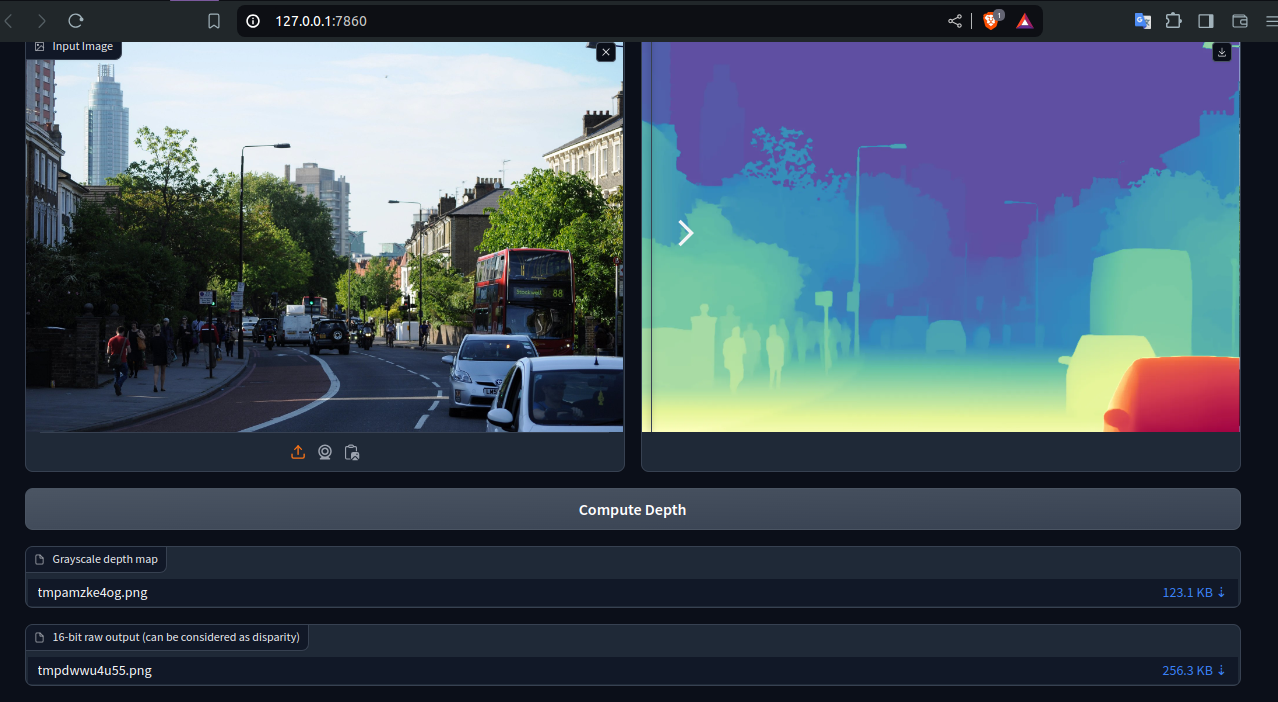

Monocular depth estimation is the task of estimating the depth value (distance relative to the camera) of each pixel from a single (monocular) RGB image. This challenging task is a key prerequisite for scene understanding in applications such as 3D scene reconstruction, autonomous driving, and augmented reality (AR).

Distance calculation measures the gap between two objects within a specified space. With Ultralytics YOLOv8, the distance between bounding boxes selected by the user is calculated from the bounding-box centroids.
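The centroid-based approach can be illustrated with a minimal sketch. It assumes boxes in `(x1, y1, x2, y2)` pixel coordinates (the "xyxy" convention) and measures plain Euclidean distance in pixels. It is not the Ultralytics implementation, and converting the result to real-world units would additionally require a scale factor or depth information.

```python
import math
from typing import Tuple

# Assumed box format: (x1, y1, x2, y2) in pixel coordinates ("xyxy").
# Illustrative sketch only, not the Ultralytics implementation.
Box = Tuple[float, float, float, float]


def centroid(box: Box) -> Tuple[float, float]:
    """Return the (x, y) center of an xyxy bounding box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def centroid_distance(box_a: Box, box_b: Box) -> float:
    """Euclidean distance in pixels between the centroids of two boxes."""
    (ax, ay), (bx, by) = centroid(box_a), centroid(box_b)
    return math.hypot(bx - ax, by - ay)


if __name__ == "__main__":
    person = (100, 50, 200, 250)  # centroid (150, 150)
    car = (400, 100, 600, 300)    # centroid (500, 200)
    print(f"{centroid_distance(person, car):.2f} px")  # prints "353.55 px"
```

Because the result is in pixels, the same physical gap appears smaller for objects farther from the camera, which is one reason to pair distance measurement with depth estimation.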

- Localization Precision: Improves the accuracy of spatial positioning in computer vision tasks.

- Size Estimation: Allows estimation of physical sizes for better contextual understanding.

- Scene Understanding: Contributes to a 3D understanding of the environment for improved decision-making.
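Size estimation and 3D scene understanding rely on combining 2D detections with depth. The following is a minimal sketch under stated assumptions: `depth` holds *metric* depth (for example, in meters) indexed as `depth[row][col]`, and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical calibration values that this repository does not provide. It back-projects two pixel centroids with the standard pinhole camera model and measures their 3D distance. The relative-depth checkpoints listed below do not produce metric depth, so their output would need conversion before distances come out in real units.

```python
import math
from typing import Tuple

Point2D = Tuple[float, float]


def backproject(u: float, v: float, z: float,
                fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Pinhole model: pixel (u, v) at depth z -> (X, Y, Z) in camera coordinates."""
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)


def object_distance_3d(depth, c_a: Point2D, c_b: Point2D,
                       fx: float, fy: float, cx: float, cy: float) -> float:
    """Approximate 3D distance between two objects from their pixel centroids.

    Assumes `depth` is a 2D array-like (NumPy array or list of lists) of
    metric depth values, indexed as depth[row][col], i.e. depth[y][x].
    """
    points = []
    for u, v in (c_a, c_b):
        # Sample the depth map at the (rounded) centroid pixel.
        z = float(depth[int(round(v))][int(round(u))])
        points.append(backproject(u, v, z, fx, fy, cx, cy))
    return math.dist(points[0], points[1])


if __name__ == "__main__":
    # Toy data: a flat wall 2 m from a 640x480 camera with hypothetical intrinsics.
    depth = [[2.0] * 640 for _ in range(480)]
    print(object_distance_3d(depth, (320, 240), (570, 240),
                             fx=500, fy=500, cx=320, cy=240))  # prints 1.0
```

Sampling a single pixel is the simplest choice; taking the median depth inside each bounding box would be more robust to noise at object edges.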

Download one of the four provided models of varying scales for robust relative depth estimation and place it in the `checkpoints` directory:

| Model | Params | Checkpoint |
|---|---|---|
| Depth-Anything-V2-Small | 24.8M | Download |
| Depth-Anything-V2-Base | 97.5M | Download |
| Depth-Anything-V2-Large | 335.3M | Download |
| Depth-Anything-V2-Giant | 1.3B | Download |

```bash
git clone https://github.com/zubi9/depth_and_distance_measure.git
cd depth_and_distance_measure
pip install -r requirements.txt
```

To run the script for side-by-side YOLOv8 distance measurement and monocular depth estimation with a webcam:

```bash
python dnd_live_only.py
```

To run depth estimation on images:

```bash
python run.py --encoder <vits | vitb | vitl | vitg> --img-path <path> --outdir <outdir> [--input-size <size>] [--pred-only] [--grayscale]
```

Options:

- `--encoder`: Choose from `vits`, `vitb`, `vitl`, `vitg`.
- `--img-path`: Path to an image directory, a single image, or a text file containing image paths.
- `--input-size` (optional): Default is `518`. Increase for finer results.
- `--pred-only` (optional): Only save the predicted depth map.
- `--grayscale` (optional): Save the grayscale depth map without applying a color palette.

Example:

```bash
python run.py --encoder vitg --img-path assets/examples --outdir depth_vis
```

To run depth estimation on videos:

```bash
python run_video.py --encoder vitg --video-path assets/examples_video --outdir video_depth_vis
```

Note: The larger models provide better temporal consistency on videos.
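The `--grayscale` option of `run.py` saves the depth map without a color palette. One common way to turn a floating-point depth map into an 8-bit grayscale image is min-max normalization, sketched below under the assumption that the prediction is a NumPy array. This is an illustration of the technique, not necessarily what `run.py` does internally.

```python
import numpy as np


def depth_to_uint8(depth: np.ndarray) -> np.ndarray:
    """Min-max normalize a float depth map to an 8-bit grayscale image."""
    d_min, d_max = float(depth.min()), float(depth.max())
    if d_max - d_min < 1e-8:
        # Constant map: avoid dividing by zero and return an all-black image.
        return np.zeros(depth.shape, dtype=np.uint8)
    scaled = (depth - d_min) / (d_max - d_min) * 255.0
    return scaled.astype(np.uint8)  # astype truncates toward zero


if __name__ == "__main__":
    pred = np.array([[0.5, 1.0], [1.5, 2.5]])
    print(depth_to_uint8(pred).tolist())  # prints [[0, 63], [127, 255]]
```

Normalizing each image independently keeps the full 0-255 range per output, but it also means gray levels are not comparable across images or video frames.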

To use the Gradio demo locally:

```bash
python app.py
```

If you find this project useful, please consider citing:

```bibtex
@article{depth_anything_v2,
  title={Depth Anything V2},
  author={Yang, Lihe and Kang, Bingyi and Huang, Zilong and Zhao, Zhen and Xu, Xiaogang and Feng, Jiashi and Zhao, Hengshuang},
  journal={arXiv preprint arXiv:},
  year={2024}
}
```

- YOLOv8: Ultralytics GitHub
- Apache License
- GNU License